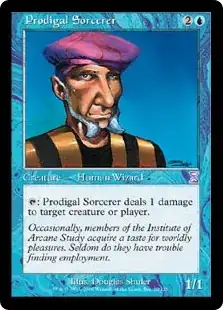

I'm building software that recognizes Magic: The Gathering cards from a still picture or a live camera feed.

The code scans an image for rectangular shapes (cards) and calculates a 64-bit perceptual hash for each one. These hashes are compared against a precompiled database of approximately 40,000 hashes covering all the Magic cards. The hash is a DCT-based perceptual hashing algorithm (somewhat like JPEG), which I can explain further.
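For context, a DCT-based 64-bit perceptual hash is commonly computed roughly as follows. This is a minimal sketch of the usual pHash-style construction, not necessarily the exact algorithm used here. The function name `dct_phash`, the 32x32 grayscale input, and thresholding against the median of the AC coefficients are all assumptions for illustration.

```python
import math

def dct_phash(gray, hash_size=8):
    """Hypothetical helper: 64-bit hash from a square grayscale image.

    gray is a square 2D list of luminance values (e.g. 32x32), already
    cropped, converted to grayscale and resized by the caller.
    """
    n = len(gray)

    def alpha(u):
        # Orthonormal DCT-II scaling factor
        return math.sqrt(1.0 / n) if u == 0 else math.sqrt(2.0 / n)

    # DCT-II basis, computed only for the lowest hash_size frequencies
    basis = [[alpha(u) * math.cos(math.pi * (2 * x + 1) * u / (2 * n))
              for x in range(n)]
             for u in range(hash_size)]

    # Low-frequency 2D DCT coefficients (top-left hash_size x hash_size block)
    coeffs = []
    for u in range(hash_size):
        for v in range(hash_size):
            s = 0.0
            for y in range(n):
                row, bu = gray[y], basis[u][y]
                for x in range(n):
                    s += row[x] * bu * basis[v][x]
            coeffs.append(s)

    # Threshold against the median of the AC terms (the DC term is skipped
    # when computing the median because it only reflects overall brightness)
    ac = sorted(coeffs[1:])
    median = ac[len(ac) // 2]

    bits = 0
    for c in coeffs:
        bits = (bits << 1) | (1 if c > median else 0)
    return bits
```

Because each bit only records whether a low-frequency coefficient is above or below the median, cards that share the same frame layout and differ only in fine detail can end up with nearly identical hashes.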

When the database is compiled, I also compute the average Hamming distance between the individual card hashes and its standard deviation. The average Hamming distance is about 24 bits, and the standard deviation is around 5.3 bits.
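As a rough illustration of these statistics, the Hamming distance between two hashes is the number of set bits in their XOR, and the mean and standard deviation can be taken over all pairs. The helper names `hamming` and `pairwise_stats` are hypothetical, and the use of the population standard deviation is an assumption.

```python
from itertools import combinations
from statistics import mean, pstdev

def hamming(a, b):
    """Number of differing bits between two integer hashes."""
    return bin(a ^ b).count("1")

def pairwise_stats(hashes):
    """Mean and population standard deviation of all pairwise distances."""
    dists = [hamming(a, b) for a, b in combinations(hashes, 2)]
    return mean(dists), pstdev(dists)

# Tiny worked example: 0b1011 XOR 0b0010 = 0b1001, which has two set bits
print(hamming(0b1011, 0b0010))
```

With about 40,000 hashes there are roughly 800 million pairs, so a pure-Python loop like this is only practical on a random sample. For comparison, two independent, uniformly random 64-bit values differ in 32 bits on average with a standard deviation of 4, so an average of 24 may indicate that the hash bits are biased or correlated across cards.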

Several pairs of cards in the database differ by only 2 bits, and a few by only 1 bit. This means it will be hard to tell all the cards apart even from the reference images, and harder still from an actual photo of a card.

I'm wondering whether I could use other or better approaches to narrow down the potential candidates for recognition. I've considered a second step, perhaps with a bit of text recognition, but it seems cumbersome and probably not viable, since text recognition will be hard on blurry camera shots. I've also considered building the hash database from higher-contrast images (currently it is built from the original images from Scryfall).
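One way to frame the "second step" idea is to use the hash only to produce a shortlist, and to run a more expensive check only when the best match is not clearly ahead of the runner-up. The sketch below is an assumption about how that could look, not an existing part of the code. The names `shortlist` and `is_ambiguous`, the value `k=5`, and the 4-bit margin are hypothetical and would need tuning against real photos.

```python
import heapq

def shortlist(query_hash, database, k=5):
    """Return the k nearest (distance, name) pairs, closest first.

    database maps a card name to its integer hash.
    """
    scored = ((bin(query_hash ^ h).count("1"), name)
              for name, h in database.items())
    return heapq.nsmallest(k, scored)

def is_ambiguous(candidates, margin=4):
    """True when the runner-up is within `margin` bits of the best match."""
    return len(candidates) > 1 and candidates[1][0] - candidates[0][0] < margin

# Toy database with 4-bit hashes, purely for illustration
db = {"card_a": 0b0000, "card_b": 0b0001, "card_c": 0b1111}
best = shortlist(0b0000, db, k=2)
print(best)                # [(0, 'card_a'), (1, 'card_b')]
print(is_ambiguous(best))  # True: only 1 bit separates the top two
```

Only the ambiguous cases would then need the slower second stage (text recognition, a larger hash, or some other comparison restricted to the shortlisted cards).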

Do you guys have ideas for other algorithms or adjustments I could make to better discriminate between so many similar objects?

Let me know if you want me to post the steps of the algorithm.