I'm sure this has been touched upon by a number of questions, but I'm struggling to draw the boundaries between code, data and configuration versions when working with a large DAG (think Airflow or Dagster).

There are a few things that change over time:

- The source data (data always changes over time)

- The code (code improvements, some logic changes)

- The environment (updating dependencies)

- The configuration (similar to code changes, but a configuration is more similar to an experiment than to a strict upgrade).

Each run of the DAG generates new assets.

My problem is that I am finding it hard to answer questions like:

- "How did the config change affect asset `x`?"

- "Are the changes to asset `y` due to the data changes or the code changes? Can we run the new code against the old data? Can we run the new data against the old code?"

- "Can we hot-swap asset `z` for the version from last week?"
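To make these questions concrete, here is a minimal sketch of the bookkeeping they imply: tag every run with one version per thing that can change, then diff two runs to see which of them moved. Everything here (`RunVersion`, `config_hash`, `changed_axes`, the example IDs) is hypothetical and not part of Airflow or Dagster.

```python
from dataclasses import dataclass, fields
import hashlib
import json


@dataclass(frozen=True)
class RunVersion:
    """Hypothetical record of the four things that can change between runs."""
    data: str    # assumed: snapshot ID or content hash of the source data
    code: str    # assumed: git commit SHA
    env: str     # assumed: hash of the dependency lockfile
    config: str  # hash of the run configuration


def config_hash(config: dict) -> str:
    """Stable short hash of a config dict; key order does not matter."""
    payload = json.dumps(config, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()[:12]


def changed_axes(old: RunVersion, new: RunVersion) -> list[str]:
    """Names of the version fields that differ between two runs."""
    return [
        f.name
        for f in fields(RunVersion)
        if getattr(old, f.name) != getattr(new, f.name)
    ]


last_week = RunVersion(
    data="snap-01", code="abc123", env="lock-1",
    config=config_hash({"threshold": 0.5}),
)
today = RunVersion(
    data="snap-02", code="abc123", env="lock-1",
    config=config_hash({"threshold": 0.7}),
)
print(changed_axes(last_week, today))  # ['data', 'config']
```

If more than one axis changed between two runs, as in this example, the difference in an asset cannot be attributed to a single cause without a rerun that varies one axis at a time (e.g. new code against the old data snapshot).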

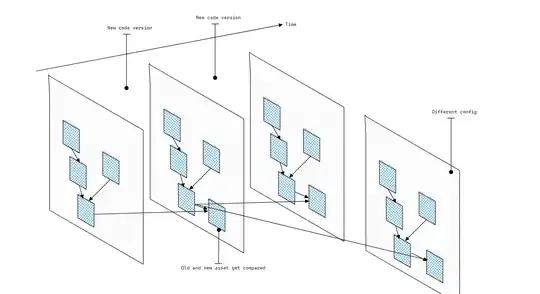

This is generating a very complicated mental model where all versions of the DAG, present and past, and all their materializations reference each other.

Does anyone have guidance on how to manage this complexity?

EDIT: More thoughts

I guess the main challenge I have is tracking and referencing assets across DAG runs.

It's all well and good to say "asset x depends on assets x1, x2, x3", but it's hard to express cross-run dependencies in the pipelining tool itself.

dbt has this nice `{{ this }}` variable that allows you to reference the current version of a table in the logic that updates the table.
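As a rough sketch of the kind of cross-run reference I mean (a hypothetical in-memory `AssetStore`, not an API from dbt, Airflow or Dagster): each materialization is keyed by asset name and run ID, so an asset's update logic can read its own previous version, and any past version can be looked up by run.

```python
from typing import Any, Optional


class AssetStore:
    """Hypothetical in-memory store of materializations per asset, in run order."""

    def __init__(self) -> None:
        self._history: dict[str, list[tuple[str, Any]]] = {}

    def materialize(self, asset: str, run_id: str, value: Any) -> None:
        """Record a new version of `asset` produced by `run_id`."""
        self._history.setdefault(asset, []).append((run_id, value))

    def latest(self, asset: str) -> Optional[Any]:
        """Most recent version of `asset`, or None if it was never materialized."""
        history = self._history.get(asset, [])
        return history[-1][1] if history else None

    def at_run(self, asset: str, run_id: str) -> Any:
        """Version of `asset` produced by a specific past run."""
        for rid, value in self._history.get(asset, []):
            if rid == run_id:
                return value
        raise KeyError((asset, run_id))


def update_running_total(store: AssetStore, run_id: str, new_rows: list[int]) -> None:
    """Self-referencing asset: the new version is built from the previous one."""
    previous = store.latest("running_total") or 0
    store.materialize("running_total", run_id, previous + sum(new_rows))


store = AssetStore()
update_running_total(store, "run-1", [1, 2])
update_running_total(store, "run-2", [3])
print(store.latest("running_total"))           # 6
print(store.at_run("running_total", "run-1"))  # 3
```

Here `latest` plays a role loosely similar to `{{ this }}`, while `at_run` is the "give me asset z from last week" lookup.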