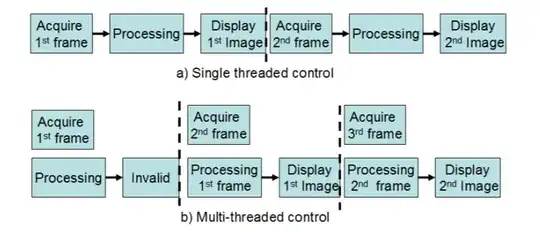

I hope I can explain my question clearly. I'm planning to do some real-time 2D grayscale image acquisition, and after reading some articles I noticed that many people use buffering, multithreading, or even parallel processing. In the figure, the top row shows how a single-threaded program performs one task at a time, and the bottom row shows how the idle time can be used to speed up image formation.

Basically, my program needs to send trigger signals to a line-scan camera, receive the lines, process them, assemble them into an image frame, and plot that frame in real time. An alternative is to receive a whole frame per trigger.

So let's say we have 1024 points in the X direction and 1024 in the Y direction on the plane to be scanned. What would be an efficient way to acquire the next frame while the current one is still being processed? The figure claims to achieve this using multithreading. There is a paper about it, but it is a bit long.

I want to use C# with async/await since it will be a GUI application. In that case, do we still need explicit threading, or will the Task library take care of it? I would appreciate hearing from anyone who has experience with this kind of situation. An example mimicking it would help a lot.
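
To make the question more concrete, here is a rough sketch of the kind of overlap I have in mind, not a working acquisition program. It assumes .NET Core 3.0 or later (for `System.Threading.Channels` and `await foreach`) and is written as a console program with top-level statements. `AcquireFrame` and `ProcessFrame` are placeholders I made up; they are not part of any real camera API, and the frame count and queue capacity are arbitrary.

```csharp
using System;
using System.Threading.Channels;
using System.Threading.Tasks;

const int Width = 1024;   // points per line (X)
const int Height = 1024;  // lines per frame (Y)
const int FrameCount = 5; // arbitrary number of frames for this demo

// Bounded queue between the two stages: if processing falls two frames
// behind, WriteAsync waits instead of letting memory grow without limit.
var queue = Channel.CreateBounded<byte[]>(2);

// Hypothetical stand-in for "trigger the camera and read 1024 lines".
byte[] AcquireFrame(int index)
{
    var frame = new byte[Width * Height];
    Array.Fill(frame, (byte)index); // fake 8-bit grayscale data
    return frame;
}

// Hypothetical stand-in for the per-frame processing: mean gray level.
double ProcessFrame(byte[] frame)
{
    long sum = 0;
    foreach (byte pixel in frame) sum += pixel;
    return (double)sum / frame.Length;
}

// Producer: acquires frames on a thread-pool thread and queues them.
Task producer = Task.Run(async () =>
{
    for (int i = 0; i < FrameCount; i++)
        await queue.Writer.WriteAsync(AcquireFrame(i));
    queue.Writer.Complete(); // signal that no more frames will arrive
});

// Consumer: processes queued frames while the producer acquires the next one.
int processed = 0;
Task consumer = Task.Run(async () =>
{
    await foreach (byte[] frame in queue.Reader.ReadAllAsync())
    {
        double mean = ProcessFrame(frame);
        processed++;
        Console.WriteLine($"frame {processed}: mean gray level {mean:F1}");
    }
});

await Task.WhenAll(producer, consumer);
Console.WriteLine($"processed {processed} frames");
```

In a GUI the consumer would presumably have to hand each finished frame back to the UI thread for plotting (for example through `IProgress<T>`), which this sketch leaves out. Is this roughly the right structure, or is there a better one?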