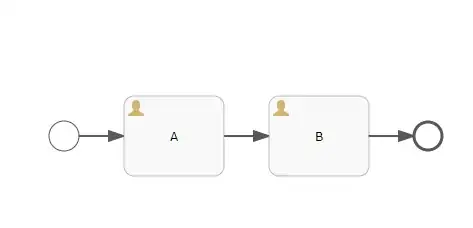

Your diagram does not show that such an event can happen, nor what the consequences are: for example, go back to (A)? Cancel the workflow?

There are a number of cases where such unexpected changes do not matter. For example:

- When one actor changes some data (A) and a second actor verifies the validity of the changed data against an independent source (B). In this case, (A) would flag the data as unreliable. Someone else could also change the data: this would not alter the fact that the data is unreliable and that the verification step has to happen to get back to a “clean/verified” state. A possible implementation of this scenario is plain transactional consistency, making sure that any invocation of (B) finishes if the state of the data is OK.

- When it is assumed that every (A) triggers its own (B), so that if several independent (A) changes happen at the same time for the same data record, each triggers an independent (B). In fact, this is the scenario I’d assume when reading your diagram. A possible implementation is event sourcing with an event store. Every change of the data would lead to a separate event, and the event store would not store the latest status, but just the changes to the attributes of the state. This is more robust than the previous scenario, since you are free to design your event processing as suits your needs (e.g. ignore an event if later events make it obsolete).
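To make the event-sourcing scenario more concrete, here is a minimal in-memory sketch. All names (`ChangeEvent`, `EventStore`, `verify_pending`) are hypothetical, and the rule used to decide that an event is obsolete (every attribute it changed is overwritten by later events) is only one possible policy, not something your diagram prescribes.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class ChangeEvent:
    event_id: int            # chronological position in the store
    record_id: str
    changes: Dict[str, Any]  # only the attributes that changed


@dataclass
class EventStore:
    events: List[ChangeEvent] = field(default_factory=list)

    def append(self, record_id: str, changes: Dict[str, Any]) -> ChangeEvent:
        # Every (A) appends a new event; nothing is overwritten.
        event = ChangeEvent(len(self.events) + 1, record_id, dict(changes))
        self.events.append(event)
        return event

    def state_of(self, record_id: str) -> Dict[str, Any]:
        # The current state is derived by replaying the changes in order.
        state: Dict[str, Any] = {}
        for event in self.events:
            if event.record_id == record_id:
                state.update(event.changes)
        return state

    def is_obsolete(self, event: ChangeEvent) -> bool:
        # Assumed policy: an event is obsolete when later events on the
        # same record overwrite every attribute it changed.
        later = set()
        for other in self.events:
            if other.record_id == event.record_id and other.event_id > event.event_id:
                later.update(other.changes)
        return set(event.changes) <= later


def verify_pending(store: EventStore) -> List[int]:
    # Step (B): one verification per (A) event, skipping obsolete ones.
    return [e.event_id for e in store.events if not store.is_obsolete(e)]


store = EventStore()
store.append("r1", {"price": 10})
store.append("r1", {"stock": 5})
store.append("r1", {"price": 12})  # makes the first event obsolete
print(store.state_of("r1"))
print(verify_pending(store))
```

Because the store keeps every change, the decision to skip, merge or re-verify events stays in the processing code and can evolve without touching the stored data.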

If your architecture processes data records instead of events, you should disambiguate your diagram to show that extra consistency checking is needed or performed. You could, for example, add an event on (B) (an error event?) and clarify where such a situation would lead.

The underlying implementation could for example:

- just verify consistency of data, independently of any processing or chronology (if needs are simple and worries are only about consistency)

- use object versioning or time-stamping as suggested by Alexandr

- use an event id. With a saga pattern you’d use an event queue (or an event table). You could manage the chronology between the respective (A) and (B) by keeping, in every record, the id of the latest event processed. If events are numbered chronologically, this would be very similar to versioning.
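The versioning and event-id options above could look roughly like the following sketch. The names `Record`, `step_a`, `step_b` and `StaleRecordError` are hypothetical, and the `version` counter stands in for either an object version or the id of the latest event processed; what the workflow does on a mismatch (go back to (A), cancel) remains your design decision.

```python
from dataclasses import dataclass
from typing import Any, Dict


class StaleRecordError(Exception):
    """Raised when the record changed between (A) and (B)."""


@dataclass
class Record:
    data: Dict[str, Any]
    version: int = 0  # could equally be the id of the last processed event


def step_a(record: Record, changes: Dict[str, Any]) -> int:
    # (A): apply the change and bump the version; return the version
    # that the matching (B) is expected to verify.
    record.data.update(changes)
    record.version += 1
    return record.version


def step_b(record: Record, expected_version: int) -> str:
    # (B): refuse to verify if someone else changed the record meanwhile.
    if record.version != expected_version:
        raise StaleRecordError(
            f"expected version {expected_version}, found {record.version}"
        )
    return "verified"


record = Record(data={"price": 10})
v1 = step_a(record, {"price": 12})
print(step_b(record, v1))          # no concurrent change

v2 = step_a(record, {"price": 15})
step_a(record, {"stock": 3})       # unexpected change by another actor
try:
    step_b(record, v2)
except StaleRecordError as err:
    print("error event:", err)     # the workflow decides: back to (A) or cancel
```

In a real system the check in `step_b` and the write that follows it would have to run in the same transaction, otherwise a change could still slip in between the two.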

Conclusion: Behind each of these scenarios and solutions, there are very different needs (consistency? sequential processing? latest processing only?) and very different architectures (event processing or not, event store, etc.). You therefore need to clarify the needs and context much more in order to choose the most suitable approach.