In short

Yes, early crash is an advisable approach also for GUI, but at the same time, the robustness expectations being higher, one shall seek to minimize as much as psosible the risk of reaching an inconsistent state.

Some more arguments

Early crash in a GUI

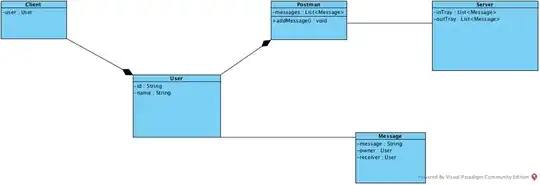

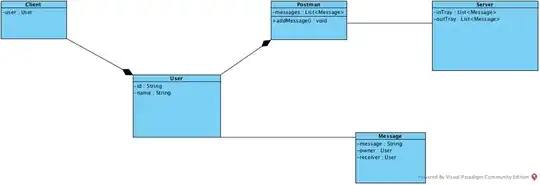

A GUI has the goal to make user’s life easy and the system understandable. When crashing, a GUI is no longer graphical and the user is lost. It failed its prime purpose. Here, for example, is an inviting cafeteria screen at an airport:

Nevertheless, if the system detects that it can no longer guarantee reliable operations (e.g. unexpected inconsistency, resources exhausted, etc...), and it can do nothing to revert to a safe situation, the best thing is indeed to terminate in a way to limit damage as much as possible. It's not only an advice of Andy Hunt’s pragmatic programmer, it's also an ethical principle: AVOID HARM.

Is it the only way?

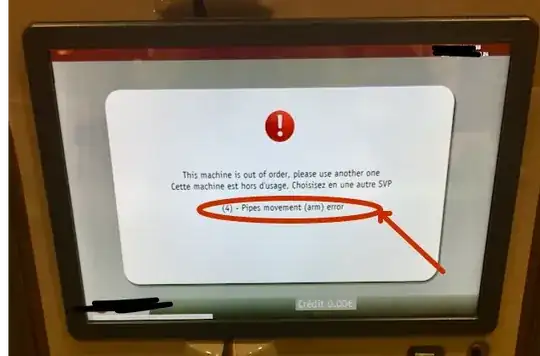

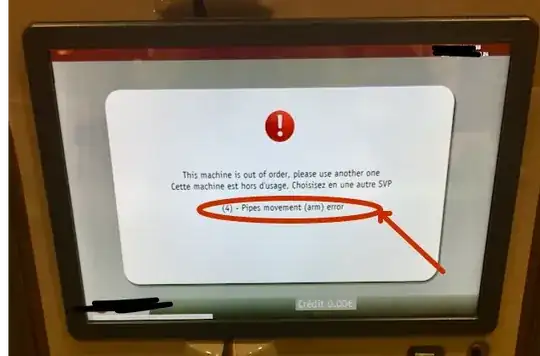

Before jumping prematurely to an easy early-crash solution, ask yourself critically how the detected inconsistency could be avoided in the first place, and if it is unavoidable how it can be recovered from. Here a more friendly recoverable action:

Instead of finding out that the pipe arm is not where it is supposed to be, it shows a useful error in a user friendly way. It does not crash. Depending on its design, it could retry, clean the current state and restart the arm control subsystem, or just give further instruction when the technician opens the device.

More robust systems

My point here is that the "crash early" is still valid, but it should not be considered in isolation, without at the same time considering making the system more robust.

How many so called "internal errors" are just bugs or consequences of poor practice: nowadays hackers still exploit buffer overflows, because it was forgotten to sanitize the input? Segmentation faults still happen because someone assumed memory allocation always works. Divide by zero still crashes because that defect sensor returned 0 Kelvins (that's -273°C: couldn't someone have checked that the parameter is in an acceptable range?).

Moreover, there are quite some errors that can be recovered: a function may raise an exception that is caught to limit damage; a module, a thread, a process, a subsystem may be killed/reinitialized/restarted. The system can even diagnose itself looking at performance stats to inform user preventively that the system is under unusually high load.

And there are systems that are not allowed to fail. In the 20th century, Margaret Hamilton saved the Apollo team by designing a priority-based scheduler that could cope with (unanticipated) capacity overload. If she had just written a crash early, some astronauts would not have made it back to Earth.

We are in the 21st century: every smartphone has 100 times the processing power of an Apollo space computer. A lot can be done to prevent a crash in the first instance.

Conclusion

So yes, consider the crash-early advice of the pragmatic programmer as valid. But please, consider in the same time at least some elementary defensive programming practices such as verifying parameters and verifying error status after a call. And maybe the higher robustness will require revising the architecture or some additional design, but your users will definitively value it.