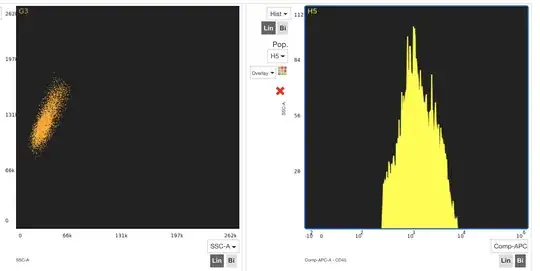

A few years ago, I wrote an application that allowed users to upload a file (it's a very specific file type) to a server. It then sends instructions to the server on how to visualise the data, and the server presents the data as either a dot plot graph, or a histogram graph. The PNG is constructed on the server side, and is streamed back to the user. Here's an example of 2 graphs produced by my application:

The technology I used was NodeJS and MongoDB. I'm now revisiting the application as I've received many requests from users to add new features, and some complaints about how slow it is to use.

There are a few issues with the stack. When a user wants to get a graph, a HTTP GET request is made to the server. Within that GET request, there is a lot of heavy computational work. The data is looped through, and the graph is constructed by figuring out the position of each data point on the graph. The most computationally heavy part is figuring out the position on the data-point on the graph. I won't go into it here, but each data point is run through a long formula to figure out the correct position (and for some files there can be over 1,000,000 data points). While all of this is happening (within the GET request), because I'm using Node, all other requests are now blocked (so my application can only handle one request at a time).

I'm looking for suggestions on how to re-architecture it to handle multiple requests concurrently and to draw the graphs quicker (presumably this is all about increasing CPU power). One high-level approach I'm thinking of is:

- User makes a request for a graph

- Node takes the request and notifies an AWS Lambda function (and HTTP request ends here) which does all the heavy computation work and produces a PNG

- PNG is streamed back to the user (this I'm not sure how to do).

All of this has to happen within a few seconds - the user is waiting for a graph to appear. I'm not sure if my suggested approach would be very user friendly as AWS Lambda needs time to start-up, and the user may be waiting around a long time for the graph.

Any suggestions would be greatly appreciated.