Load balancing in is a common topic, but there isn't as much said about load balancing out. I may have an application that can prepare 1mm requests/s but is unable to send them all out at that speed because of network restraints.

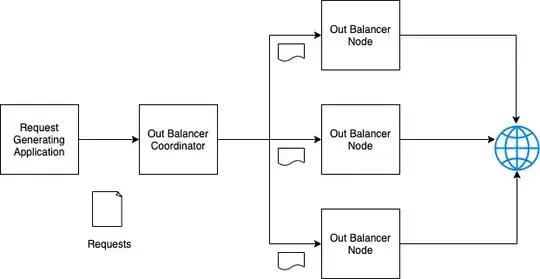

Is there a concept of an out-balancer? Are there any ready-made solutions out there that can take, say, a csv of 1mm requests and distribute them across nodes and send them?

The "Out Balancer Coordinator" could just be a queue. But that's getting into DIY territory where I'm wondering if something like this already exists.

Edit:

I see a lot of answers that describe queuing and processing pools. We do use queues and processing pools. To increase output we add more nodes to the processing pool. In that way we can scale up and down dynamically (albeit too slow for what we need) to meet throughput needs.

My question must not make much sense, and that's partly what I was wondering- if it made sense.

We use load balancers for traffic coming into the system, and with it don't need to worry about TLS termination, and many millions of connections can be consolidated into tens of thousands- allowing downstream servers to handle requests much quicker with less overhead.

I was looking for a similar service for traffic going out. I'd like to write applications that only need to generate requests, and let an "out-balancer" handle sending those requests as efficiently as possible (TLS and all). Instead of having 100 servers in-the-ready to ensure there is enough compute to make 1mm https requests per second, I could reduce that to 5-10.

Ideally, this "out-balancer" is a managed service somewhere else where I could pay for requests/s and wouldn't need to pay for an always-on server.

It is both a conceptual question as well as a real world question.