These are using these terms in a slightly different way, but they are trying to convey similar underlying ideas.

Here's a quote from the book you referenced (emphasis mine):

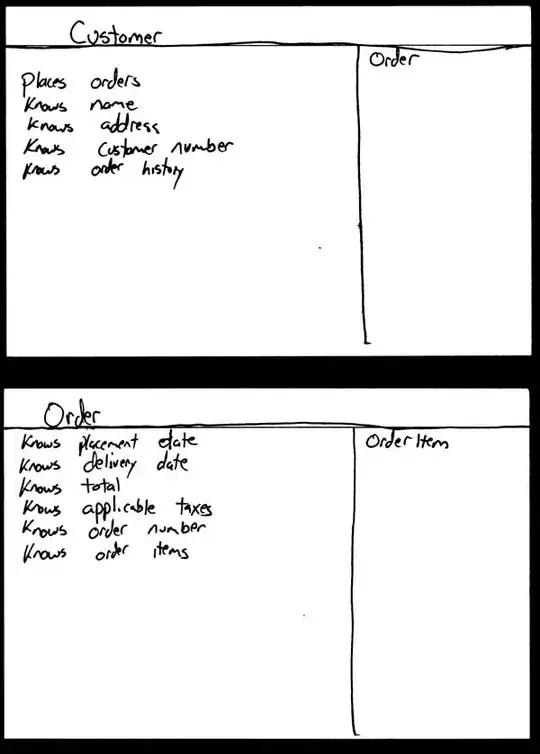

Going back to the ShoppingBasket example, you would expect this class

to have responsibilities such as

- add item to basket;

- remove item from basket;

- show items in basket.

This is a cohesive set of responsibilities [...]. It is cohesive

because all the responsibilities are working toward the same goal

[...]. In fact, we could summarize these three responsibilities as a

very high-level responsibility called "maintain basket".

Now, you could also add [... some other ...] responsibilities to the

ShoppingBasket [...]. But these responsibilities do not seem to fit

with the intent or intuitive semantics of shopping baskets. [...] It

is important to distribute responsibilities appropriately over

analysis classes to maximize cohesion within each class.

Finally, good classes have the minimum amount of coupling to other

classes.

They are essentially talking about achieving high cohesion and low coupling - the goal is for typical changes to be localized and low impact (e.g. in a single class, rather then spread out throughout the codebase, without cascading changes to seemingly unrelated code).

So they are saying that each class should have one "high level responsibility".

SRP captures a very similar set of ideas:

- Parnas - separate based on the kind of changes that are likely

- Dijkstra - the idea of separation of concerns

- the notions of coupling and

cohesion

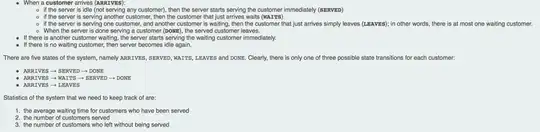

SRP originally stated that a software module should have one responsibility; Bob Martin later decided to clarify "responsibility" as "reason to change".

As with all the principles, their single-sentence statements are really a neat way to remember them and talk about them; but to understand them, you have to go beyond that and dig a bit deeper into the underlying ideas.

The primary point of software design and its principles is to have some control over complexity that arises over time due to change. If the code never changed, design wouldn't matter all that much.

In any domain, there are things that cause change. E.g. a business for which the software is being made might need certain features; but businesses have their own way of doing things, and they want the software to support that. One set of users might need one set of features, and another set of users (maybe from another department) might need a different set of features. They might have certain business processes already in place, and they might want the software to automate some parts of that - and if the developer team didn't spend enough time to understand those processes (how they do things) and their business (why they do things, how they think about concepts) the software is going to be conceptualized and structured/developed in a way that makes it hard to make the kinds of changes that they'll need - because the code will be based on the wrong set of abstractions (these are hard to change), and it will therefore be coupled in the wrong kinds of ways. You can't have code that supports all imaginable kinds of changes. You make it support certain kinds of changes by making it rigid for other kinds. Design is about tradeoffs. The trick is to be conscious/intentional about this.

You can think about these factors that drive the direction of change as the "forces of change", or "axes of change". These are often driven by people, organizations, their policies and politics, etc. That's where and how humans come into the picture. To quote Martin's article: "who must the design of the program respond to?"

The idea is, initially, to understand the domain enough to recognize some of these axes of change, and design around that. This will in part be based on domain analysis, in part on experience. It is important, however, not to over-engineer at this point: don't consciously make assumptions about domain aspects you don't yet have a good understanding of.

But it doesn't stop there. Since agile development is an iterative process, you'll be learning about the domain and the system as you go along. You'll discover that there are axes of change you didn't account for, or that some of the ones you anticipated are not so important because they rarely cause change in practice. Or that you've made some wrong assumptions, and that they now give you trouble. You have to decide when, where and how to restructure your code based on this new knowledge - in a "wait for the last responsible moment" fashion (before the coupling and complexity goes out of control). This is why we have heuristics like YAGNI and "wait for three examples of something before you abstract it or DRY it up". Doing this is more important in those parts of the codebase that have proven to change the most over time, in other parts less so.

To quote Bob Martin again (emphasis mine):

When you write a software module, you want to make sure that when

changes are requested, those changes can only originate from a single

person, or rather, a single tightly coupled group of people

representing a single narrowly defined business function. You want to

isolate your modules from the complexities of the organization as a

whole, and design your systems such that each module is responsible

(responds to) the needs of just that one business function.

This is why "responsibility" in SRP has to be defined in a somewhat abstract way - you have to discover how to partition the code into classes (or other kinds of components) for the specific application you are developing.