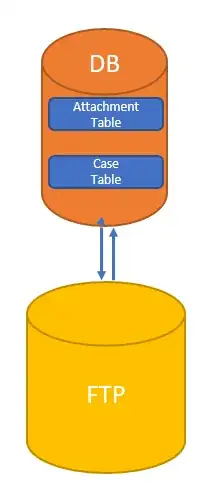

We are building a new application for a client to manage their cases. Their existing system stores the files associated with each case in an FTP folder. An attachment table (~1M rows) maps each case to its FTP file location. As part of the migration, the client also wants to move from FTP to a cloud storage provider (Azure). There is currently roughly 1 TB of files in the FTP folder that we need to move to Azure.

Current architecture:

The FTP server has no folder structure: files are simply dumped there and each link is stored in the attachment table. In Azure, however, we need to create a folder structure, so we cannot simply copy the files over as they are.
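To make the mapping from the flat FTP layout to a per-case layout concrete, here is a minimal sketch. It assumes the target is Azure Blob Storage, where folder-like paths are expressed as `/`-separated prefixes in the blob name. The `cases/<caseId>/<fileName>` layout and the function name `buildBlobPath` are hypothetical, chosen only for illustration.

```javascript
// Hypothetical target layout: cases/<caseId>/<original file name>.
function buildBlobPath(caseId, ftpLink) {
  // Keep only the last path segment of the stored FTP link as the file name.
  const fileName = ftpLink.split("/").filter(Boolean).pop();
  if (!fileName) {
    throw new Error(`No file name found in link: ${ftpLink}`);
  }
  return `cases/${caseId}/${fileName}`;
}
```

Since the FTP folder is flat, file names should already be unique there, so they should not collide inside a single case folder either.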

There are a couple of approaches:

Option 1:

- Write a Node.js script that reads the case table and, for one case, gets all the associated rows from the attachment table.

- Get the links from the attachment table.

- Open an FTP connection and download the actual files using the links fetched in the previous step.

- Generate the folder structure on the local system.

- Once all the files are retrieved, push them to Azure.

- Delete the local folder structure.

- Repeat the steps for the next Case.
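The Option 1 steps could be sketched roughly as follows. This is only an outline under assumptions: `getAttachmentLinks`, `downloadFromFtp`, and `uploadToAzure` are hypothetical helpers that would wrap whatever database, FTP, and Azure clients are actually used, and the `cases/<caseId>/` target prefix is made up for illustration.

```javascript
const fs = require("node:fs/promises");
const os = require("node:os");
const path = require("node:path");

// Migrates one case: stage its files locally, upload them, then clean up.
// `deps` carries the hypothetical helpers described above.
async function migrateCase(caseId, deps) {
  const { getAttachmentLinks, downloadFromFtp, uploadToAzure } = deps;

  // Steps 1-2: read the attachment links for this case.
  const links = await getAttachmentLinks(caseId);

  // Step 4: create a temporary local folder for the case.
  const caseDir = await fs.mkdtemp(path.join(os.tmpdir(), `case-${caseId}-`));
  try {
    // Step 3: download every file for the case into that folder.
    for (const link of links) {
      const localPath = path.join(caseDir, path.posix.basename(link));
      await downloadFromFtp(link, localPath);
    }
    // Step 5: once everything is retrieved, push the files to Azure.
    for (const name of await fs.readdir(caseDir)) {
      await uploadToAzure(path.join(caseDir, name), `cases/${caseId}/${name}`);
    }
  } finally {
    // Step 6: delete the local folder, even if a step above failed.
    await fs.rm(caseDir, { recursive: true, force: true });
  }
  return links.length;
}

// Step 7: repeat for the next case, one at a time.
async function migrateAll(caseIds, deps) {
  for (const caseId of caseIds) {
    await migrateCase(caseId, deps);
  }
}
```

With this shape, local disk usage at any moment is bounded by the size of the single case being processed.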

Option 2:

- In this option we run through the same steps as in Option 1 up to step 5.

- However, instead of deleting the folder structure, we build the folder structure for all the cases on the local machine.

- Upload all the files to Azure at once.

What would be the best approach here? Are there any other approaches apart from the ones above?

Also, Option 1 could be run in parallel (multiple cases at once). What would the limitations of this be? Option 2 would require at least 1.2 TB of local disk space, which is hard to get given the company's current logistical limitations.
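For the parallel variant of Option 1, one way to keep the number of in-flight cases (and with it the peak local disk usage and the number of open FTP connections) bounded is a small fixed-size worker pool. The sketch below is generic; `runWithLimit` is a hypothetical helper name, and the `worker` callback would be whatever function migrates a single case.

```javascript
// Runs `worker` over `items` with at most `limit` tasks in flight at once.
async function runWithLimit(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0;

  // Each lane repeatedly takes the next unclaimed item until none are left.
  async function lane() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index]);
    }
  }

  const laneCount = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}
```

For example, `runWithLimit(caseIds, 4, migrateOneCase)` would process four cases at a time, where `migrateOneCase` stands in for the per-case routine. Note that in this sketch a failure in one worker rejects the overall promise while the other lanes keep running, so a real script would likely want to catch and log errors per case.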