Designing real-time rendering pipelines is one of the most difficult things I've encountered in my career (and I had the added requirement that users be able to program their own rendering passes and shaders). The implementation wasn't the hardest part. Balancing the pros and cons of every possible design decision was, because no rendering design discovered or published so far in real-time rendering is without huge, glaring cons: the difficulty of handling transparency with deferred rendering, for example, or the multiplied complexity of combining forward and deferred rendering. I couldn't even find the equivalent of a one-size-fits-all oversized t-shirt that sort of fits me (and I was willing to compromise a lot on fit) without it coming with a unicorn logo and flowers and glitter all over it.

I wanted to start with that as a primer/caveat, if only as consolation, because if you're creating your own engine you're heading into very difficult design territory regardless of the language, architecture, or paradigm you use. As your engine grows more elaborate, to the point of wanting soft shadows, DOF, area lights, indirect lighting, diffuse reflections, etc., the design decisions become increasingly difficult. Sometimes you have to grit your teeth, pick one, and make the best of it, rather than be paralyzed reading endless papers and articles from experienced devs who all have different ideas about which path to take. I ended up going with a deferred pipeline using stochastic transparency and a DSEL as the shading language (somewhat similar to Shadertoy's approach from Inigo Quilez, though we worked on these things independently and before either of our products existed). It lets users and internal devs program their own deferred rendering/shading passes, and it later turned into a visual, node-based programming language not unlike Unreal's Blueprints. Now I'm already rethinking it all for voxel cone tracing, and I suspect each generation of AAA game engines was prompted mainly by the desire to change the fundamental structure of the rendering pipeline.

Anyway, with that aside, there are a couple of things I suspect could help with your immediate design problem, though they might require substantially rethinking some things. I don't mean to come off as dogmatic; I just lack the expertise to compare every possible solution, though I did try some of the alternatives originally. So here goes:

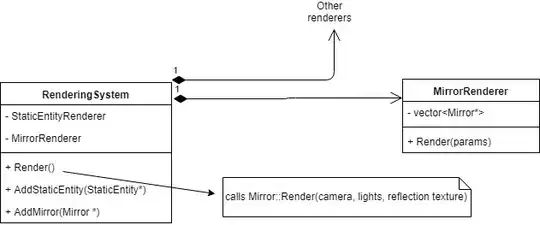

1. Don't tell the renderers what to render, especially in an ECS.

Let the renderers traverse the scene (or a spatial index, if one is available) and figure out what to render based on what they're supposed to render and what the scene contains. This should eliminate some of the concern about how to pass the geometry (e.g. planes) you need to render for a forward reflection pass. If the planes already exist in the scene in some form, maybe just as triangles with a material marked as reflective, the renderer can query the scene, discover those reflective planes, and render their reflections to an offscreen reflection texture/framebuffer. This does come with the con of coupling your renderer to your particular engine and scene representation (or at least its interface), but for real-time renderers I think this is often a practical necessity to get the most out of them. As a practical example, you can't really expect UE4 to render scene data from CryEngine 3, even with an adapter; real-time renderers are too difficult and too cutting-edge (lacking standardization) to generalize and decouple to that degree.
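
As a rough illustration of "let the renderer query the scene", here's a minimal C++ sketch. Everything in it (`Scene`, `Mesh`, `Material::reflective`, `ReflectionRenderer::gatherReflectors`) is a hypothetical stand-in, not any particular engine's API; a real scene would live in GPU buffers and be searched through a spatial index rather than a flat list, and the actual offscreen draw calls are omitted.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, minimal scene representation for illustration only.
struct Material {
    bool reflective = false;
};

struct Triangle {
    float v[3][3]; // three vertices, xyz each
};

struct Mesh {
    std::string name;
    Material material;
    std::vector<Triangle> triangles;
};

struct Scene {
    std::vector<Mesh> meshes;
};

// The renderer is not handed a list of planes; it discovers them itself by
// querying the scene for geometry whose material is marked reflective.
class ReflectionRenderer {
public:
    // Returns the meshes this pass would render reflections for into its
    // offscreen reflection target (draw calls omitted in this sketch).
    std::vector<const Mesh*> gatherReflectors(const Scene& scene) const {
        std::vector<const Mesh*> reflectors;
        for (const Mesh& mesh : scene.meshes) {
            if (mesh.material.reflective && !mesh.triangles.empty()) {
                reflectors.push_back(&mesh);
            }
        }
        return reflectors;
    }
};
```

The point of the design is that nothing outside `ReflectionRenderer` needs to know it wants planes; marking a material as reflective is enough for the pass to find it.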

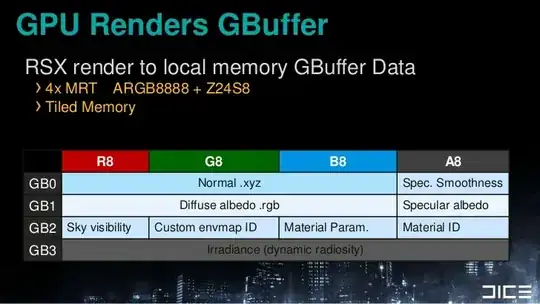

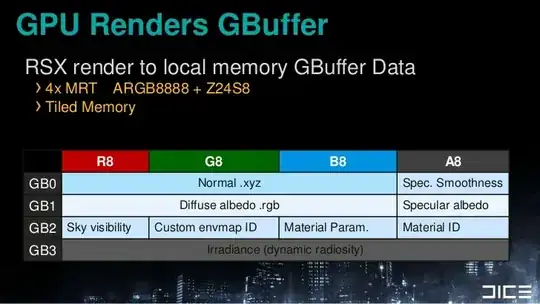

2. I think inevitably you have to hardcode the number of output buffers/textures you have for your particular engine and what they store. For deferred engines many devs go with a g-buffer representation like this:

Either way, it simplifies things tremendously to decide upfront what you need in a G-buffer or an analogous structure (it might not be a compacted G-buffer, just an array of textures of particular types to output/input, like a "Diffuse" texture vs. "Specular/Reflection" vs. "Shadows", or whatever your engine needs). Each renderer can then read from it and write whatever it produces, the next renderer can read some of that and write to other parts, and ultimately everything is composited into the final framebuffer shown to the user. This way, reflection (aka specular) texture data is always available for renderers to read and write: your reflection pass can simply output to the relevant texture(s), and subsequent renderers and passes can read them to render a combined result.
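
To make "decide the layout upfront" concrete, here's a minimal C++ sketch of a G-buffer whose slots are fixed once for the whole engine. The names (`GBufferSlot`, `GBuffer`, `TextureHandle`) are hypothetical, the slot list just mirrors the examples above, and the handles are plain integers standing in for whatever texture objects your graphics API provides.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical fixed layout, decided once for the whole engine. A real engine
// would also pick a texture format per slot (e.g. RGBA8 vs. RGBA16F).
enum class GBufferSlot : std::size_t {
    Diffuse = 0,
    Specular, // the reflection pass writes here; later passes read it
    Normal,
    Shadows,
    Count     // not a real slot, just the number of slots
};

constexpr std::size_t kSlotCount = static_cast<std::size_t>(GBufferSlot::Count);

// Stand-in for a GPU texture handle; 0 means "not allocated yet".
using TextureHandle = std::uint32_t;

struct GBuffer {
    std::array<TextureHandle, kSlotCount> textures{}; // zero-initialized

    TextureHandle& operator[](GBufferSlot slot) {
        const auto i = static_cast<std::size_t>(slot);
        assert(i < kSlotCount && "Count is not a real slot");
        return textures[i];
    }

    TextureHandle operator[](GBufferSlot slot) const {
        const auto i = static_cast<std::size_t>(slot);
        assert(i < kSlotCount && "Count is not a real slot");
        return textures[i];
    }
};
```

Because every pass indexes the same enum, a reflection pass and the composite pass agree on where reflection data lives without either one being told explicitly.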

Many of your current concerns seem to be about passing special-case data for some exceptional use case from one renderer to the next, or from the scene to a particular renderer. That becomes simple and straightforward if every renderer has upfront access to all the data it could ever need, instead of having to be handed the specific data it requires. Giving each renderer such wide access to disparate types of data does violate some sound engineering practices, but renderers' needs are so difficult to anticipate that you tend to save more time by giving them access to everything upfront and letting them read and write (writing only to the textures/G-buffer) whatever they need for their particular purpose. Otherwise, you may find yourself, as you are now, trying to figure out ways to pass all this data through the pipeline while constantly tempted to change things both inside and outside these renderers to give them the inputs they need and to feed their outputs to the next pass.
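
Here's a minimal C++ sketch of that "give every renderer everything" idea, assuming a simple ordered list of passes. All names (`FrameContext`, `RenderPass`, `DiffusePass`, `ReflectionPass`, `CompositePass`, `renderFrame`) are hypothetical, and the "textures" are CPU float arrays with placeholder values so the sketch runs without a GPU. Each pass receives the same context, with read-only access to the whole scene and read/write access to every shared target, so no pass needs bespoke inputs wired to it.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical shared render targets, fixed upfront.
enum Slot : std::size_t { Diffuse, Specular, Final, SlotCount };

struct Image {
    std::vector<float> pixels; // CPU stand-in for a GPU texture
};

struct Scene { /* meshes, lights, materials, spatial index ... */ };

// Every pass gets the same context: the whole scene (read-only) and every
// shared target (read/write).
struct FrameContext {
    const Scene& scene;
    std::array<Image, SlotCount>& targets;
};

class RenderPass {
public:
    virtual ~RenderPass() = default;
    virtual void execute(FrameContext& ctx) = 0;
};

class DiffusePass : public RenderPass {
public:
    void execute(FrameContext& ctx) override {
        for (float& p : ctx.targets[Diffuse].pixels) p = 0.5f; // placeholder shading
    }
};

class ReflectionPass : public RenderPass {
public:
    void execute(FrameContext& ctx) override {
        for (float& p : ctx.targets[Specular].pixels) p = 0.25f; // placeholder reflections
    }
};

// Reads what earlier passes wrote and combines it into the final output.
class CompositePass : public RenderPass {
public:
    void execute(FrameContext& ctx) override {
        std::vector<float>& out = ctx.targets[Final].pixels;
        const std::vector<float>& d = ctx.targets[Diffuse].pixels;
        const std::vector<float>& s = ctx.targets[Specular].pixels;
        assert(out.size() == d.size() && out.size() == s.size());
        for (std::size_t i = 0; i < out.size(); ++i) out[i] = d[i] + s[i];
    }
};

// Runs the passes in order; each one sees everything earlier passes wrote.
void renderFrame(const Scene& scene, std::array<Image, SlotCount>& targets,
                 const std::vector<std::unique_ptr<RenderPass>>& passes) {
    FrameContext ctx{scene, targets};
    for (const auto& pass : passes) pass->execute(ctx);
}
```

Adding a new pass here means appending it to the list; nothing upstream or downstream has to change its signature to feed it.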