In the past, our system had an external data provider (call it source) that sent regular heartbeats to a Java application (call it client). If the heartbeat failed, the system shut itself down to avoid serving stale data in a critical application. This was straightforward and highly reliable because data and heartbeat used the same channel: if the heartbeat stopped, the data had almost certainly stopped too.
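For reference, the original single-channel behaviour amounts to a watchdog on the client. The following is a minimal sketch, not our actual code: `HeartbeatWatchdog`, `onHeartbeat()` and the `onTimeout` hook are hypothetical names, and the clock is injected so the logic can be tested without waiting in real time.

```java
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.LongSupplier;

/** Hypothetical watchdog: fires onTimeout once if no heartbeat arrives within the timeout. */
final class HeartbeatWatchdog {
    private final long timeoutNanos;
    private final LongSupplier clock;          // e.g. System::nanoTime in production
    private final Runnable onTimeout;          // e.g. the application's shutdown hook
    private final AtomicLong lastBeatNanos;
    private final AtomicBoolean fired = new AtomicBoolean(false);

    HeartbeatWatchdog(long timeout, TimeUnit unit, LongSupplier clock, Runnable onTimeout) {
        this.timeoutNanos = unit.toNanos(timeout);
        this.clock = clock;
        this.onTimeout = onTimeout;
        // Start the grace period at construction time.
        this.lastBeatNanos = new AtomicLong(clock.getAsLong());
    }

    /** Called by whatever receives heartbeats from the source. */
    void onHeartbeat() {
        lastBeatNanos.set(clock.getAsLong());
    }

    /** True if more than the timeout has elapsed since the last heartbeat. */
    boolean isStale() {
        // Subtract before comparing so the check stays correct if nanoTime wraps.
        return clock.getAsLong() - lastBeatNanos.get() > timeoutNanos;
    }

    /** Runs onTimeout the first time staleness is detected; returns true only on that call. */
    boolean check() {
        if (isStale() && fired.compareAndSet(false, true)) {
            onTimeout.run();
            return true;
        }
        return false;
    }

    /** Schedules periodic checks; the boolean result of check() is discarded here. */
    void start(ScheduledExecutorService scheduler, long period, TimeUnit unit) {
        scheduler.scheduleAtFixedRate(this::check, period, period, unit);
    }
}
```

In production the clock would be `System::nanoTime` and `start(...)` would be given a scheduler such as one from `Executors.newSingleThreadScheduledExecutor()`.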

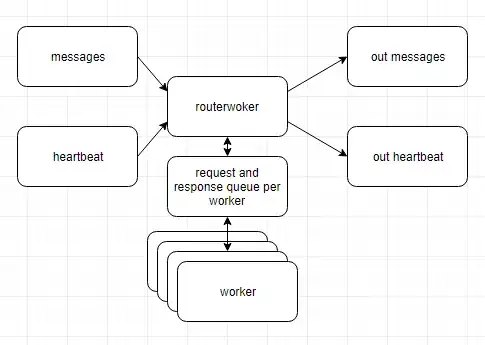

Since then, we have moved to a distributed system: the Java client has been broken down into several microservices, and data flows between those services partly through Kafka queues.

The important requirement: the most downstream system (call it destination) should still reliably receive a heartbeat.

If we keep sending the heartbeat via a separate channel, a failure in any one of the microservices or Kafka queues will disrupt the data flow to the destination while the heartbeat keeps arriving without interruption. That defeats the whole purpose of having a heartbeat.

One solution I am considering is to push heartbeats through all of the services and Kafka queues so that they take the same path as the data itself. More generally, what are the best patterns and design criteria for reimplementing a heartbeat in such a distributed system?
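A minimal sketch of the in-band idea, with Kafka abstracted away: each `Stage` stands in for a microservice, and handing a message from one stage to the next stands in for producing to and consuming from the queue between them. `Message`, `Data`, `Heartbeat`, `Stage`, `Destination` and the heartbeat fields are all hypothetical. The destination judges freshness by the heartbeat's source timestamp rather than its arrival time, so a service that is alive but falling behind also shows up as stale; this assumes source and destination clocks are reasonably synchronized (e.g. via NTP).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

/** Anything flowing through the pipeline: real data or an in-band heartbeat. */
interface Message {}

record Data(String payload) implements Message {}

/** Hypothetical heartbeat format: emitted by the source, stamped by every hop. */
record Heartbeat(String sourceId, long seq, long emittedAtMillis, List<String> hops) implements Message {
    Heartbeat withHop(String stage) {
        List<String> next = new ArrayList<>(hops);
        next.add(stage);
        return new Heartbeat(sourceId, seq, emittedAtMillis, List.copyOf(next));
    }
}

/** One microservice: transforms data and forwards heartbeats on the same output. */
final class Stage {
    private final String name;
    private final UnaryOperator<String> transform;

    Stage(String name, UnaryOperator<String> transform) {
        this.name = name;
        this.transform = transform;
    }

    Message process(Message in) {
        if (in instanceof Heartbeat hb) {
            return hb.withHop(name);           // never drop heartbeats, just record the hop
        }
        return new Data(transform.apply(((Data) in).payload()));
    }
}

/** Destination-side freshness check based on when the heartbeat left the source. */
final class Destination {
    private final long maxAgeMillis;
    private final List<String> received = new ArrayList<>();
    private Heartbeat last;                    // newest heartbeat seen so far

    Destination(long maxAgeMillis) { this.maxAgeMillis = maxAgeMillis; }

    void accept(Message m) {
        if (m instanceof Heartbeat hb) {
            if (last == null || hb.seq() > last.seq()) last = hb;
        } else {
            received.add(((Data) m).payload());
        }
    }

    /** Fresh only if a recently emitted heartbeat made it through every stage. */
    boolean isFresh(long nowMillis) {
        return last != null && nowMillis - last.emittedAtMillis() <= maxAgeMillis;
    }

    Heartbeat lastHeartbeat() { return last; }

    List<String> received() { return List.copyOf(received); }
}

final class Pipeline {
    /** Pushes one message through every stage in order, then to the destination. */
    static void deliver(Message m, List<Stage> stages, Destination dest) {
        Message current = m;
        for (Stage s : stages) {
            current = s.process(current);
        }
        dest.accept(current);
    }
}
```

One point carries over to real Kafka: ordering is guaranteed only within a partition, so a heartbeat only vouches for the data on the partition it travelled through. With several partitions, the source would presumably have to send a heartbeat to each partition, and the destination would treat the stream as fresh only when the latest heartbeat on every partition is fresh. The `hops` list is mainly a diagnostic aid, e.g. to confirm that heartbeats really traverse every service you expect.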