A common pattern for locating a bug follows this script:

- Observe weirdness, for example, no output or a hanging program.

- Locate relevant message in log or program output, for example, "Could not find Foo". (The following is only relevant if this is the path taken to locate the bug. If a stack trace or other debugging information is readily available that’s another story.)

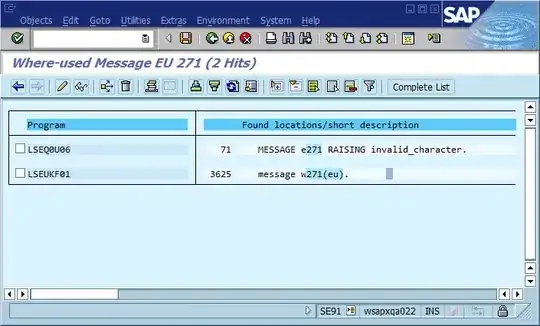

- Locate code where the message is printed.

- Debug the code between the first place Foo enters (or should enter) the picture and where the message is printed.

That third step is where the debugging process often grinds to a halt because there are many places in the code where "Could not find Foo" (or a templated string Could not find {name}) is printed. In fact, several times a spelling mistake helped me find the actual location much faster than I otherwise would - it made the message unique across the entire system and often across the world, resulting in a relevant search engine hit immediately.

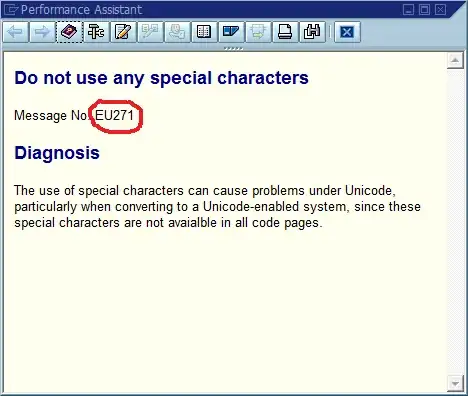

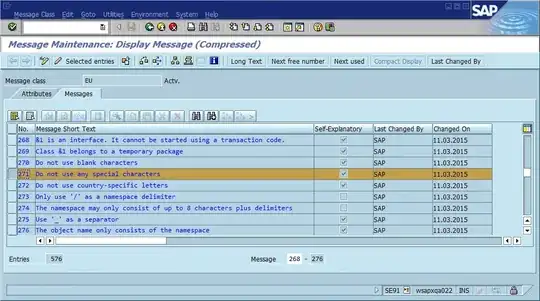

The obvious conclusion from this is that we should use globally unique message IDs in the code, hard coding it as part of the message string, and possibly verifying that there’s only one occurrence of each ID in the code base. In terms of maintainability, what does this community think are the most important pros and cons of this approach, and how would you implement this or otherwise ensure that implementing it never becomes necessary (assuming that the software will always have bugs)?