I am new to AWS. I have learned Spark with Scala and developed my code in it.

My application basically merges two files in Spark and creates the final output.

I read both files (the MAIN files and the INCR files) in Spark from an S3 bucket.
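For reference, here is a minimal in-memory sketch of the kind of merge rule involved. It assumes each line is a `key|value` record and that an INCR record replaces the MAIN record with the same key. Neither the file layout nor the merge rule is shown in this question, so both are assumptions. In Spark the same rule would typically be expressed as a join, or as a union followed by de-duplication on the key.

```scala
// Assumption: records are "key|value" lines; INCR rows override MAIN rows with the same key.
object MergeSketch {
  final case class Record(key: String, value: String)

  // Parse "key|value" lines; lines without a '|' separator are skipped.
  def parse(lines: Seq[String]): Seq[Record] =
    lines.flatMap { line =>
      line.split("\\|", 2) match {
        case Array(k, v) => Some(Record(k, v))
        case _           => None
      }
    }

  // Upsert: fold MAIN first, then INCR, so a later record with the same key wins.
  def merge(main: Seq[Record], incr: Seq[Record]): Seq[Record] = {
    val byKey = (main ++ incr).foldLeft(Map.empty[String, Record]) { (acc, r) =>
      acc.updated(r.key, r)
    }
    byKey.values.toSeq.sortBy(_.key)
  }

  def main(args: Array[String]): Unit = {
    val mainFile = Seq("1|apple", "2|banana", "3|cherry")
    val incrFile = Seq("2|blueberry", "4|date")
    merge(parse(mainFile), parse(incrFile)).foreach(r => println(s"${r.key}|${r.value}"))
  }
}
```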

Everything is working fine and I am getting the correct output. But I don't know how to automate the whole process so I can put it into production.

Here are the steps I currently follow to get the output:

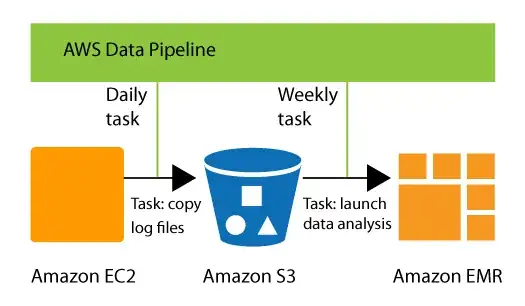

STEP 1: Loading the MAIN files (5K text files). I read the files from FTP on an EC2 instance and then upload them to the S3 bucket.

STEP 2: Loading the INCR (incremental) files the same way as the MAIN files.
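Steps 1 and 2 could be scripted on the EC2 instance and scheduled (for example with cron) to run after the FTP download has placed the files on local disk. This is a minimal sketch that assumes the AWS CLI is installed and configured on the instance. The local folders, bucket name and S3 prefixes are hypothetical placeholders. `aws s3 sync` only copies files that are new or changed, so rerunning it does little extra work.

```scala
import scala.sys.process._

object UploadToS3 {
  // Build the AWS CLI call; `aws s3 sync` uploads only new or changed files.
  def syncCommand(localDir: String, bucket: String, prefix: String): Seq[String] =
    Seq("aws", "s3", "sync", localDir, s"s3://$bucket/$prefix/")

  def main(args: Array[String]): Unit = {
    // Hypothetical local folders where the FTP download lands, and a placeholder bucket.
    val uploads = Seq(
      syncCommand("/data/ftp/main", "my-bucket", "input/main"),
      syncCommand("/data/ftp/incr", "my-bucket", "input/incr"))

    uploads.foreach { cmd =>
      val exit = Process(cmd).! // runs the CLI and returns its exit code
      if (exit != 0) sys.error(s"Upload failed (exit $exit): ${cmd.mkString(" ")}")
    }
  }
}
```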

STEP 3: Creating an EMR cluster manually from the UI.

STEP 4: Opening a Zeppelin notebook, copy-pasting the Spark-Scala script, and running it.
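Steps 3 and 4 could be combined into one command. The idea is to launch a transient EMR cluster that runs the job as a Spark step and terminates when the step finishes. This is a minimal sketch with several assumptions. The Zeppelin script is packaged into a jar with a main class (`com.example.MergeJob`, hypothetical) that takes the MAIN, INCR and output paths as arguments. The default EMR roles already exist (`aws emr create-default-roles`). The bucket, jar path, instance type, instance count and release label are placeholders to adjust.

```scala
import scala.sys.process._

object LaunchEmrJob {
  // Bucket, jar key, main class and release label are placeholders, not known values.
  def createClusterCommand(
      bucket: String,
      jarKey: String,
      mainClass: String,
      releaseLabel: String = "emr-6.15.0"): Seq[String] = {
    // Arguments handed to spark-submit by the Spark step: class, jar, then the job's own args.
    val stepArgs = Seq(
      "--class", mainClass,
      s"s3://$bucket/$jarKey",
      s"s3://$bucket/input/main/",
      s"s3://$bucket/input/incr/",
      s"s3://$bucket/output/")
    val step =
      s"Type=Spark,Name=MergeJob,ActionOnFailure=TERMINATE_CLUSTER,Args=[${stepArgs.mkString(",")}]"

    Seq(
      "aws", "emr", "create-cluster",
      "--name", "merge-main-incr",
      "--release-label", releaseLabel,
      "--applications", "Name=Spark",
      "--instance-type", "m5.xlarge",
      "--instance-count", "3",
      "--use-default-roles",
      "--log-uri", s"s3://$bucket/emr-logs/",
      "--steps", step,
      "--auto-terminate") // shut the cluster down once the step finishes
  }

  def main(args: Array[String]): Unit = {
    val cmd = createClusterCommand("my-bucket", "jars/merge-job.jar", "com.example.MergeJob")
    val exit = Process(cmd).! // the CLI prints the new cluster id on success
    if (exit != 0) sys.error(s"create-cluster failed with exit code $exit")
  }
}
```

Because each run creates and tears down its own cluster, nothing has to stay running between loads.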

STEP 5: Creating an EC2 instance again to read the S3 bucket and send the output files from S3 to the FTP client.
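Step 5 could run on the same EC2 instance as steps 1 and 2. This is a minimal sketch with several assumptions. The AWS CLI is configured on the instance. The FTP server accepts plain FTP uploads. Credentials come from the hypothetical environment variables `FTP_HOST`, `FTP_USER` and `FTP_PASSWORD`. Instead of a dedicated FTP library, the sketch uses the JDK's built-in `ftp://` URL handler. The bucket, output prefix, local folder and remote folder `outgoing` are placeholders.

```scala
import java.net.URI
import java.nio.file.{Files, Path, Paths}
import scala.sys.process._
import scala.util.Using

object PushOutputToFtp {
  // Download the job output from S3 to local disk.
  def downloadCommand(bucket: String, prefix: String, localDir: String): Seq[String] =
    Seq("aws", "s3", "sync", s"s3://$bucket/$prefix/", localDir)

  // ftp:// URL for the JDK's FTP handler; ";type=i" requests binary mode.
  // Assumes user and password contain no URL-reserved characters such as '@' or ':'.
  def ftpUrl(host: String, user: String, password: String, remoteDir: String, fileName: String): String =
    s"ftp://$user:$password@$host/$remoteDir/$fileName;type=i"

  // Upload one local file by writing to the FTP URLConnection's output stream.
  def upload(local: Path, url: String): Unit = {
    val conn = URI.create(url).toURL.openConnection()
    conn.setDoOutput(true)
    Using.resource(conn.getOutputStream)(out => Files.copy(local, out))
  }

  def main(args: Array[String]): Unit = {
    val localDir = "/data/out" // hypothetical local staging folder
    val exit = Process(downloadCommand("my-bucket", "output", localDir)).!
    if (exit != 0) sys.error(s"S3 download failed with exit code $exit")

    val host = sys.env("FTP_HOST")
    val user = sys.env("FTP_USER")
    val pass = sys.env("FTP_PASSWORD")
    Using.resource(Files.list(Paths.get(localDir))) { files =>
      files
        // Skip directories and Spark marker files such as _SUCCESS.
        .filter((p: Path) => Files.isRegularFile(p) && !p.getFileName.toString.startsWith("_"))
        .forEach((p: Path) => upload(p, ftpUrl(host, user, pass, "outgoing", p.getFileName.toString)))
    }
  }
}
```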

I am using EC2 because in my case I don't have a direct connection from S3 to the FTP server. We are in the process of getting AWS Direct Connect.

Please advise me on the best way to automate this process.