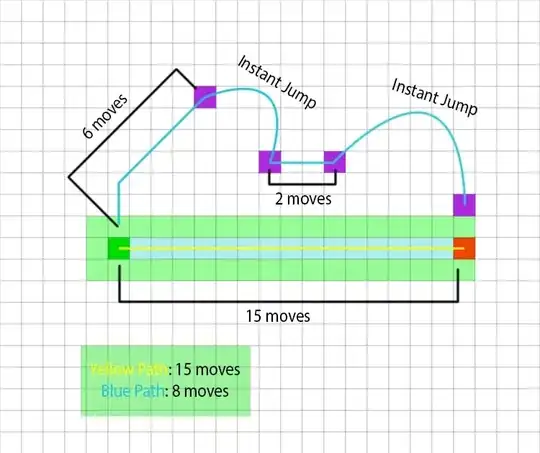

This is an example of what I want to do in code. I know you can use jump point search, or even A*, to get from the green node to the red node with no problems. But how do you calculate this with warps?
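If you stick with A*, the heuristic is the part that warps break. Plain Manhattan distance can overestimate the remaining cost once a warp offers a shortcut, and A* with an overestimating heuristic may return a longer path. Below is a minimal sketch under these assumptions: a 4-connected grid given as a list of strings (`#` = blocked), every step costs 1, and `warps` is a dict from an entrance cell to the cells it leads to, each taken at 0 cost. The function names and input format are hypothetical. The heuristic takes the smaller of the direct Manhattan distance and "walk to the nearest entrance, then walk from the exit closest to the goal". Any route that warps has to do both of those walks, so this never overestimates.

```python
import heapq


def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def astar_with_warps(grid, start, goal, warps):
    """A* on a 4-connected grid; warps[cell] lists cells reachable from cell at cost 0.

    Returns the fewest moves from start to goal, or None if the goal is unreachable.
    """
    entrances = list(warps)
    exits = [dst for dsts in warps.values() for dst in dsts]
    # Cheapest possible final walk after leaving any warp.
    best_exit = min((manhattan(x, goal) for x in exits), default=None)

    def h(cell):
        direct = manhattan(cell, goal)
        if best_exit is None:
            return direct
        # A warping route must first walk to some entrance and finally walk
        # from some exit, so this sum never overestimates the remaining cost.
        via_warp = min(manhattan(cell, e) for e in entrances) + best_exit
        return min(direct, via_warp)

    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    heap = [(h(start), 0, start)]
    while heap:
        _, g, cell = heapq.heappop(heap)
        if cell == goal:
            return g
        if g > dist[cell]:
            continue  # stale heap entry
        r, c = cell
        moves = [((r + dr, c + dc), 1) for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))]
        moves += [(dst, 0) for dst in warps.get(cell, [])]
        for (nr, nc), cost in moves:
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + cost
                if ng < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = ng
                    heapq.heappush(heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```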

In the image, you can see that it only takes 8 moves to get from the green node to the red node when taking the blue path. On the blue path, a purple node instantly moves you to the next purple node. The 2-move stretch in the middle is the gap between two warps that you have to walk across to reach the second one.
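One common way to model this (a standard shortest-path technique, not something stated in the question) is to treat each warp as an extra edge that costs 0 moves and every ordinary step as an edge that costs 1. With only 0- and 1-cost edges, a 0-1 BFS using a deque finds the fewest moves. This sketch assumes a hypothetical input format: a list of strings with `#` for blocked cells, 4-directional moves, and `warps` as a dict from an entrance cell to the cells it leads to. It also assumes taking a warp is optional; if stepping on a purple node forces the warp, you would skip the walking moves out of an entrance cell.

```python
from collections import deque


def min_moves(grid, start, goal, warps):
    """0-1 BFS: a step to a neighbouring open cell costs 1, taking a warp costs 0.

    Returns the fewest moves from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        r, c = cell
        d = dist[cell]
        # Warp edges cost 0, so their targets go to the front of the deque.
        for dst in warps.get(cell, []):
            if d < dist.get(dst, float("inf")):
                dist[dst] = d
                queue.appendleft(dst)
        # Ordinary steps cost 1, so their targets go to the back.
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                if d + 1 < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = d + 1
                    queue.append((nr, nc))
    return dist.get(goal)
```

For example, on a single row of 20 open cells with warps from (0, 2) to (0, 10) and from (0, 12) to (0, 18), `min_moves` returns 5 moves from (0, 0) to (0, 19), compared with 19 without warps.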

Taking the blue path is clearly faster, since you only need to move roughly half as far as on the yellow path, but how do I do this programmatically?

For the purpose of solving this problem, let's assume that there are multiple purple "warps" around the graph that you can use, and that we know exactly where each warp is on the graph and where it leads.

Some purple warps are bidirectional and some are not: a one-way warp can only be entered from one side, and you cannot go back after warping.
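In graph terms, a one-way warp is a single directed edge and a bidirectional warp is a pair of directed edges. As a sketch, assuming each warp is described by a hypothetical `(entrance, exit, two_way)` triple, the adjacency dict that a search would consume can be built like this:

```python
from collections import defaultdict


def build_warp_edges(warp_specs):
    """Turn (entrance, exit, two_way) triples into a directed adjacency dict.

    A one-way warp contributes only entrance -> exit; a two-way warp also
    contributes exit -> entrance.
    """
    edges = defaultdict(list)
    for entrance, exit_, two_way in warp_specs:
        edges[entrance].append(exit_)
        if two_way:
            edges[exit_].append(entrance)
    return dict(edges)
```

A search then only ever follows `edges[cell]`, so a one-way warp simply has no edge leading back.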

I've thought about a solution, and the only approach I've come up with is to compute the distance to each warp point (excluding the one-way points), the distances between those points, and the distances to the points close to them.
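That idea can be made precise by building a small graph over just the interesting points. This is a sketch under my own assumptions, not the asker's method. The nodes are the start, the goal, and every warp endpoint. A walking edge connects the start or any warp exit to the goal or any warp entrance, weighted by the BFS walking distance. Each warp adds a 0-cost edge from its entrance to its exit. Dijkstra's algorithm on this graph then compares every combination of warps, including chains of them. The grid format (strings, `#` blocked), the `warps` dict (entrance to list of exits, with a bidirectional warp listed in both directions), and the names `walk_distances` and `shortest_via_warp_graph` are hypothetical.

```python
import heapq
from collections import deque


def walk_distances(grid, source):
    """Plain BFS over open cells, ignoring warps. Returns {cell: steps}."""
    rows, cols = len(grid), len(grid[0])
    dist = {source: 0}
    queue = deque([source])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist


def shortest_via_warp_graph(grid, start, goal, warps):
    """Dijkstra over a graph whose nodes are start, goal and warp endpoints."""
    entrances = set(warps)
    sources = {start} | {dst for dsts in warps.values() for dst in dsts}
    targets = entrances | {goal}

    # Walking edges: from start or any exit to any entrance or the goal.
    graph = {}
    for src in sources:
        d = walk_distances(grid, src)
        graph[src] = [(t, d[t]) for t in targets if t in d and t != src]
    # Warp edges: entrance -> exit at cost 0 (direction comes from `warps`).
    for entrance, dsts in warps.items():
        graph.setdefault(entrance, []).extend((dst, 0) for dst in dsts)

    best = {start: 0}
    heap = [(0, start)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == goal:
            return cost
        if cost > best[node]:
            continue  # stale heap entry
        for nxt, w in graph.get(node, []):
            if cost + w < best.get(nxt, float("inf")):
                best[nxt] = cost + w
                heapq.heappush(heap, (cost + w, nxt))
    return None
```

The walking distances between warp endpoints do not depend on the start or goal, so if you run many queries on the same map they could be computed once and cached.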

Somehow the program would have to figure out that it is better to take the second warp than to walk from the exit of the first one. So instead of moving 6 spaces, warping, and then walking the remaining 8 steps (which is still faster than not using warps at all), it would take the 6 moves, warp, and then take the 2 moves to the second warp.
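Dijkstra's algorithm makes this decision without any special rule. It keeps the smallest known cost for every cell, so a route that warps twice wins whenever its total is lower than warping once and walking the rest. The sketch below uses the same assumed grid format and 0-cost `warps` dict as a hypothetical input. It also records whether each cell was reached by walking or by warping, so you can see which warps the chosen route uses. The name `route_with_warps` is illustrative.

```python
import heapq


def route_with_warps(grid, start, goal, warps):
    """Dijkstra on the grid plus 0-cost warp edges, remembering how each cell was reached.

    Returns (moves, steps) with steps a list of (cell, "start" | "walk" | "warp"),
    or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    parent = {start: (None, "start")}
    heap = [(0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if d > dist[cell]:
            continue  # stale heap entry
        if cell == goal:
            break
        r, c = cell
        options = [(dst, 0, "warp") for dst in warps.get(cell, [])]
        options += [((r + dr, c + dc), 1, "walk") for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))]
        for (nr, nc), cost, how in options:
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                if d + cost < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = d + cost
                    parent[(nr, nc)] = (cell, how)
                    heapq.heappush(heap, (d + cost, (nr, nc)))
    if goal not in dist:
        return None
    # Walk the parent links back from the goal, then reverse into start-to-goal order.
    steps, cell = [], goal
    while cell is not None:
        prev, how = parent[cell]
        steps.append((cell, how))
        cell = prev
    return dist[goal], steps[::-1]
```

On a single row of 20 open cells with warps from (0, 2) to (0, 10) and from (0, 12) to (0, 18), it reports 5 moves with warp hops landing on (0, 10) and (0, 18). With only the first warp available, it reports 2 + 9 = 11 moves.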

EDIT: I realized the blue path will actually take 12 moves, instead of 8, but the question remains the same.