In my experience developing research code (graph algorithms and sparse linear algebra, both for graduate work and in a corporate research lab), unit tests end up being invaluable.

When your algorithm performs worse than you expected, you need to be able to determine with confidence that the problem lies in the algorithm and not in the implementation. If you can't, you may end up discarding promising research or, worse, publishing incorrect results.

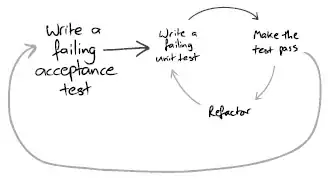

Test-driven development is designed for continually changing and incomplete specifications. By making sure your code works at the low level, you can reorganize it, change it, and reuse it. I've seen many graduate students throw away months of work because they tried to save time by not verifying that their code works.

Whenever you identify a function you want to write that has well-defined behavior (e.g., norm2(), calculateHitRate(), calculateResidual()), write a unit test for it. Some of your unit tests may be sanity checks rather than actual output validation, such as ensuring a matrix is symmetric or positive semidefinite. Basically, make sure that at each step of your algorithm your data has the properties you expect. Remember that in TDD, writing the minimal code to pass the test does not mean writing incorrect code that happens to pass the test; it means not writing features that are not tested.
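To make that concrete, here is a minimal sketch of both kinds of test in plain Python. Everything in it is illustrative: `norm2` is my own stand-in for the function named above, `is_symmetric` and `quadratic_form` are hypothetical helpers, and the path-graph Laplacian is just a convenient example matrix. Note that probing x^T A x >= 0 on a few vectors is only a necessary condition for positive semidefiniteness, so treat it as a sanity check and not a proof.

```python
import math


def norm2(v):
    """Euclidean (2-)norm of a sequence of numbers."""
    return math.sqrt(sum(x * x for x in v))


def is_symmetric(a, tol=1e-12):
    """True if the square matrix `a` (a list of rows) equals its transpose within `tol`."""
    n = len(a)
    if any(len(row) != n for row in a):
        return False
    return all(abs(a[i][j] - a[j][i]) <= tol
               for i in range(n) for j in range(i + 1, n))


def quadratic_form(a, x):
    """Compute x^T A x for a square matrix `a` and a vector `x`."""
    n = len(x)
    return sum(x[i] * a[i][j] * x[j] for i in range(n) for j in range(n))


def test_norm2_known_values():
    # Output validation: compare against values worked out by hand.
    assert norm2([3.0, 4.0]) == 5.0
    assert norm2([]) == 0.0
    assert math.isclose(norm2([1.0, 1.0]), math.sqrt(2.0))


def test_laplacian_properties():
    # Sanity check: the Laplacian of the path graph 0-1-2 should be
    # symmetric, and x^T L x should never be negative.
    lap = [[1.0, -1.0, 0.0],
           [-1.0, 2.0, -1.0],
           [0.0, -1.0, 1.0]]
    assert is_symmetric(lap)
    for x in ([1.0, 1.0, 1.0], [1.0, 0.0, -1.0], [1.0, -2.0, 1.0]):
        assert quadratic_form(lap, x) >= -1e-12


if __name__ == "__main__":
    test_norm2_known_values()
    test_laplacian_properties()
```

The functions are named `test_*` so a runner such as pytest would pick them up, but they can also be called directly as in the `__main__` guard. In real code you would run the property checks on the intermediate matrices your algorithm actually produces.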

In research, I think automated acceptance tests have much lower value, since in many cases you will be analyzing your results qualitatively (figuring out why your algorithm performed worse or better). If your algorithm achieves a hit rate of 0.21 instead of 0.24, how will you know whether that is due to a bug or just that your algorithm is not as good on that dataset as you hoped?