You're doing premature optimization.

Although the question you linked claims that:

I know that I can override it by CSS, but that creates lots of overriden specifications, and that seems to highly affect the CPU usage when browsing the page.

[...]

It is a single static page with several hundred hidden <li> items that alternatively become displayed with a click of a button powered by Javascript. I comfirmed that the Javascript part is not using up the CPU much.

[...]

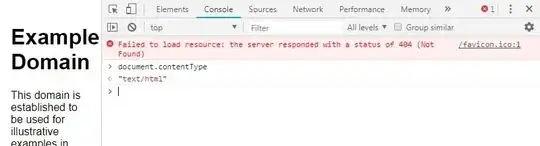

I am using Google Chrome CPU profiler and timeline for the particular page.

and is slightly more elaborate than the usual “I believe that here's the bottleneck, although I haven't profiled anything yet,” it may happen that:

- The person asking the original question had a flaw in the measurements,

- The person claimed to have profiled the page, but hadn't actually done so,

- There was a bug in the version of Chrome from 2012,

- There was something very specific on the page, aside from the several hundred hidden list elements.

If it were a real performance analysis, I would expect the question to contain:

- Detailed information about the experiment. What was measured? How? In which context? On which machines? Which operating system was used? Were the machines doing anything else?

- The source code, allowing the test to be performed independently.

- The actual measurements.

- A comparison between the page where the default style was reset and the same page where the default style was kept intact.

- A conclusion, such as: “Based on the collected data (see above), it appears that the default style override added 82 ms on the tested machines, which represents an average of 19% of the load time.”
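The comparison and the conclusion described above come down to simple arithmetic on two sets of timings. Here is a minimal sketch, assuming you have already collected load times in milliseconds for both variants of the page. The names `compareLoadTimes` and `Comparison` are hypothetical, the sample values are made up for illustration, and the percentage is computed relative to the baseline mean, which is only one possible convention.

```typescript
// Hypothetical summary of a before/after timing comparison (all values in ms).
interface Comparison {
  meanBaselineMs: number; // page with default styles kept intact
  meanVariantMs: number;  // same page with the reset stylesheet applied
  deltaMs: number;        // variant mean minus baseline mean
  deltaPercent: number;   // delta expressed as a percentage of the baseline mean
}

function mean(samples: number[]): number {
  if (samples.length === 0) {
    throw new Error("at least one sample is required");
  }
  return samples.reduce((sum, value) => sum + value, 0) / samples.length;
}

function compareLoadTimes(baselineMs: number[], variantMs: number[]): Comparison {
  const meanBaselineMs = mean(baselineMs);
  const meanVariantMs = mean(variantMs);
  const deltaMs = meanVariantMs - meanBaselineMs;
  return {
    meanBaselineMs,
    meanVariantMs,
    deltaMs,
    deltaPercent: (deltaMs / meanBaselineMs) * 100,
  };
}

// Made-up samples, for illustration only.
const report = compareLoadTimes([400, 410, 390], [440, 450, 430]);
console.log(`${report.deltaMs} ms slower (${report.deltaPercent.toFixed(1)}% of the baseline mean)`);
```

A mean over three samples is obviously not a rigorous benchmark; a real analysis would use many more runs and report the spread as well.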

Since the original question cannot be used as a relevant benchmark, you're only supposing that:

> on this website normally microscopic increases in CSS performance will be bloated out to noticeable macroscopic jumps in performance.

and that the CSS reset stylesheet would be the bottleneck. Let's see.

Macroscopic jumps in performance

> I am creating a project-oriented website where users can create projects with millions of items (DOM nodes)

The fact that today's browsers are very capable of rendering a lot of stuff doesn't mean that you should cram a lot of stuff onto a page. From a design perspective, this simply doesn't make sense.

Imagine looking at a million elements at once. A million numbers. A million images. A million words. Whatever. What does it look like? By comparison, a 1920×1080 monitor contains 2,073,600 pixels. Those are pixels, not data elements. Not words or numbers, or even chart points.
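To put that comparison in numbers, here is a tiny sketch of the pixel budget per element on such a screen. The helper name `pixelsPerItem` is hypothetical, and the calculation assumes the items share the whole screen evenly with no margins.

```typescript
// Hypothetical helper: how many screen pixels each item gets
// if the items split the whole screen evenly.
function pixelsPerItem(widthPx: number, heightPx: number, itemCount: number): number {
  if (itemCount <= 0) {
    throw new Error("itemCount must be positive");
  }
  return (widthPx * heightPx) / itemCount;
}

const budget = pixelsPerItem(1920, 1080, 1000000);
console.log(budget.toFixed(2)); // prints "2.07"
```

Roughly two pixels per item is not enough to draw a single legible character, let alone a word or an image.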

The fact is that if you're showing a few thousand data elements, those elements should, in order to be usable, be presented in specific forms, such as images or charts. This means that each of those elements won't have its own DOM node, because you'll be using a canvas. For anything else, the number of elements visible to a user at once would drop to a few hundred.

Of course, there are sometimes more DOM nodes than there are data elements displayed on a page. But having millions of DOM nodes? I don't believe it.

What about the scroll?

Indeed, pages do get scrolled up and down, which means that they sometimes contain more elements than the user can see at once.

Note that while very long pages (long in terms of vertical scrolling) are usually less responsive, the relation between performance and number of DOM nodes on a page is not exactly linear. Browsers are smart enough to focus on the content which has to be displayed to the user, and do slightly less work for content which is not visible.

Nevertheless, you can get yourself into a situation where there is a huge amount of information to display on a single page. Luckily, there are two examples where this problem was successfully solved: Google Maps and Google's PDF viewer.

With Google Maps, the browser displays the content which is visible, and discards the content which is not. This has a benefit and a drawback. The benefit is that you can run Google Maps on a machine which has less than a few terabytes of memory. The drawback is that when you move from one location to another, the application must occasionally reload data it discarded a few minutes ago.

With Google's PDF viewer, the approach is very similar. The entire PDF is rendered as a bunch of images and text, all using position: absolute. Every time you scroll the page, the app recomputes what should or should not be shown, and manually adjusts the top and left position of the elements. This makes it possible to effectively handle millions of DOM elements while ensuring outstanding responsiveness.
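The "recompute what should be shown on every scroll" step can be sketched as a pure function. This is a minimal illustration assuming a vertical list whose rows all have the same fixed height; it is not how Google's viewer is actually implemented, and the names `visibleRange`, `topOffset` and `overscan` are hypothetical.

```typescript
// Inclusive range of row indices to keep in the DOM.
// An empty list is reported as { first: 0, last: -1 }.
interface VisibleRange {
  first: number;
  last: number;
}

// Assumes every row has the same height (a simplifying assumption).
// `overscan` adds a few extra rows above and below the viewport
// so that fast scrolling does not reveal blank areas.
function visibleRange(
  scrollTop: number,
  viewportHeight: number,
  itemHeight: number,
  itemCount: number,
  overscan: number = 2
): VisibleRange {
  if (itemHeight <= 0) {
    throw new Error("itemHeight must be positive");
  }
  if (itemCount <= 0) {
    return { first: 0, last: -1 };
  }
  const firstVisible = Math.floor(scrollTop / itemHeight);
  const lastVisible = Math.ceil((scrollTop + viewportHeight) / itemHeight) - 1;
  return {
    first: Math.max(0, firstVisible - overscan),
    last: Math.min(itemCount - 1, lastVisible + overscan),
  };
}

// Absolute `top` position (in px) for a row rendered with position: absolute.
function topOffset(index: number, itemHeight: number): number {
  return index * itemHeight;
}

// One million rows of 30 px, a 600 px viewport scrolled down by 3000 px.
const range = visibleRange(3000, 600, 30, 1000000);
console.log(range); // { first: 98, last: 121 }
```

In a real page, a scroll handler would call something like `visibleRange` and then create, reposition or remove only the rows inside that range, so the DOM holds a few dozen nodes regardless of how many items the project contains.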

CSS impact

Still, for any large website with rich content, it would be wise to take a look at the impact of CSS. Once you've put your static resources on a CDN, properly minified them and implemented proper client-side caching, there may still be a few CSS optimizations left to make.

In general, those optimizations focus on:

- The number of styles,

- The number of elements targeted by a selector,

- The complexity of selectors.

And, indeed, you may notice that CSS reset stylesheets are among the ones you may want to optimize, since they often contain universal selectors such as * { ... }, which target every element on the page.

Once you find that those selectors are an actual bottleneck of your entire application, you work on the selectors to either remove them when possible, or make them more specific. In both cases, it generally comes down to a trade-off between performance on one side and consistency and backwards compatibility on the other. In other words, you may decide that it's not that important to have the same spacing between two elements in Chrome and Safari, or that you don't really care that one particular area of the web app is displayed incorrectly in Internet Explorer 9.

This is basically how it works.