This might be a controversial meta-answer, and I'm a bit late to the party, but it's important to mention here, because I think I know where you're coming from.

The problem with the way design patterns are taught is that they are presented like this:

> You have this specific scenario. Organize your code this way. Here's a smart-looking, but somewhat contrived example.

The problem is that when you start doing real engineering, things are not quite this cut-and-dried. The design pattern you read about will not quite fit the problem you are trying to solve. Not to mention that the libraries you are using totally violate everything stated in the text explaining those patterns, each in its own special way. As a result, the code you write "feels wrong" and you ask questions like this one.

In addition to this, I'd like to quote Andrei Alexandrescu, who says this about software engineering:

> Software engineering, maybe more than any other engineering discipline, exhibits a rich multiplicity: You can do the same thing in so many correct ways, and there are infinite nuances between right and wrong.

Perhaps this is a bit of an exaggeration, but I think this perfectly explains an additional reason why you might feel less confident in your code.

It is in times like this that the prophetic voice of Mike Acton, game engine lead at Insomniac, screams in my head:

> KNOW YOUR DATA

He's talking about the inputs to your program and the desired outputs. And then there's this Fred Brooks gem from The Mythical Man-Month:

> Show me your flowcharts and conceal your tables, and I shall continue to be mystified. Show me your tables, and I won’t usually need your flowcharts; they’ll be obvious.

So if I were you, I would reason about my problem in terms of my typical input case and whether my code produces the desired, correct output for it. I would ask questions like these:

- Is the output data from my program correct?

- Is it produced efficiently/quickly for my most common input case?

- Is my code easy enough to reason about locally, for both me and my teammates? If not, can I make it simpler?

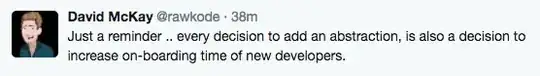

When you do that, the question of "how many layers of abstraction or design patterns are needed" becomes much easier to answer. How many layers of abstraction do you need? As many as necessary to achieve these goals, and not more. "What about design patterns? I haven't used any!" Well, if the above goals were achieved without direct application of a pattern, then that's fine. Make it work, and move on to the next problem. Start from your data, not from the code.
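To make "start from your data" a bit more concrete, here is a minimal sketch in Python. The task (summing order amounts per customer) and every name in it (`Order`, `totals_by_customer`, `typical_orders`) are made up for illustration and are not from the question. The point is only the order of work: write down the input shape and the desired output first, then write the simplest code that gets from one to the other.

```python
from collections import defaultdict
from typing import NamedTuple


class Order(NamedTuple):
    # Hypothetical input record: decide what the data looks like first.
    customer: str
    amount_cents: int


def totals_by_customer(orders: list[Order]) -> dict[str, int]:
    """Desired output: total spend per customer, in cents."""
    totals: dict[str, int] = defaultdict(int)
    for order in orders:
        totals[order.customer] += order.amount_cents
    # Return a plain dict so callers do not depend on defaultdict behavior.
    return dict(totals)


# A typical input case, written down before any abstraction is considered.
typical_orders = [
    Order("alice", 1200),
    Order("bob", 450),
    Order("alice", 300),
]

result = totals_by_customer(typical_orders)
```

There is no named pattern anywhere in this, and for this input that is fine. If the three questions above are answered with a "yes", I would stop here and only add a layer once the data actually demands it.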