What a mother lode of a question! I might embarrass myself attempting this one with my quirky thoughts (and I would love to hear suggestions if I'm really off). But for me the most useful thing I've learned lately in my domain (which included gaming in the past, now VFX) has been to replace interactions between abstract interfaces with data as a decoupling mechanism (and ultimately reduce the amount of information required between things and about each other to the absolute barest of minimums). This might sound completely insane (and I might be using all sorts of poor terminology).

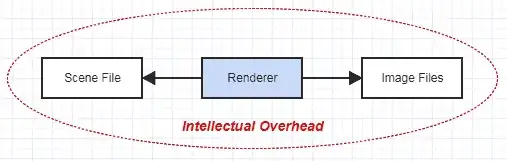

Yet let's say I give you a reasonably manageable job. You have this file containing scene and animation data to render. There's documentation covering the file format. Your only job is to load the file, render pretty images for the animation using path tracing, and output the results to image files. That's a pretty small scale application that's probably not going to span more than tens of thousands of LOC even for a pretty sophisticated renderer (definitely not millions).

You have your own little isolated world for this renderer. It isn't affected by the outside world. It isolates its own complexity. Beyond the concerns of reading this scene file and outputting your results to image files, you get to focus entirely on just rendering. If something goes wrong in the process, you know it's in the renderer and nothing else, since there is nothing else involved in this picture.

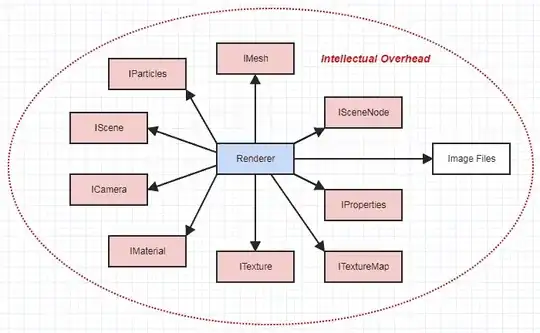

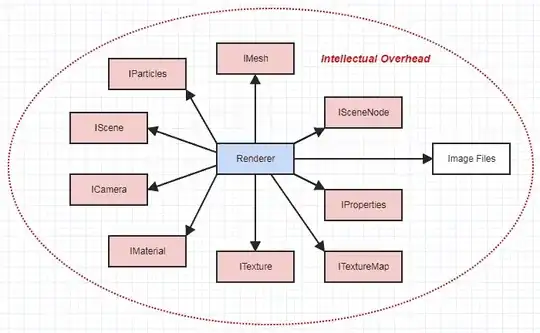

Meanwhile let's say instead that you have to make your renderer work in the context of a big animation software which actually does have millions of LOC. Instead of just reading a streamlined, documented file format to get at the data necessary for rendering, you have to go through all kinds of abstract interfaces to retrieve all the data you need to do your thing:

Suddenly your renderer is no longer in its own little isolated world. This feels so, so much more complex. You have to understand the overall design of a whole lot of the software as one organic whole with potentially many moving parts, and maybe even sometimes having to think about implementations of things like meshes or cameras if you hit a bottleneck or a bug in one of the functions.

Functionality vs. Streamlined Data

And one of the reasons is because functionality is much more complex than static data. There are also so many ways a function call could go wrong in ways that reading static data cannot. There are so many hidden side effects that could occur when calling those functions even though it's conceptually just retrieving read-only data for rendering. It can also have so many more reasons to change. A few months from now, you might find the mesh or texture interface changing or deprecating parts in ways that requires you to rewrite hefty sections of your renderer and keep up with those changes, even though you're fetching the exact same data, even though the data input to your renderer hasn't changed whatsoever (only the functionality required to ultimately access it all).

So when possible, I've found that streamlined data is a very good decoupling mechanism of a kind that really lets you avoid having to think about the entire system as a whole and lets you just concentrate on one very specific part of the system to make improvements, add new features, fix things, etc. It's following a very I/O mindset for the bulky pieces that make up your software. Input this, do your thing, output that, and without going through dozens of abstract interfaces end endless function calls along the way. And it's starting to resemble, to some degree, functional programming.

So this is just one strategy and it may not be applicable for all people. And of course if you're flying solo, you still have to maintain everything (including the format of the data itself), but the difference is that when you sit down to make improvements to that renderer, you can really just focus on the renderer for the most part and nothing else. It becomes so isolated in its own little world -- about as isolated as it could be with the data it requires for input being so streamlined.

And I used the example of a file format but it doesn't have to be a file providing the streamlined data of interest for input. It could be an in-memory database. In my case it's an entity-component system with the components storing the data of interest. Yet I've found this basic principle of decoupling towards streamlined data (however you do it) so much less taxing on my mental capacity than previous systems I worked on which revolved around abstractions and lots and lots and lots of interactions going on between all these abstract interfaces which made it impossible to just sit down with one thing and think only about that and little else. My brain filled to the brink with those previous types of systems and wanted to explode because there were so many interactions going on between so many things, especially when something went wrong in a completely different area than what I was trying to focus on between all these abstract function calls.

Decoupling

If you want to minimize how much larger codebases tax your brain, then make it so each hefty part of the software (a whole rendering system, a whole physics system, etc) lives in the most isolated world possible. Minimize the amount of communication and interaction that goes on to the barest of minimums through the most streamlined data. You might even accept some redundancy (some redundant work for the processor, or even for yourself) if the exchange is a far more isolated system that doesn't have to talk to dozens of other things before it can do its work.

And when you start doing that, it feels like you're maintaining a dozen small-scale applications instead of one gigantic one. And I find that so much more fun too. You can sit down and just work on one system to your heart's content without concerning yourself with the outside world. It just becomes inputting the right data and outputting the right data at the end to some place where other systems can get at it (at which point some other system might input that and do its thing, but you don't have to care about that when working on your system). Of course you still have to think about how everything integrates in the user interface, for example (I still find myself having to think about everything's design as a whole for GUIs), but at least not when you sit down and work on that existing system or decide to add a new one.

Perhaps I'm describing something obvious to people who keep up-to-date with the latest engineering methods. I don't know. But it wasn't obvious to me. I wanted to approach the design of software around objects interacting with each other and functions being called for large-scale software. And the books I read originally on large-scale software design focused on interface designs above things like implementations and data (the mantra back then was that implementations don't matter so much, only interfaces, because the former could be easily swapped out or substituted). It didn't come intuitively to me at first to think about a software's interactions as boiling down to just inputting and outputting data between huge subsystems that barely talk to each other except through this streamlined data. Yet when I started to shift my focus to designing around that concept, it made things so much easier. I could add so much more code without my brain exploding. It felt like I was building a shopping mall instead of a tower which could come toppling down if I added too much or if there was a fracture in any one part.

Complex Implementations vs. Complex Interactions

This is another one I should mention because I spent a good portion of my early part of my career seeking out the simplest implementations. So I decomposed things into the teeniest and simplest bits and pieces, thinking I was improving maintainability.

In hindsight I failed to realize I was exchanging one type of complexity for another. In reducing everything down to the simplest bits and pieces, the interactions that went on between those teeny pieces turned into the most complex web of interactions with function calls that sometimes went 30 levels deep into the callstack. And of course, if you look at any one function, it's so, so simple and easy to know what it does. But you're not getting much useful information at that point because each function is doing so little. You then end up having to trace through all sorts of functions and jump through all sorts of hoops to actually figure out what they all add up to doing in ways that can make your brain want to explode more than one bigger, more complex thing which centralizes and isolates its complexity instead of scattering it about all over the place.

That's not to suggest god objects or anything like that. But perhaps we don't need to dice up our mesh objects into the tiniest things like a vertex object, edge object, face object. Maybe we could just keep it at "mesh" with a moderately more complex implementation behind it in exchange for radically fewer code interactions. I can handle a moderately complex implementation here and there. I can't handle a gazillion interactions with side effects occurring who-knows-where and in what order.

At least I find that much, much less taxing on the brain, because it's the interactions that make my brain hurt in a large codebase. Not any one specific thing.

Generality vs. Specificity

Maybe tied to the above, I used to love generality and code reuse, and used to think the biggest challenge of designing a good interface was fulfilling the widest range of needs because the interface would be used by all sorts of different things with different needs. And when you do that, you inevitably have to think about a hundred things at once, because you're trying to balance the needs of a hundred things at once.

Generalizing things takes so much time. Just look at the standard libraries that accompany our languages. The C++ standard library contains so little functionality, yet it requires teams of people to maintain and tune with whole committees of people debating and making proposals about its design. That's because that little teeny bit of functionality is trying to handle the entire world's needs.

Perhaps we don't need to take things so far. Maybe it's okay to just have a spatial index that's only used for collision detection between indexed meshes and nothing else. Maybe we can use another one for other kinds of surfaces, and another one for rendering. I used to get so focused on eliminating these kinds of redundancies, but part of the reason was because I was dealing with very inefficient data structures implemented by a wide range of people. Naturally if you have an octree that takes 1 gigabyte for a mere 300k triangle mesh, you don't want to have yet another one in memory.

But why are the octrees so inefficient in the first place? I can create octrees that only take 4 bytes per node and take less than a megabyte to do the same thing as that gigabyte version while building in a fraction of the time and doing faster search queries. At that point some redundancy is totally acceptable.

Efficiency

So this is only relevant to performance-critical fields but the better you get at things like memory efficiency, the more you can afford to waste a bit more (maybe accept a bit more redundancy in exchange for reduced generality or decoupling) in favor of productivity. And there it helps to get pretty good and comfy with your profilers and learn about computer architecture and the memory hierarchy, because then you can afford to make more sacrifices to efficiency in exchange for productivity because your code is already so efficient and can afford to be a little less efficient even in the critical areas while still outperforming the competition. I've found that improving in this area has also allowed me to get away with simpler and simpler implementations, since before I was trying to compensate for my lack of skills in micro-efficiency with more and more complex algorithms and data structures (and the latter yields much, much more complex code than more straightforward data structures that are just really efficient with memory layouts and access patterns).

Reliability

This is kind of obvious but might as well mention it. Your most reliable things require the minimum intellectual overhead. You don't have to think much about them. They just work. As a result the bigger you grow your list of ultra reliable parts that are also "stable" (don't need to change) through thorough testing, the less you have to think about.

Specifics

So all of that above covers some general things that have been helpful to me, but let's move on to more specific aspects for your area:

In my smaller projects, it is easy to remember a mental map of how

every part of the program works. Doing this, I can be fully aware of

how any change will effect the rest of the program and avoid bugs very

effectively as well as see exactly how a new feature should fit into

the code base. When I attempt to create larger projects, however, I

find it impossible to keep a good mental map which leads to very messy

code and numerous unintended bugs.

For me this tends to be related to complex side effects and complex control flows. That's a rather low-level view of things but all the nicest-looking interfaces and all the decoupling away from the concrete to the abstract cannot make it any easier to reason about complex side effects occurring in complex control flows.

Simplify/reduce the side effects and/or simplify the control flows, ideally both. and you'll generally find it so much easier to reason about what much bigger systems do, and also what will happen in response to your changes.

In addition to this "mental map" issue, I find it hard to keep my code

decoupled from other parts of itself. For example, if in a multiplayer

game there is a class to handle the physics of player movement and

another to handle networking, then I see no way to have one of these

classes not rely on the other to get player movement data to the

networking system to send it over the network. This coupling is a

significant source of the complexity that interferes with a good

mental map.

Conceptually you have to have some coupling. When people talk about decoupling, they usually mean replacing one kind with another, more desirable kind (typically towards abstractions). To me, given my domain, the way my brain works, etc. the most desirable kind to reduce the "mental map" requirements to a bare minimum is that streamlined data discussed above. One black box spits out data that gets fed into another black box, and both completely oblivious about each other's existence. They're only aware of some central place where data is stored (ex: a central filesystem or a central database) through which they fetch their inputs, do something, and spit out a new output which some other black box might then input.

If you do it this way, the physics system would depend on the central database and the networking system would depend on the central database, but they wouldn't know a thing about each other. They wouldn't even have to know each other exist. They wouldn't even have to know that abstract interfaces for each other exist.

Lastly, I often find myself coming up with one or more "manager"

classes that coordinate other classes. For example, in a game a class

would handle the main tick loop and would call update methods in the

networking and player classes. This goes against a philosophy of what

I have found in my research that each class should be unit-testable

and usable independently of others, since any such manager class by

its very purpose relies on most of the other classes in the project.

Additionally, a manager classes orchestration of the rest of the

program is a significant source of non-mental-mappable complexity.

You tend to need something to orchestrate all the systems in your game. Central is maybe at least less complex and more manageable than like a physics system invoking a rendering system after it's done. But here we inevitably need some functions being called, and preferably they're abstract.

So you might create an abstract interface for a system with an abstract update function. It can then register itself with the central engine and your networking system can say, "Hey, I'm a system and here is my update function. Please call me from time to time." And then your engine can loop through all such systems and update them without hard-coding function calls to specific systems.

That allows your systems to live more in like their own isolated world. The game engine doesn't have to know about them specifically (in a concrete way) anymore. And then your physics system might have its update function called, at which point it inputs the data it needs from the central database for everything's motion, applies physics, then outputs the resulting motion back.

After that your networking system might have its update function called, at which point it inputs the data it needs from the central database and outputs, say, socket data to clients. Again the goal as I see it is to isolate each system as much as possible so that it can live in its own little world with minimal knowledge of the outside world. That's basically the kind of approach adopted in ECS that's popular among game engines.

ECS

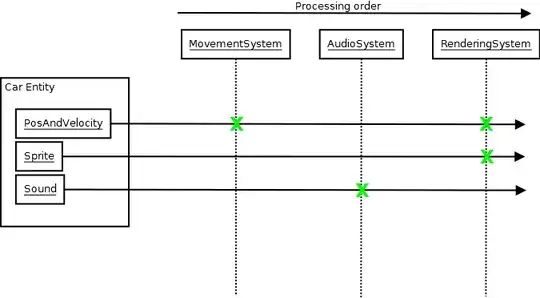

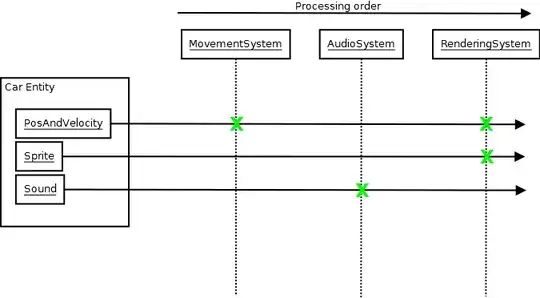

I guess I should cover ECS a little since a lot of my thoughts above revolve around ECS and trying to rationalize why this data-oriented approach to decoupling has made maintenance so much easier than the object-oriented and COM-based systems I've maintained in the past in spite of violating just about everything I held sacred originally that I learned about SE. Also it might make a lot of sense for you if you're into trying to build larger-scale games. So ECS works like this:

And as in the above diagram, the MovementSystem might have its update function called. At this point it might query the central database for PosAndVelocity components as the data to input (components are just data, no functionality). Then it might loop through those, modify the positions/velocities, and effectively output the new results. Then the RenderingSystem might have its update function called, at which point it queries the database for PosAndVelocity and Sprite components, and outputs images to the screen based on that data.

All the systems are completely oblivious about each other's existence, and they don't even need to understand what a Car is. They only need to know specific components of each system's interest that make up the data required to represent one. Each system is like a black box. It inputs data and outputs data with minimal knowledge of the outside world, and the outside world also has minimal knowledge of it. There might be some event pushing from one system and popping from another so that, say, the collision of two entities in the physics system can cause the audio to see a collision event that causes it to play sound, but the systems can still be oblivious about each other. And I've found such systems so much easier to reason about. They don't make my brain want to explode even if you have dozens of systems, because each one is so isolated. You don't have to think about the complexity of everything as a whole when you zoom in and work on any given one. And because of that, it's also very easy to predict the results of your changes.