I recently started to dive into CQRS / ES because I might need to apply it at work. It seems very promising in our case, as it would solve a lot of problems.

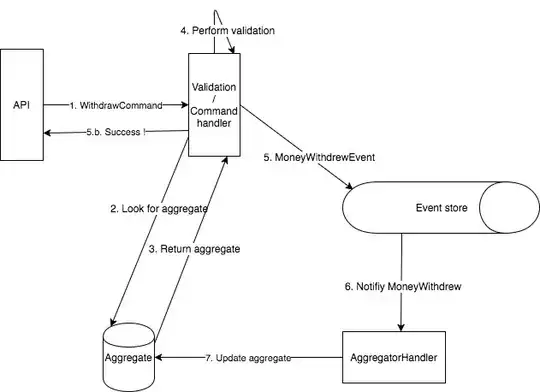

I sketched my rough understanding on how an ES / CQRS app should look like contextualized to a simplified banking use case (withdrawing money).

Just to sum up, if person A withdraws some money:

- a command is issued

- command is handed over for validation / verification

- an event is pushed to an event store if the validation succeeds

- an aggregator dequeues the event to apply modifications on the aggregate

From what I understood, the event log is the source of truth, as it is the log of FACTS, we can then derive any projection out of it.

Now, what I don't understand, in this grand scheme of things, is what happens in this case:

- rule: a balance cannot be negative

- person A has a balance of 100e

- person A issues a WithdrawCommand of 100e

- validation passes and MoneyWithdrewEvent of 100e event is emitted

- in the meantime, person A issues another WithdrawCommand of 100e

- the first MoneyWithdrewEvent did not get aggregated yet therefore validation passes, because the validation check against the aggregate (that has not been updated yet)

- MoneyWithdrewEvent of 100e is emitted another time

==> We are in an inconsistent state of a balance being at -100e and the log contains 2 MoneyWithdrewEvent

As I understand there are several strategies to cope with this problem:

- a) put the aggregate version id along with the event in the event store so if there is a version mismatch upon modification, nothing happens

- b) use some locking strategies, implying that the verification layer has to somehow create one

Questions related to the strategies:

- a) In this case, the event log is not the source of truth anymore, how to deal with it ? Also, we returned to the client OK whereas it was totally wrong to allow the withdrawal, is it better in this case to use locks ?

- b) Locks == deadlocks, do you have any insights about the best practices ?

Overall, is my understanding correct on how to handle concurrency ?

Note: I understand that the same person withdrawing two times money in such a short time window is impossible, but I took a simple example, not to get lost into details