I understand that there is a thread discussing a similar issue here:

How to efficiently search a set of vectors for a vector which is the closest match

But my problem is slightly different - and hopefully easier.

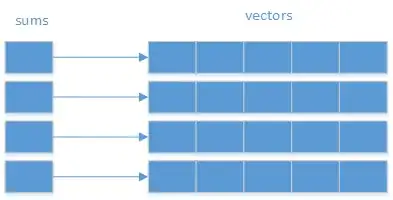

Given a set of vectors of the same dimension, e.g.,

[2, 3, 5, 9]

[12, 9, 2, 8]

[45, 1, 0, 1]

And a query vector [1, 4, 7, 2], I would like an algorithm that returns [2, 3, 5, 9] as the closest matching vector because it has the lowest sum of elementwise difference, i.e., (1-2) + (4-3) + (7-5) + (2-9) = -5

One way would be to pre-compute the pairwise 'similarity' but the computation is very heavy, given that I will have more than 5000 vectors so 250,000 comparisons.