I have run into similar problems in a different domain (VFX software) with similar temptations to use weak references all over the place to eliminate the massive GC logical leaks. In my case we were allowed to use weak references, but my problem was that I couldn't really get the internal team to abide by standards on how to use them properly let alone the third party developers using our software development kit to write plugins. I wasn't the lead of that project, just one of the developers involved who happened to know a bit about weak references in response to the problems we encountered with GC (which I didn't really care for in the first place).

So the solution I've found best and it is admittedly a bit biased by the particular type of people I was working with, the types of mistakes most commonly made, our specific use cases, and so forth was close to your #1 solution:

- Global entity storage Make a field in Server to store all Entity instances mapped by entity IDs.

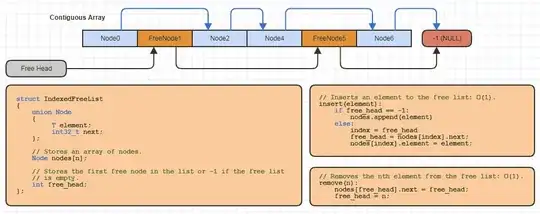

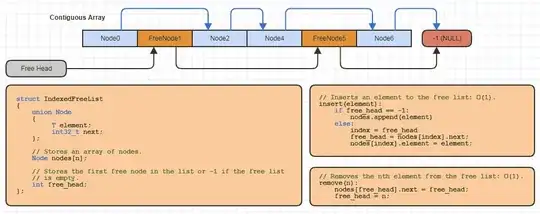

In my case the entities became stored in a central array and referred to by index into the array. To allow constant-time removal of any entity without invalidating indices to other elements, the array is simply allowed to have holes in it which are reclaimed upon subsequent insertions using a free list strategy, like so:

You can avoid having to treat entities as unions of the entity data itself or the next free index if you don't mind using a little extra memory to just store a stack of integers on the side for removed indices to reclaim. That's usually not too bad.

Explicit Destruction

What this effectively allows is explicit destruction of an entity:

destroy(entity_index);

Which is fantastic in my case. All the logical leaks were caused by trying to make destruction implicit, at which point a user might hit the delete button to remove a mesh from a scene and it doesn't actually get freed from memory because some obscure third party plugin failed to handle the event properly and set a reference to it to null. Now the third party plugin might simply crash as a result of failing to respond to the removal of the entity properly which is excellent for us -- better than spending hours and hours trying to investigate the source of these difficult-to-isolate, flying-under-the-radar leaks only to find it's not even in source code under our control.

If you do it this way, you do not expose the data types of the entities themselves to allow people to extend their lifetime. You expose a handle storing an index or something like that. If you want to work with handles that have functions in it, you can put methods in the handle classes themselves but internally they still store an index or ID or something like that to entity which isn't prolonging its lifetime.

This makes it so you can rest assured that when you do:

destroy(entity_index_or_handle);

... you can be confident that it will be destroyed and freed at the appropriate time (the next GC cycle, e.g.) and won't leak.

When to Destroy?

Now if you have concerns like when things should be destroyed, that's a separate concern if you ask me. First centralize the storage of entities so that outside parts of the system cannot extend their lifetimes. Then figure out whatever additional data structures and designs you need on top of that once you have entities capable of being destroyed explicitly.

The first step as I see it is to centralize ownership of entities to a single central container to allow explicit destruction/removal. That central container is the one and only place which stores strong references to the entities. If it's just an array you indexing, that's dirt cheap. Then, as a second step, you figure out where it makes the most sense in the design to destroy these things based on your level designs and so forth. That's something you can do on top of this now-centralized and more explicit memory management.

It allows you to get back that C-like control over explicitly freeing resources at the right time which tends to be less error-prone when it comes to memory leaks, but more error-prone when it comes to dangling pointer crashes, e.g. But it sounds like you are similar to me and work in a domain where something like a dangling pointer crash, trying to access a resource already destroyed, is preferable to a hard-to-detect memory leak, since the former might be quickly caught in testing while the latter might easily fly under the radar.

Multithreading

The one gotcha in my case is that in our designs at least, it made perfect sense to make destruction of entities explicit because it was usually in response to user input. The user selects something and hits delete, so it should now go away and be freed. We never had a case where it made sense from a high-level design standpoint for any resource to be shared in ownership by multiple things. The sole owner of entities was the "scene". If an entity is removed from the scene, it should be freed ASAP and not until the user starts removing other things or clearing the entire scene or shutting down the entire software because it's hogging up more and more memory the longer it is run.

However, the one exception where we want to allow the lifetime of an entity to be extended a little past that point is if there are still other threads processing the entity, and just for the local scope of that thread function until it's done doing whatever it's doing with that entity.

For this what I did is that the threads for the systems processing such entities run in phases/steps. They get a process event invoked repeatedly instead of running their own loops. There they might acquire and work with some entities for a short-lived period. If we attempt to do this:

destroy(entity_handle);

... while any one of the threads is in a "processing" phase, the removal of the entity is deferred until they are finished. It's a little bit of a "stop the world" kind of thing but the cleanup occurs very quickly. That allows the entities' lifetimes to be extended for very brief periods of time beyond the destroy request in multithreaded cases.

If such a design is not applicable in your case, then an alternative I contemplated is to use reference counting but a reference counting of a kind that extends an object lifetime beyond its normal period, like this:

// Entity will not be destroyed until we release.

entity_handle ent = extend_lifetime(entity_id);

if (ent.valid())

{

...

// Releases the entity to allow destruction.

release(ent);

}

Now what's different here is that people can still do:

destroy(ent);

There's still explicit destruction unlike normal ref-counting techniques, and an entity starts off with its reference count set to zero so that it can be destroyed immediately with those destroy calls (making the ref-counting an optional way to extend lifetimes beyond that explicit destruction). The difference is that we are allowed to optionally set the reference count past zero in short-lived threads, for example, to just prevent the destroy call from immediately destroying the entity until the thread releases it.

This passes a little more manual and slightly error-prone grunt work to threads outside of the main thread to explicitly extend the lifetime of objects beyond their normal scope (beyond a call to destroy). But it does allow such threads to kind of run however they want and whenever without having to execute their logic exclusively from thread processing events. Meanwhile if destroy is called outside of the main thread, then defer it (push it in a concurrent queue or something) for the main thread to process so that at least code in the main thread doesn't have to bother with this stuff.