I work on a large software programme - 100 developers in financial services.

The common wisdom of Continuous Integration is to get feedback early from your changes.

The common wisdom from Continuous Delivery is that by getting good at releasing small chunks, you reduce the risk of failure, because you can roll back easily - and so releasing small chunks helps you deliver to production rapidly and often.

A business value diagram in Lean allows us to see the flow of business value from left to right (similar to a production line) and from this you can identify where your change items are getting stuck, and where the bottlenecks in the process are.

The challenge in software development is identifying precisely what the widgets on the production line are.

If you read The Phoenix Project, then the changes are the change records flowing through the system(although this is heavily IT infrastructure focused). If you talk to a Scrum master - then the changes flow through the system are stories. If you talk to a developer, then the changes that flow through the system are GIT commits. (Which can and should align to stories).

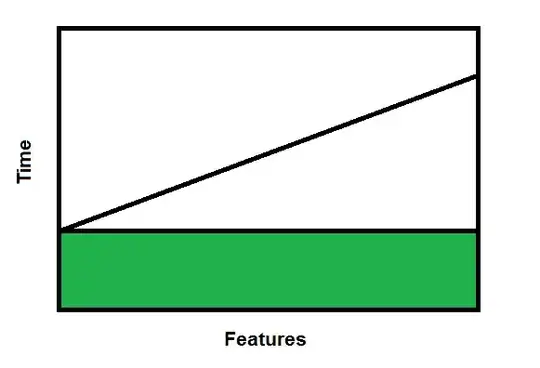

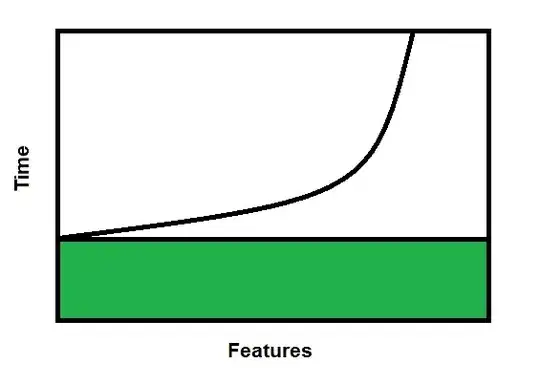

The simple reality is that we do small releases once a month, and large releases once every three months, due to the transaction cost of the regression test. (Don Reinersen's book The Principles of Product Development Flow is amazing on the tradeoffs of cycle time and transaction cost.

So in trying to identify the constraints on the system - instead of finding a work area where the items are piling up - to me it seems that the batch size itself is a constraint. By batch size, I mean the number of deliverables in a release. A release every month with a large number of developers would have a large number of stories/commits. I'm trying to quantify this.

We know that the economics of batch size is a u-curve optimisation problem, and that the transaction cost of a regression test and release is substantial.

My question is: How can I determine the optimal release frequency for maximum throughput?