One of the reasons is that the application domain and its users might not use these standards themselves. Even when a domain does use some standards, it might have made different choices from the ISO ones, often for historical reasons.

If your users already use "UK" in their existing procedures(1) to refer to the "United Kingdom of Great Britain and Northern Ireland", it doesn't necessarily make sense to use "GB" in their data structures (especially if what you mean by country isn't quite an ISO country, e.g. separating the UK nations, or treating the Channel Islands subtly differently, and so on). Of course, you could have a mapping between internal storage and presentation, but sometimes that's a bit over the top. You're rarely programming for the sake of programming; you often have to adapt to your environment.(2)
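For illustration, such a mapping layer could look like the minimal Python sketch below. It assumes storage uses ISO 3166-1 alpha-2 codes (where the United Kingdom is "GB") and that "UK" is the only local convention to support; the dictionary and function names are hypothetical. Even this tiny version shows the cost: every input and output path has to go through it, and it does nothing for entities that aren't ISO 3166-1 countries at all, such as the individual UK nations.

```python
# Hypothetical mapping: stored codes follow ISO 3166-1 alpha-2,
# while the user interface shows the labels users already work with.
ISO_TO_DISPLAY = {"GB": "UK"}
DISPLAY_TO_ISO = {label: code for code, label in ISO_TO_DISPLAY.items()}


def to_display(iso_code: str) -> str:
    """Convert a stored ISO code to the label users expect."""
    # Fall back to the ISO code itself when no local convention applies.
    return ISO_TO_DISPLAY.get(iso_code, iso_code)


def to_storage(user_code: str) -> str:
    """Convert a user-entered label back to the stored ISO code."""
    normalized = user_code.strip().upper()
    return DISPLAY_TO_ISO.get(normalized, normalized)


print(to_display("GB"), to_storage("uk"), to_display("FR"))  # UK GB FR
```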

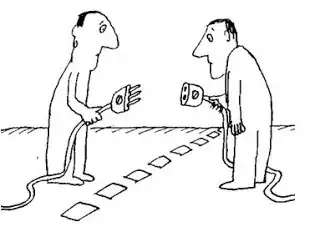

You also have to remember that these standards have evolved in parallel with software. You often have to develop within the context of other pieces of software, some of which may be imperfectly designed, and some of which may still be affected by legacy decisions.

Even if you look at internal data storage formats, some ambiguities are hard to resolve. For example, as far as I know, Excel uses a decimal number to represent timestamps: the integer part is the number of days since a reference date, and the fractional part is the fraction of a 24-hour day that gives you the time of day. The problem is that this prevents you from taking time zones or daylight saving time (days of 23 or 25 hours) into account, and Excel will convert any date/time to that internal format by default. Whether you want to use the ISO format or not becomes irrelevant if another piece of software you have to work with doesn't leave you a choice.
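To make that representation concrete, here is a minimal Python sketch. It assumes the Windows "1900 date system" (Excel also has a 1904 date system) and uses 1899-12-30 as the base date, a common workaround for Excel treating 1900 as a leap year, so it is only valid for serials from 61 (1900-03-01) onwards. The helper names are hypothetical. The second half illustrates the daylight saving problem on the day UK clocks went forward in 2023: the serial numbers say three hours elapsed between midnight and 03:00, whereas only two real hours did.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Excel's Windows "1900 date system". Starting from 1899-12-30
# compensates for Excel's fictitious 1900-02-29, so this is only valid for
# serial numbers >= 61 (1900-03-01 onwards).
EXCEL_BASE_DATE = datetime(1899, 12, 30)


def excel_serial_to_datetime(serial: float) -> datetime:
    """Integer part = days since the base date; fractional part = fraction
    of a 24-hour day. The result is naive: no time zone or UTC offset."""
    return EXCEL_BASE_DATE + timedelta(days=serial)


def datetime_to_excel_serial(dt: datetime) -> float:
    """Inverse conversion for a naive (wall-clock) datetime."""
    return (dt - EXCEL_BASE_DATE) / timedelta(days=1)


print(excel_serial_to_datetime(45000.75))  # 2023-03-15 18:00:00

# UK clocks went forward at 01:00 GMT on 2023-03-26 (GMT/UTC+0 -> BST/UTC+1).
# Fixed offsets are hard-coded here to avoid depending on a tz database.
midnight = datetime(2023, 3, 26, 0, 0)
three_am = datetime(2023, 3, 26, 3, 0)

# Wall-clock serials assume every day has 24 hours: 0.125 of a day = 3 hours.
serial_gap = datetime_to_excel_serial(three_am) - datetime_to_excel_serial(midnight)

# Actual elapsed time once the UTC offsets are attached: 2 hours.
real_gap = (three_am.replace(tzinfo=timezone(timedelta(hours=1)))
            - midnight.replace(tzinfo=timezone.utc))

print(serial_gap * 24, real_gap)  # 3.0 2:00:00
```

The naive datetimes mirror the fact that the serial number stores no offset: the offsets attached for `real_gap` are precisely the information the spreadsheet cell cannot hold.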

(1) I don't mean "programming procedures" here.

(2) Don't ask me why people don't use those standards in their daily lives either. I mean, YYYYmmdd is clear and dd/mm/YYYY is clear, but ordering a date by medium, small, then large granularity, like mm/dd/YYYY, just doesn't make sense :-)