I think it would be more helpful for the questioner to have a more differentiated answer, because I see several unexamined assumptions in the question and in some of the answers or comments.

The relative runtime of shifting and multiplication has nothing to do with C. By C, I do not mean a specific implementation, such as this or that version of GCC, but the language. I do not mean to take this to the absurd, but to use an extreme example for illustration: you could implement a completely standards-compliant C compiler in which multiplication takes an hour while shifting takes milliseconds, or the other way around. I am not aware of any such performance requirements in the C or C++ standards.

You may not care about this technicality. Your intention was probably just to test the relative performance of shifts versus multiplications, and you chose C because it is generally perceived as a low-level programming language, so one may expect its source code to translate into corresponding instructions more directly. Such questions are very common, and I think a good answer should point out that even in C, your source code does not translate into instructions as directly as you may think in a given instance. I have given you some possible compilation results below.

This is where the comments questioning the usefulness of this substitution in real-world software come in. You can see some of them under your question, such as the one from Eric Lippert. They are in line with the reaction you will generally get from more seasoned engineers to such optimizations. If you use binary shifts in production code as a blanket means of multiplying and dividing, people will most likely cringe at your code and have some degree of emotional reaction to it ("I have heard this nonsensical claim made about JavaScript for heaven's sake."), which may not make sense to novice programmers until they better understand the reasons behind it.

Those reasons are primarily a combination of the reduced readability and the futility of such optimizations, as you may have found out already by comparing their relative performance. However, I do not think people would react as strongly if the substitution of shift for multiplication were the only example of such optimizations. Questions like yours come up frequently, in various forms and contexts. I think what more senior engineers actually react to so strongly (at least I have at times) is the potential for a much wider range of harm when people employ such micro-optimizations liberally across the code base. If you work at a company like Microsoft on a large code base, you will spend a lot of time reading other engineers' source code or trying to locate certain code in it. It may even be your own code that you are trying to make sense of a few years from now, at some of the most inopportune times, such as when you are paged on a Friday night to fix a production outage just as you are about to head out for a night of fun with friends … If you spend that much time reading code, you will appreciate it being as readable as possible. Imagine reading your favorite novel, but the publisher has decided to release a new edition where they use abbrv. all ovr th plc bcs thy thnk it svs spc. That is akin to the reaction other engineers may have to your code if you sprinkle it with such optimizations. As other answers have pointed out, it is better to clearly state what you mean, that is, to use the operation that is semantically closest to the idea you are trying to express.
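To make the readability point concrete, here is a small sketch in C. The function names `bytes_obscure` and `bytes_clear` are hypothetical. Both compute the size in bytes of an array of `count` ints, but only the second says so; the first also silently bakes in the assumption that an `int` is 4 bytes wide.

```c
#include <assert.h>
#include <stddef.h>

/* Obscure: the reader has to work out that "<< 2" means "times the size
   of an int", and the result is only correct where sizeof(int) == 4. */
size_t bytes_obscure(size_t count) {
    assert(sizeof(int) == 4); /* the hidden assumption, made explicit */
    return count << 2;
}

/* Clear: states the intent directly. An optimizing compiler may still
   emit a shift (or an lea) for this on its own. */
size_t bytes_clear(size_t count) {
    return count * sizeof(int);
}
```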

Even in those environments, though, you may find yourself solving an interview question where you are expected to know this or some other equivalence. Knowing them is not bad, and a good engineer would be aware of the arithmetic effect of binary shifting. Note that I did not say that knowing this makes a good engineer, but that a good engineer would know it, in my opinion. In particular, you may still find some manager, usually towards the end of your interview loop, who will grin broadly at you in anticipation of the delight of revealing this smart engineering "trick" to you in a coding question, proving that he/she, too, used to be or still is one of the savvy engineers and not "just" a manager. In those situations, just try to look impressed and thank him/her for the enlightening interview.
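For reference, here is the equivalence itself as a sketch in C (the function names are hypothetical). It holds cleanly for unsigned values; for negative signed values, division and right shift round differently, which is one reason the "trick" is less general than it looks.

```c
#include <assert.h>
#include <limits.h>

/* Unsigned: x << k == x * 2^k (modulo 2^N) and x >> k == x / 2^k
   (rounded down). Shifting by the type's width or more is undefined in C. */
unsigned mul_pow2(unsigned x, unsigned k) {
    assert(k < sizeof(unsigned) * CHAR_BIT);
    return x << k;
}

unsigned div_pow2(unsigned x, unsigned k) {
    assert(k < sizeof(unsigned) * CHAR_BIT);
    return x >> k;
}

/* Signed: '/' truncates toward zero, so -7 / 2 == -3. Right-shifting a
   negative int is implementation-defined in C; GCC documents that it
   sign-extends, which rounds toward negative infinity (-7 >> 1 == -4). */
int signed_div2(int x) { return x / 2; }
int signed_shr1(int x) { return x >> 1; }
```

This rounding difference is also why a compiler typically needs a couple of extra instructions to divide a signed `int` by a power of two compared with an unsigned one.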

Why did you not see a speed difference in C? The most likely answer is that both operations compiled to the same assembly code. Since shifting left by 2 is the same as multiplying by 4, the two functions

```c
int shift(int i) { return i << 2; }

int multiply(int i) { return i * 4; }
```

can both compile into the following with optimizations enabled:

```asm
shift(int):
    lea eax, [0+rdi*4]
    ret
```

On GCC without optimizations, i.e. using the flag "-O0", you may get this:

```asm
shift(int):
    push rbp
    mov rbp, rsp
    mov DWORD PTR [rbp-4], edi
    mov eax, DWORD PTR [rbp-4]
    sal eax, 2
    pop rbp
    ret
multiply(int):
    push rbp
    mov rbp, rsp
    mov DWORD PTR [rbp-4], edi
    mov eax, DWORD PTR [rbp-4]
    sal eax, 2
    pop rbp
    ret
```

As you can see, passing "-O0" to GCC does not mean that it will not be somewhat smart about what kind of code it produces. In particular, notice that even in this case the compiler avoided the use of a multiply instruction. You can repeat the same experiment with shifts by other numbers and even multiplications by numbers that are not powers of two. Chances are that on your platform you will see a combination of shifts and additions, but no multiplications. It seems like a bit of a coincidence for the compiler to apparently avoid using multiplications in all those cases if multiplications and shifts really had the same cost, does it not? But I do not mean to supply supposition for proof, so let us move on.
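To illustrate what such a combination of shifts and additions computes, here is a hand-written version of multiplication by 10, a constant that is not a power of two. This is only a sketch of the idea; the exact instructions your compiler picks (for example an `lea`/`add` pair on x86-64) may differ, and the names `times10_shift_add` and `check_times10` are hypothetical.

```c
#include <assert.h>

/* Multiplication by a constant that is not a power of two. */
unsigned times10(unsigned x) {
    return x * 10u;
}

/* The same value via shifts and one addition: 10 * x == 8 * x + 2 * x.
   Unsigned arithmetic wraps modulo 2^N, so the identity holds for every x. */
unsigned times10_shift_add(unsigned x) {
    return (x << 3) + (x << 1);
}

/* Sanity check that both forms agree for a given input. */
void check_times10(unsigned x) {
    assert(times10(x) == times10_shift_add(x));
}
```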

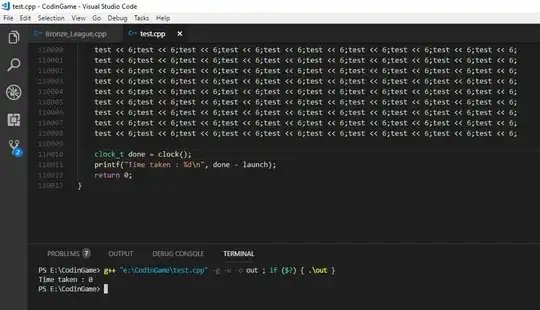

You could rerun your test with the above code and see if you notice a speed difference now. Even then, as the absence of a multiplication instruction shows, you are not testing shift versus multiply, but the code that GCC generated for the C shift and multiply operations with a certain set of flags in one particular instance. So, in another test you could edit the assembly code by hand and use an "imul" instruction in the "multiply" function instead.
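If you do rerun the test, a minimal timing harness might look like the sketch below. It rests on several assumptions and is not a rigorous benchmark: `op_shift`, `op_multiply`, and `time_op` are hypothetical names, `clock()` measures processor time with limited resolution, and calling through a function pointer adds overhead that may dwarf the difference you are trying to measure. Folding every result into a checksum makes it harder for the compiler to discard the loop.

```c
#include <assert.h>
#include <time.h>

/* The two operations under test; both compute 4 * x. */
unsigned op_shift(unsigned x) { return x << 2; }
unsigned op_multiply(unsigned x) { return x * 4u; }

/* Applies `op` to 0..n-1, folds the results into *checksum so the work is
   observable, and returns the elapsed processor time in seconds. */
double time_op(unsigned (*op)(unsigned), unsigned n, unsigned *checksum) {
    assert(op != NULL && checksum != NULL);
    unsigned acc = 0;
    clock_t start = clock();
    for (unsigned i = 0; i < n; ++i) {
        acc ^= op(i);
    }
    clock_t end = clock();
    *checksum = acc;
    return (double)(end - start) / CLOCKS_PER_SEC;
}
```

You could build this once with "-O0" and once with "-O2" and compare: the checksums of the two operations should always match, while the timings may not differ at all.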

If you wanted to defeat some of those smarts of the compiler, you could define more general shift and multiply functions that take the shift amount and the multiplier as parameters:

```c
int shift(int i, int j) { return i << j; }

int multiply(int i, int j) { return i * j; }
```

This may yield the following assembly code:

```asm
shift(int, int):
    mov eax, edi
    mov ecx, esi
    sal eax, cl
    ret
multiply(int, int):
    mov eax, edi
    imul eax, esi
    ret
```

Here we finally have, even at the highest optimization level of GCC 4.9, the assembly instructions you may have expected when you initially set out on your test. I think that in itself can be an important lesson in performance optimization: we can see how much substituting variables for concrete constants changed what the compiler was able to do. Micro-optimizations like the shift-multiply substitution are very low-level optimizations that a compiler can usually do easily by itself. Other optimizations that have a much greater impact on performance require an understanding of the intention of the code that is often not accessible to the compiler, or that it can only guess at with some heuristic. That is where you as a software engineer come in, and it typically does not involve substituting shifts for multiplications. It involves things such as avoiding a redundant call to a service that performs I/O and can block a process. If you go to your hard disk or, god forbid, to a remote database for some extra data you could have derived from what you already have in memory, the time you spend waiting outweighs the execution of a million instructions. Now, I think we have strayed a bit far from your original question, but I think pointing this out to a questioner, especially to someone who is just starting to get a grasp on the translation and execution of code, can be extremely important in order to address not just the question itself but many of its (possibly) underlying assumptions.

So, which one will be faster? I think actually testing the performance difference was a good approach. In general, it is easy to be surprised by the runtime performance of some code changes. Modern processors employ many techniques, and the interactions between pieces of software can be complex, too. Even if a certain change yields a performance benefit in one situation, I think it is dangerous to conclude that this type of change will always do so. In particular, it is dangerous to run such a test once, say "Okay, now I know which one's faster!", and then indiscriminately apply the same optimization to production code without repeating your measurements.
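One simple way to avoid trusting a single run is to repeat the measurement and look at a robust summary such as the median. Here is a minimal sketch; `median`, `median_runtime`, and the `MAX_RUNS` limit are hypothetical names chosen for illustration, and `clock()` is assumed to be an adequate (if coarse) timer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <time.h>

#define MAX_RUNS 64

/* qsort comparator for doubles. */
static int compare_doubles(const void *a, const void *b) {
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/* Median of n values; sorts the array in place. */
double median(double *values, size_t n) {
    assert(n > 0);
    qsort(values, n, sizeof values[0], compare_doubles);
    return (n % 2 == 1) ? values[n / 2]
                        : (values[n / 2 - 1] + values[n / 2]) / 2.0;
}

/* Runs `work` `runs` times, timing each run separately, and returns the
   median processor time in seconds. */
double median_runtime(void (*work)(void), size_t runs) {
    assert(work != NULL && runs > 0 && runs <= MAX_RUNS);
    double times[MAX_RUNS];
    for (size_t r = 0; r < runs; ++r) {
        clock_t start = clock();
        work();
        times[r] = (double)(clock() - start) / CLOCKS_PER_SEC;
    }
    return median(times, runs);
}
```

The median is less sensitive than the mean to the occasional run that is disturbed by other activity on the machine.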

So what if the shift is faster than the multiplication? There are certainly indications that this could be true. GCC, as you can see above, appears to consider it a good idea (even without optimization) to avoid direct multiplication in favor of other instructions. The Intel 64 and IA-32 Architectures Optimization Reference Manual will give you an idea of the relative cost of CPU instructions. Another resource, more focused on instruction latency and throughput, is http://www.agner.org/optimize/instruction_tables.pdf. Note that these are not a good predictor of absolute runtime, only of the performance of instructions relative to each other. In a tight loop, as your test is simulating, the "throughput" metric should be the most relevant, at least as long as the iterations do not depend on each other's results. It describes roughly how many cycles an execution unit is tied up for by a given instruction; Agner Fog's tables list it as the reciprocal throughput, the average number of clock cycles per instruction for a series of independent instructions of the same kind.
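The difference between latency and throughput can be sketched in code. In the hypothetical example below, the first loop is a single dependency chain, so each multiplication has to wait for the previous result and the loop tends to be bound by latency. The second spreads the same number of multiplications over four independent chains that the processor can overlap, which moves it closer to the throughput figure. The multiplier is passed as a parameter so the compiler cannot turn the multiplications into shifts or `lea`; whether you measure any difference still depends on your compiler and CPU, and an optimizer may rewrite either loop.

```c
#include <assert.h>

/* One dependency chain: every multiply needs the previous result. */
unsigned dependent_products(unsigned seed, unsigned m, unsigned n) {
    unsigned x = seed;
    for (unsigned i = 0; i < n; ++i) {
        x *= m;
    }
    return x;
}

/* Four independent chains doing n multiplies in total; the CPU can keep
   several of them in flight at the same time. */
unsigned independent_products(unsigned seed, unsigned m, unsigned n) {
    assert(n % 4 == 0); /* same total amount of work as the dependent loop */
    unsigned a = seed, b = seed, c = seed, d = seed;
    for (unsigned i = 0; i < n; i += 4) {
        a *= m;
        b *= m;
        c *= m;
        d *= m;
    }
    return a + b + c + d;
}
```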

So what if the shift is NOT faster than the multiplication? As I said above, modern architectures can be quite complex and things such as branch prediction, caching, pipelining, and parallel execution units can make it hard to predict the relative performance of two logically equivalent pieces of code at times. I really want to emphasize this, because this is where I am not happy with most answers to questions like these and with the camp of people outright saying that it is simply not true (anymore) that shifting is faster than multiplication.

No, as far as I am aware, we did not invent some secret engineering sauce in the 1970s or whenever that suddenly annulled the cost difference between a multiplication unit and a bit shifter. A general multiplication, in terms of logic gates and certainly in terms of logical operations, is still more complex than a shift with a barrel shifter in many scenarios and on many architectures. How this translates into overall runtime on a desktop computer may be a bit opaque. I do not know for sure how they are implemented in specific processors, but here is an explanation of multiplication: "Is integer multiplication really same speed as addition on modern CPU".

And here is an explanation of a barrel shifter. The documents I referenced earlier, the Intel optimization manual and Agner Fog's instruction tables, give another view of the relative cost of operations, by proxy of CPU instructions. The engineers over at Intel frequently seem to get similar questions, for example in the Intel Developer Zone forums thread "clock cycles for integer multiplication and addition in core 2 duo processor".
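To give a rough feel for the difference in complexity, here is a software model of both circuits in C. It is only an illustration under simplifying assumptions, not how any particular processor is wired, and the function names are hypothetical. A 32-bit barrel shifter needs just five fixed stages (shift by 1, 2, 4, 8, or 16, each enabled by one bit of the shift amount), while the textbook shift-and-add multiplier has to form and sum up to 32 partial products. Real multipliers sum those partial products in parallel adder trees, but that still takes considerably more circuitry than a shifter.

```c
#include <assert.h>
#include <stdint.h>

/* Barrel shifter model: five stages; stage s shifts by 2^s if bit s of
   `amount` is set, otherwise passes the value through unchanged. */
uint32_t barrel_shift_left(uint32_t x, unsigned amount) {
    assert(amount < 32);
    for (unsigned stage = 0; stage < 5; ++stage) {
        if (amount & (1u << stage)) {
            x <<= (1u << stage);
        }
    }
    return x;
}

/* Shift-and-add multiplier model: one partial product (a shifted copy of
   `a`) per set bit of `b`, all summed together. Wraps modulo 2^32. */
uint32_t shift_add_multiply(uint32_t a, uint32_t b) {
    uint32_t result = 0;
    for (unsigned bit = 0; bit < 32; ++bit) {
        if (b & (UINT32_C(1) << bit)) {
            result += a << bit;
        }
    }
    return result;
}
```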

Yes, in most real-life scenarios, and almost certainly in JavaScript, attempting to exploit this equivalence for performance's sake is probably a futile undertaking. However, even if we forced the use of multiplication instructions and then saw no difference in runtime, that would be due to the nature of the cost metric we used, not because there is no cost difference. End-to-end runtime is one metric, and if it is the only one we care about, all is well. But that does not mean that all cost differences between multiplication and shifting have simply disappeared. And I think it is certainly not a good idea to convey that idea, by implication or otherwise, to a questioner who is obviously just starting to get an idea of the factors involved in the runtime and cost of modern code. Engineering is always about trade-offs. Inquiring into and explaining the trade-offs modern processors have made to exhibit the execution times that we as users end up seeing may yield a more differentiated answer. And I think a more differentiated answer than "this is simply not true anymore" is warranted if we want to see fewer engineers check in micro-optimized code that obliterates readability, because it takes a more general understanding of the nature of such "optimizations" to spot their many incarnations than simply dismissing some specific instances as out of date.