I am having trouble visualising how the gap is closed between coarse-grained, high-level automated acceptance testing at the n-tier boundary and lower-level unit testing at task/sub-task scope.

My motivation is to be able to take any unit test in my system and trace backwards, through coverage, to the scenario(s) that exercise the same code as that unit test.

I am familiar with the ideas of BDD: an extension of TDD in which scenarios are written at the high-level solution design level in the Gherkin format and driven by tools such as JBehave and Cucumber.

Equally, I am familiar with the humble unit test at the utility or task level, and using xUnit to test through the various paths of the function.

Where I begin to come unstuck, then, is when I try to imagine these two disciplines applied together in an enterprise setting.

Perhaps I am going wrong because you tend to use one approach or the other?

In an attempt to articulate my thoughts so far, let's assert that I am right to believe that both are used.

In an agile world, user stories are broken down into tasks and sub-tasks. In an enterprise setting, where the problems are large and there are many developers per story, those tasks and sub-tasks are distributed amongst a team of people with specialist problem-solving skills.

Some developers, for example, may specialise in mid-tier development, building a controller layer and working downwards from it. Others may specialise in UI development and work upwards from the controller layer.

Developers may therefore share implementation of a single string of tasks which, when glued back together again, will implement the story.

In this case, it seems to me that cycles of TDD at the task and sub-task level are still necessary, to ensure that the efforts of these developers collaborate correctly within one layer and from one layer to the next (mocking collaborators).
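To make this concrete, here is a minimal sketch of what I imagine a task-level test looking like, written in Python for brevity (the same shape would apply with any xUnit framework and mocking library). The names `OrderController`, `submit` and `place_order` are hypothetical and purely illustrative; the service layer below the controller is mocked, so only the controller's own logic and its contract with that layer are tested.

```python
from unittest.mock import Mock


class OrderController:
    """Hypothetical mid-tier controller that depends on a service layer below it."""

    def __init__(self, service):
        self.service = service

    def submit(self, item, quantity):
        # Validation owned by this layer; the service is never called on bad input.
        if quantity <= 0:
            return {"status": "rejected", "reason": "quantity must be positive"}
        order_id = self.service.place_order(item, quantity)
        return {"status": "accepted", "order_id": order_id}


def test_submit_delegates_to_service():
    # The collaborator is mocked, so this test pins down the agreed contract:
    # the controller calls place_order(item, quantity) and wraps the returned id.
    service = Mock()
    service.place_order.return_value = 42
    controller = OrderController(service)

    result = controller.submit("widget", 3)

    service.place_order.assert_called_once_with("widget", 3)
    assert result == {"status": "accepted", "order_id": 42}


def test_submit_rejects_non_positive_quantity():
    service = Mock()
    controller = OrderController(service)

    result = controller.submit("widget", 0)

    service.place_order.assert_not_called()
    assert result["status"] == "rejected"
```

The developer working on the service layer would, I assume, write the mirror-image test for `place_order` against a mocked repository, so that each layer can be developed in parallel against the agreed contract.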

Working in this way implies a certain amount of high level solution design up-front, so contracts between layers are agreed and respected. Perhaps this is articulated as a sequence diagram and perhaps this emerges during the task breakdown session for the story.

Yet, on the other hand, we have the value of defining the BDD acceptance tests at a higher level to confirm that all of the blocks work together as expected - a form of super integration test, I suppose.
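As a rough illustration of what I mean by that higher-level test, here is a self-contained sketch in plain Python rather than real Gherkin with JBehave or Cucumber step definitions. All class and function names are hypothetical. The point is that the scenario wires the real layers together with no mocks (an in-memory repository stands in for the database, which is my own assumption) and checks only the outcome visible at the boundary.

```python
class InMemoryOrderRepository:
    """Hypothetical persistence layer, kept in memory for the sketch."""

    def __init__(self):
        self.orders = {}

    def save(self, item, quantity):
        order_id = len(self.orders) + 1
        self.orders[order_id] = (item, quantity)
        return order_id


class OrderService:
    """Hypothetical service layer sitting between controller and repository."""

    def __init__(self, repository):
        self.repository = repository

    def place_order(self, item, quantity):
        return self.repository.save(item, quantity)


class OrderController:
    """Hypothetical controller layer, the entry point the scenario drives."""

    def __init__(self, service):
        self.service = service

    def submit(self, item, quantity):
        if quantity <= 0:
            return {"status": "rejected", "reason": "quantity must be positive"}
        return {"status": "accepted", "order_id": self.service.place_order(item, quantity)}


def scenario_customer_places_an_order():
    # Given an empty order book and the real layers wired together
    repository = InMemoryOrderRepository()
    controller = OrderController(OrderService(repository))

    # When the customer submits an order for 3 widgets
    response = controller.submit("widget", 3)

    # Then the order is accepted and stored
    assert response["status"] == "accepted"
    assert repository.orders[response["order_id"]] == ("widget", 3)
    return response
```

If coverage were recorded separately for this scenario and for each unit test, I imagine the overlap in covered lines is what would let me trace a unit test back to the scenario(s) that need it.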

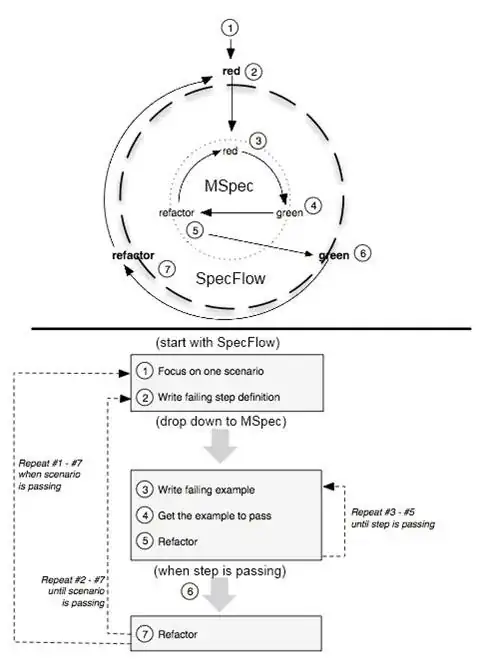

I suppose the answer I am looking for here, aside from a simple yes/no to the question of "both", is an explanation or worked example of how a team of developers and I start out with a single n-tier scenario and end up with an implementation in an enterprise setting. In that example, we would break the tasks up by layer, share them amongst ourselves, and work in parallel to turn the BDD scenario test from red to green. All the while, we would ensure that the code we write is written only because the scenario calls for it, and that at a fine-grained level it is appropriately unit tested, following cycles of TDD at the task and sub-task level as we go.

That is, of course, if my understanding is correct?