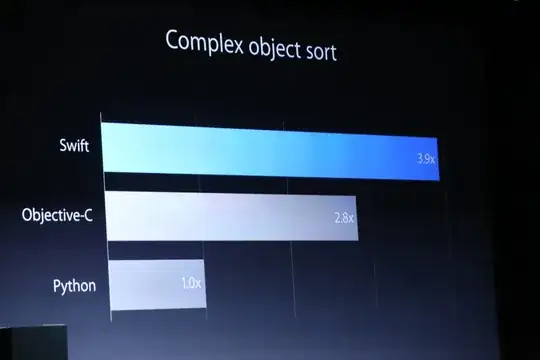

First, IMO, comparing with Python is nearly meaningless. Only the comparison with Objective-C is meaningful.

- How can a new programming language be so much faster?

Objective-C is a slow language. (Only the C part is fast, but that's because it's C.) It has never been extremely fast. It was just fast enough for their (Apple's) purposes, and faster than their older versions. And it was slow because...

- Do the Objective-C results come from a bad compiler, or is there something less efficient in Objective-C than in Swift?

Objective-C guarantees that every method is dynamically dispatched. There is no static dispatch at all. That makes it impossible to optimize an Objective-C program further. Well, maybe JIT technology could be of some help, but AFAIK, Apple really hates unpredictable performance characteristics and object lifetimes. I don't think they have adopted any JIT stuff. Swift doesn't have such a dynamic dispatch guarantee unless you add a special attribute for Objective-C compatibility.
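To make the dispatch difference concrete, here is a minimal Swift sketch. The type and function names (`Shape`, `Square`, `FixedSquare`, `totalAreaDynamic`, `totalAreaStatic`) are made up for illustration. It only shows the language-level distinction: an overridable class method is normally called through the method table, while a method on a `final` class can be resolved at compile time and inlined. It is not a benchmark, and the optimizer may devirtualize the first case too when it can prove the concrete type. On Apple platforms, as far as I know, marking a method `@objc dynamic` is what forces Objective-C style message dispatch; that is left out here so the sketch does not depend on the Objective-C runtime.

```swift
// Overridable method: a call through a `Shape` reference is normally
// resolved at run time via the class's method table.
class Shape {
    func area() -> Double { return 0 }
}

class Square: Shape {
    let side: Double
    init(side: Double) { self.side = side }
    override func area() -> Double { return side * side }
}

// `final` class: nothing can override `area()`, so the compiler knows the
// exact function at compile time and is free to call it directly or inline it.
final class FixedSquare {
    let side: Double
    init(side: Double) { self.side = side }
    func area() -> Double { return side * side }
}

func totalAreaDynamic(_ shapes: [Shape]) -> Double {
    var sum = 0.0
    for s in shapes { sum += s.area() }   // dynamically dispatched call
    return sum
}

func totalAreaStatic(_ squares: [FixedSquare]) -> Double {
    var sum = 0.0
    for s in squares { sum += s.area() }  // statically dispatched call
    return sum
}

let dynamicTotal = totalAreaDynamic([Square(side: 2), Square(side: 3)])
let staticTotal = totalAreaStatic([FixedSquare(side: 2), FixedSquare(side: 3)])
print(dynamicTotal, staticTotal)
```

Both calls compute the same result; the only difference is how much the compiler is allowed to know about the callee ahead of time.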

- How would you explain a 40% performance increase? I understand that garbage collection/automated reference control might produce some additional overhead, but this much?

GC or RC doesn't matter here. Swift also primarily employs RC. There is no GC, and there won't be unless there's some huge architectural leap in GC technology. (IMO, that means never.) I believe Swift has a lot more room for static optimization, especially for low-level encryption algorithms, as they usually rely on heavy numeric calculations, and this is a huge win for statically dispatched languages.

Actually I was surprised because 40% seems too small. I expected far more. Anyway, this is the initial release, and I think optimization was not the primary concern. Swift is not even feature-complete! They will make it better.

Update

Some people keep bugging me, arguing that GC technology is superior. The stuff below is arguable and just my very biased opinion, but I think I have to say it to avoid this unnecessary argument.

I know what conservative/tracing/generational/incremental/parallel/realtime GCs are and how they differ. I think most readers already know that too. I also agree that GC is very nice in some fields, and shows high throughput in some cases.

Anyway, I doubt the claim that GC throughput is always better than RC. Most of the overhead of RC comes from the ref-counting operations and the locking that protects the ref-count variable. And RC implementations usually provide a way to avoid counting operations. In Objective-C there's `__unsafe_unretained`, and in Swift (though it's still somewhat unclear to me) there's the `unowned` stuff. If the cost of the ref-counting operations is not acceptable, you can opt out of them selectively by using these mechanisms. Theoretically, we can simulate an almost unique-ownership scenario by using non-retaining references very aggressively to avoid RC overhead. I also expect the compiler can eliminate some obviously unnecessary RC operations automatically.
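A small sketch of the non-retaining reference idea in Swift. The types here (`EventLog`, `Scene`, `Node`) are hypothetical and exist only to make lifetimes observable. The back-reference from `Node` to `Scene` is `unowned`, so it never takes a strong retain on the scene and cannot keep it alive. One caveat, as far as I understand it: plain `unowned` still does some bookkeeping so that misuse traps instead of corrupting memory, and `unowned(unsafe)` is the closer analogue of Objective-C's `__unsafe_unretained`. The sketch assumes the scene always outlives its nodes.

```swift
// Hypothetical helper that records events so lifetimes can be observed.
final class EventLog {
    var entries: [String] = []
}

final class Scene {
    let name: String
    let events: EventLog
    var nodes: [Node] = []   // strong references: the scene owns its nodes
    init(name: String, events: EventLog) {
        self.name = name
        self.events = events
    }
    deinit { events.entries.append("Scene deinit") }
}

final class Node {
    // Non-retaining back-reference: no strong retain, so no retain cycle.
    // Assumption: the scene outlives the node; using it afterwards would trap.
    unowned let scene: Scene
    let events: EventLog
    init(scene: Scene, events: EventLog) {
        self.scene = scene
        self.events = events
    }
    deinit { events.entries.append("Node deinit") }
}

func buildAndDrop(events: EventLog) {
    let scene = Scene(name: "main", events: events)
    scene.nodes.append(Node(scene: scene, events: events))
    events.entries.append("built \(scene.nodes[0].scene.name)")
}   // the only strong reference to the scene is gone after this point

let events = EventLog()
buildAndDrop(events: events)
print(events.entries)   // both deinit entries are present once the call returns
```

If the back-reference were a plain strong `let scene: Scene`, the scene and node would retain each other and neither `deinit` would run.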

Unlike in an RC system, AFAIK, partially opting out of reference types is not an option in a GC system.

I know there are many released graphics applications and games that use GC-based systems, and I also know most of them suffer from a lack of determinism, not only in performance characteristics but also in object lifetime management. Unity is mostly written in C++, but the tiny C# part causes all the weird performance issues. HTML hybrid apps still suffer from unpredictable spikes on any system. Being widely used doesn't mean something is superior. It just means it's easy and popular among people who don't have many options.

Update 2

Again, to avoid unnecessary argument or discussion, I'll add some more details.

@Asik provided an interesting opinion about GC spikes: we can regard the value-type-everywhere approach as a way to opt out of GC. This is pretty attractive, and even doable on some systems (with a purely functional approach, for example). I agree that this is nice in theory. But in practice it has several issues. The biggest problem is that partial application of this trick does not provide true spike-free characteristics.

That's because a latency issue is always an all-or-nothing problem. If you have one spike frame every 10 seconds (= 600 frames), then the whole system is obviously failing. This is not about how much better or worse. It's just pass or fail. (Or less than 0.0001%.) Then where is the source of the GC spike? It's the bad distribution of GC load. And that's because the GC is fundamentally nondeterministic. If you make any garbage, it will activate the GC, and a spike will happen eventually. Of course, in an ideal world where the GC load is always ideally distributed, this won't happen, but I am living in the real world rather than an imaginary ideal world.

Then if you want to avoid spikes, you have to remove all the ref-types from the whole system. But that's hard, insane, and even impossible due to unremovable parts such as the .NET core system and libraries. Just using a non-GC system is far easier.

Unlike GC, RC is fundamentally deterministic, and you don't have to use this insane optimization (purely value types only) just to avoid spikes. What you have to do is track down and optimize the part that causes the spike. In RC systems, a spike is a local algorithm issue, but in GC systems, spikes are always a global system issue.
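To illustrate what "deterministic" means here, a rough Swift sketch with hypothetical names (`Counter`, `FrameBuffer`, `renderFrames`). Each simulated frame allocates a temporary object, and under reference counting it is freed as soon as its last reference goes away, before the next frame allocates. So garbage never piles up waiting for a later collection pass. The 600 frames simply mirror the 10-second example above.

```swift
// Hypothetical bookkeeping object: tracks how many buffers are alive.
final class Counter {
    var live = 0
    var peak = 0
}

final class FrameBuffer {
    let counter: Counter
    var pixels: [UInt8]
    init(size: Int, counter: Counter) {
        self.counter = counter
        self.pixels = [UInt8](repeating: 0, count: size)
        counter.live += 1
        counter.peak = max(counter.peak, counter.live)
    }
    // Runs when the last strong reference is released, not at some later
    // collector-chosen time.
    deinit { counter.live -= 1 }
}

func renderFrames(_ frameCount: Int, counter: Counter) {
    for _ in 0..<frameCount {
        // Per-frame temporary: released before the next iteration allocates.
        let buffer = FrameBuffer(size: 1024, counter: counter)
        buffer.pixels[0] = 1
    }
}

let counter = Counter()
renderFrames(600, counter: counter)
print(counter.live, counter.peak)
```

Because at most one buffer is ever alive, the cost of freeing is paid inside the frame that created the garbage. If a frame is slow, the cause is in that frame's own code, which is the "local algorithm issue" point.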

I think my answer has gone too far off-topic, and is mostly just a repetition of existing discussions. If you really want to claim some superiority/inferiority/alternative or anything else about GC/RC, there are plenty of existing discussions on this site and on Stack Overflow, and you can continue the fight there.