The "problem" boils down to how files are written out to the storage medium: byte by byte, one after another.

In its most basic representation, a file is nothing more than a series of bytes written out to the disk (or whatever storage medium you're using). So your original string `aaabddd` looks like this:

| Address | Value |
|---------|-------|
| 0x00    | `a`   |
| 0x01    | `a`   |
| 0x02    | `a`   |
| 0x03    | `b`   |
| 0x04    | `d`   |
| 0x05    | `d`   |
| 0x06    | `d`   |

Now say you want to insert `C` at position 0x04. That requires shifting the bytes at 0x04 through 0x06 down by one position (to 0x05 through 0x07) to make room for the new value. If you don't, you'll overwrite the `d` currently at 0x04, which is not what you want.

| Address | Value |
|---------|-------|
| 0x00    | `a`   |
| 0x01    | `a`   |
| 0x02    | `a`   |
| 0x03    | `b`   |
| 0x04    | `C`   |
| 0x05    | `d`   |
| 0x06    | `d`   |
| 0x07    | `d`   |
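To make the shift concrete, here is a minimal in-memory sketch that treats a Python `bytearray` as a stand-in for the file. The helper name `insert_byte` is hypothetical. The important detail is that the copy runs from the end backwards, so each byte is moved before the slot it came from gets overwritten.

```python
def insert_byte(buf: bytearray, pos: int, value: int) -> None:
    """Insert one byte at pos by shifting every later byte one slot toward the end."""
    buf.append(0)  # grow by one byte so there is room at the end
    # Walk backwards from the last slot so no byte is overwritten before it is copied.
    for i in range(len(buf) - 1, pos, -1):
        buf[i] = buf[i - 1]
    buf[pos] = value  # the slot at pos is now free for the new value


data = bytearray(b"aaabddd")
insert_byte(data, 0x04, ord("C"))
print(data)  # bytearray(b'aaabCddd')
```

Note that the loop touches every byte after the insertion point, which is exactly the "rewrite the tail" cost described here.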

So the reason you have to rewrite the tail of the file after an insertion is that there is no free space between the existing bytes to accept the new value. Every byte after the insertion point has to move one position toward the end; otherwise you would overwrite what was there.
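Against a real file, the same idea looks roughly like the sketch below, assuming an ordinary file opened in read/write binary mode with Python's built-in `open`. The function name `insert_into_file` is hypothetical, and it reads the whole tail into memory for simplicity.

```python
import os
import tempfile


def insert_into_file(path: str, offset: int, data: bytes) -> None:
    """Insert data at offset by rewriting every byte after the insertion point."""
    with open(path, "r+b") as f:
        f.seek(offset)
        tail = f.read()   # everything from the insertion point to end of file
        f.seek(offset)
        f.write(data)     # the new bytes take over the tail's old starting position
        f.write(tail)     # the whole tail is written again, len(data) bytes further on


with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"aaabddd")
    path = tmp.name

insert_into_file(path, 0x04, b"C")
with open(path, "rb") as f:
    result = f.read()
os.remove(path)
print(result)  # b'aaabCddd'
```

For large files you would copy the tail in chunks (starting from the end) rather than holding it all in memory, but the amount of data rewritten is the same.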

Addendum 1: If you wanted to replace the `b` with `C`, you would not need to rewrite the tail of the file. Replacing a value with one of the same size doesn't require a rewrite; you simply overwrite those bytes in place.
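A same-size replacement is just a seek and a write. This is a minimal sketch using Python's built-in file API; `overwrite_in_place` is a hypothetical helper name.

```python
import os
import tempfile


def overwrite_in_place(path: str, offset: int, data: bytes) -> None:
    """Replace len(data) bytes at offset; the file size and the tail are untouched."""
    with open(path, "r+b") as f:
        f.seek(offset)
        f.write(data)  # only these bytes change, nothing after them moves


with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"aaabddd")
    path = tmp.name

overwrite_in_place(path, 0x03, b"C")  # swap the `b` at 0x03 for `C`
with open(path, "rb") as f:
    result = f.read()
os.remove(path)
print(result)  # b'aaaCddd'
```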

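Addendum 2: If you wanted to replace the string `ab` with `C`, you would need to rewrite the rest of the file, because replacing two bytes with one creates a gap. Every byte after it has to shift one position toward the start, and the file has to shrink by one byte.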
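Here is a minimal sketch of the shrinking case, again with Python's built-in file API and a hypothetical helper `replace_range`. The tail is rewritten one byte earlier, and `truncate()` then drops the leftover byte at the end.

```python
import os
import tempfile


def replace_range(path: str, offset: int, old_len: int, data: bytes) -> None:
    """Replace old_len bytes at offset with data, shifting the tail to close the gap."""
    with open(path, "r+b") as f:
        f.seek(offset + old_len)
        tail = f.read()   # everything after the bytes being replaced
        f.seek(offset)
        f.write(data)
        f.write(tail)     # the tail moves toward the start by old_len - len(data)
        f.truncate()      # cut the file at the current position to drop stale bytes


with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"aaabddd")
    path = tmp.name

replace_range(path, 0x02, 2, b"C")  # `ab` occupies 0x02 and 0x03
with open(path, "rb") as f:
    result = f.read()
os.remove(path)
print(result)  # b'aaCddd'
```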

Addendum 3: Block-level constructs were created to make large files easier to handle. Instead of having to find 1 MB of contiguous space for your file, you only need to find 1 MB worth of free blocks, wherever they happen to be on the disk.
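A toy model shows the difference. This sketch assumes a "disk" represented as a list of free/used flags, one per block; both function names are hypothetical and no real filesystem allocator works this simply.

```python
from __future__ import annotations


def find_contiguous(free: list[bool], n: int) -> int | None:
    """Return the first block index of a run of n adjacent free blocks, if any."""
    run = 0
    for i, is_free in enumerate(free):
        run = run + 1 if is_free else 0
        if run == n:
            return i - n + 1
    return None


def allocate_blocks(free: list[bool], n: int) -> list[int] | None:
    """Grab any n free blocks, adjacent or not, and mark them as used."""
    chosen = [i for i, is_free in enumerate(free) if is_free][:n]
    if len(chosen) < n:
        return None
    for i in chosen:
        free[i] = False
    return chosen


# A fragmented toy disk of 8 blocks: 5 are free, but no 3 free blocks are adjacent.
free = [True, False, True, True, False, True, False, True]

contiguous_start = find_contiguous(free, 3)
chosen = allocate_blocks(free, 3)
print(contiguous_start)  # None: a contiguous layout has nowhere to put the file
print(chosen)            # [0, 2, 3]: a block-based layout still fits it
```

The file then only has to remember its block list in order (here `[0, 2, 3]`) to be read back as one continuous series of bytes.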

In theory, you could construct a filesystem that did byte-by-byte linking similar to what blocks provide. Then you could insert a new byte by updating the next/previous pointers at the appropriate point. I would hazard a guess that the performance would be pretty poor, though: every byte would carry a pointer much larger than itself, and finding a given offset would mean walking the chain from the start.
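A tiny linked-list model illustrates that trade-off. This is a hypothetical sketch, not any real filesystem's layout, and it uses only a "next" pointer for brevity. Inserting is a single pointer update, but reaching offset 0x03 first requires walking three links.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class ByteNode:
    """One byte of file content plus a link to the next byte (hypothetical layout)."""
    value: int
    next: Optional[ByteNode] = None


def from_bytes(data: bytes) -> Optional[ByteNode]:
    """Build the chain back to front so each node can point at its successor."""
    head: Optional[ByteNode] = None
    for b in reversed(data):
        head = ByteNode(b, head)
    return head


def node_at(head: ByteNode, index: int) -> ByteNode:
    """Find the node at a byte offset: O(n), because the chain must be walked."""
    node = head
    for _ in range(index):
        if node.next is None:
            raise IndexError("offset past end of file")
        node = node.next
    return node


def insert_after(node: ByteNode, value: int) -> None:
    """Insert a byte after node: O(1), only one pointer is rewritten."""
    node.next = ByteNode(value, node.next)


def to_bytes(head: Optional[ByteNode]) -> bytes:
    out = bytearray()
    node = head
    while node is not None:
        out.append(node.value)
        node = node.next
    return bytes(out)


head = from_bytes(b"aaabddd")
insert_after(node_at(head, 0x03), ord("C"))  # the new byte lands at 0x04
print(to_bytes(head))  # b'aaabCddd'
```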

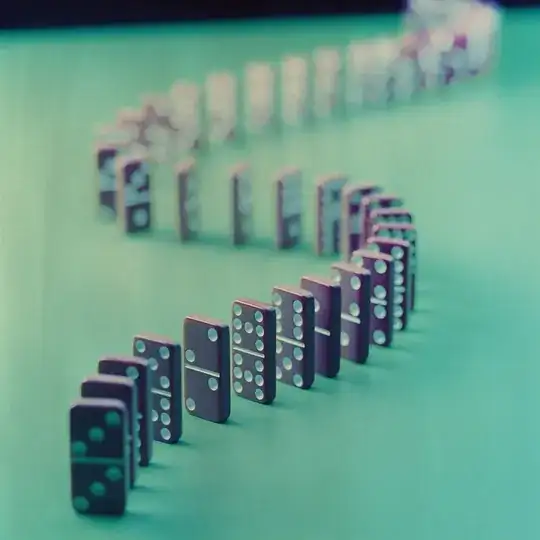

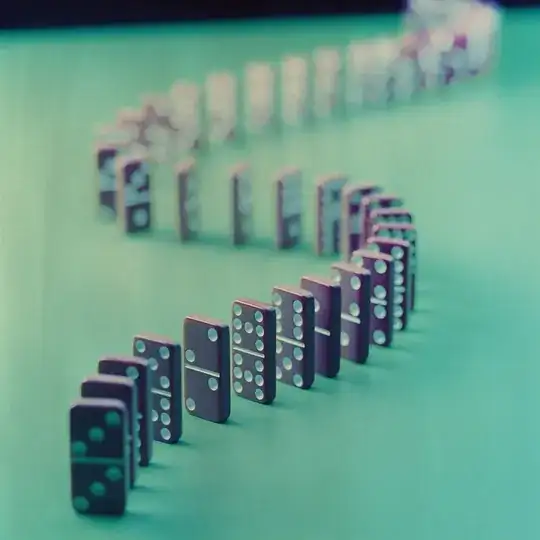

As Grandmaster B suggested, picturing a row of dominoes standing on end is a good way to visualize how the file is laid out.

You can't insert another domino into the line without knocking everything over. You have to make space for the new domino by moving the others down the line, and moving dominoes down the line is the equivalent of rewriting the tail of the file after the insertion point.