A short introduction to this question: I have been using TDD, and more recently BDD, for over a year now. I use techniques like mocking to write my tests more efficiently. Recently I started a personal project, a small money-management program for myself. Since there was no legacy code, it was the perfect project to start with TDD. Unfortunately, I did not enjoy TDD as much as I expected. It spoiled my fun so much that I gave up on the project.

What was the problem? Well, I used a TDD-like approach, letting the tests and requirements evolve the design of the program. The problem was that more than half of the development time went into writing and refactoring tests. In the end I did not want to implement any more features, because each one meant refactoring and writing too many tests.

At work I deal with a lot of legacy code. There I write more and more integration and acceptance tests and fewer unit tests. This does not seem to be a bad approach, since bugs are mostly detected by the acceptance and integration tests.

My idea was that, in the end, I could write more integration and acceptance tests than unit tests. As I said, unit tests are not better at detecting bugs than integration and acceptance tests. Unit tests are also good for design. Since I used to write a lot of them, my classes are always designed to be easily testable. Additionally, letting the tests and requirements guide the design leads to a better design in most cases. The last advantage of unit tests is that they are faster, but I have written enough integration tests to know that they can be nearly as fast as unit tests.

While searching the web, I found very similar ideas mentioned here and there. What do you think of this idea?

Edit

In response to the questions, here is one example where the design was good, but I needed a huge refactoring for the next requirement:

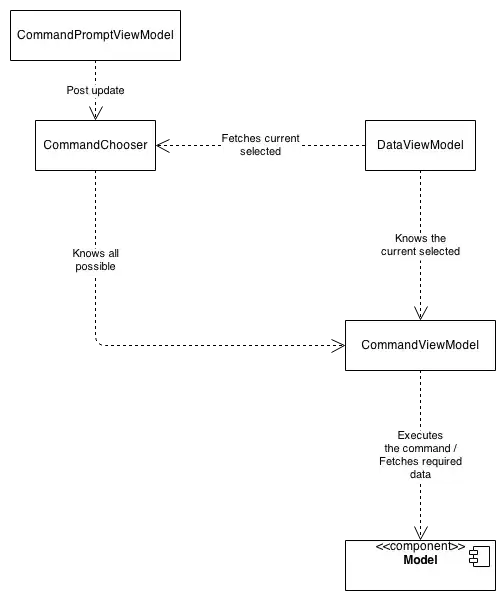

At first there were some requirements to execute certain commands. I wrote an extendable command parser, which parsed commands from a kind of command prompt and called the correct one on the model. The results were represented in a view model class.

There was nothing wrong here. All classes were independent of each other, and I could easily add new commands and show new data.
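To make the first design concrete, here is a minimal Python sketch. The original code was not posted, so everything in it is hypothetical: the `Model`, `CommandParser` and `DataViewModel` names, the `add` command, and storing amounts as a plain list of floats.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Model:
    """Hypothetical money-management model: a list of amounts."""
    entries: List[float] = field(default_factory=list)

    def add(self, amount: float) -> None:
        self.entries.append(amount)

    def balance(self) -> float:
        return sum(self.entries)


Handler = Callable[[Model, List[str]], None]


class CommandParser:
    """Extendable parser: parses a prompt line and calls the matching command on the model."""

    def __init__(self, model: Model) -> None:
        self.model = model
        self.handlers: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        # New commands are added by registration, without changing the parser itself.
        self.handlers[name] = handler

    def execute(self, line: str) -> None:
        parts = line.split()
        if not parts:
            raise ValueError("empty command")
        name, args = parts[0], parts[1:]
        if name not in self.handlers:
            raise KeyError(f"unknown command: {name}")
        self.handlers[name](self.model, args)


class DataViewModel:
    """A single view model for all results; it only reads the model."""

    def __init__(self, model: Model) -> None:
        self.model = model

    def text(self) -> str:
        return f"balance: {self.model.balance():.2f}"


model = Model()
parser = CommandParser(model)
parser.register("add", lambda m, args: m.add(float(args[0])))
view = DataViewModel(model)

parser.execute("add 12.5")
parser.execute("add -2.5")
print(view.text())  # balance: 10.00
```

Parser, model and view model each have one job here, which is why this design was easy to extend with new commands.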

The next requirement was that every command should have its own view representation, a kind of preview of the command's result. I redesigned the program to achieve a better design for the new requirement.

This was also good, because now every command has its own view model and therefore its own preview.

The thing is that the command parser was changed to use token-based parsing and was stripped of its ability to execute the commands. Every command got its own view model, and the data view model only knows the current command view model, which in turn knows the data that has to be shown.
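A matching sketch of the redesign, again with hypothetical names: the parser is reduced to a `tokenize` function, each command gets its own view model with a `preview()`, and the data view model only holds the current command view model. `AddViewModel`, `preview()`, `confirm()` and the factory dictionary are assumptions made for illustration, not the actual program's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Protocol


@dataclass
class Model:
    """Hypothetical model: a list of amounts."""
    entries: List[float] = field(default_factory=list)

    def balance(self) -> float:
        return sum(self.entries)


def tokenize(line: str) -> List[str]:
    """The parser now only produces tokens; it no longer executes commands."""
    return line.split()


class CommandViewModel(Protocol):
    def preview(self) -> str: ...
    def execute(self) -> None: ...


class AddViewModel:
    """Each command has its own view model, and therefore its own preview."""

    def __init__(self, model: Model, args: List[str]) -> None:
        self.model = model
        self.amount = float(args[0])

    def preview(self) -> str:
        after = self.model.balance() + self.amount
        return f"add {self.amount:.2f} -> new balance {after:.2f}"

    def execute(self) -> None:
        self.model.entries.append(self.amount)


Factory = Callable[[Model, List[str]], CommandViewModel]


class DataViewModel:
    """Only knows the current command view model, which knows what to show."""

    def __init__(self, model: Model, factories: Dict[str, Factory]) -> None:
        self.model = model
        self.factories = factories
        self.current: Optional[CommandViewModel] = None

    def on_input(self, line: str) -> None:
        tokens = tokenize(line)
        factory = self.factories.get(tokens[0]) if tokens else None
        self.current = factory(self.model, tokens[1:]) if factory else None

    def text(self) -> str:
        return self.current.preview() if self.current else ""

    def confirm(self) -> None:
        # Execution moved out of the parser into the command view model.
        if self.current is not None:
            self.current.execute()
            self.current = None


model = Model()
data = DataViewModel(model, {"add": AddViewModel})
data.on_input("add 5")
print(data.text())  # add 5.00 -> new balance 5.00
data.confirm()
print(model.balance())  # 5.0
```

Compared with the first sketch, nearly every class boundary moved, so unit tests written against those boundaries could not survive unchanged.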

All I wanted to know at this point was whether the new design broke any existing requirement. I did not have to change ANY of my acceptance tests. I had to refactor or delete nearly EVERY unit test, which was a huge pile of work.
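The acceptance tests survived because they only drive the program through its outermost interface. A hypothetical illustration: the same acceptance test runs unchanged against a class in the style of the old design and one in the style of the new design. `App`, `submit()` and `balance()` are invented for this sketch, not taken from the actual program.

```python
from typing import Callable, List, Optional, Protocol


class App(Protocol):
    """The only surface the acceptance test is allowed to use."""
    def submit(self, line: str) -> None: ...
    def balance(self) -> float: ...


class OldDesignApp:
    """Old style: the parser executes the command on the model immediately."""

    def __init__(self) -> None:
        self.entries: List[float] = []

    def submit(self, line: str) -> None:
        name, amount = line.split()
        if name == "add":
            self.entries.append(float(amount))

    def balance(self) -> float:
        return sum(self.entries)


class NewDesignApp:
    """New style: tokens create a pending command with a preview; submit confirms it."""

    def __init__(self) -> None:
        self.entries: List[float] = []
        self.pending: Optional[float] = None

    def preview(self) -> str:
        return "" if self.pending is None else f"add {self.pending:.2f}"

    def submit(self, line: str) -> None:
        tokens = line.split()
        if tokens and tokens[0] == "add":
            self.pending = float(tokens[1])
        if self.pending is not None:
            self.entries.append(self.pending)
            self.pending = None

    def balance(self) -> float:
        return sum(self.entries)


def acceptance_test_add_updates_balance(make_app: Callable[[], App]) -> None:
    # Written once against public behaviour; never touches internals.
    app = make_app()
    app.submit("add 100")
    app.submit("add -40")
    assert app.balance() == 60.0


for make_app in (OldDesignApp, NewDesignApp):
    acceptance_test_add_updates_balance(make_app)
print("acceptance test passed for both designs")
```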

What I wanted to show here is a common situation that happened often during development. There was no problem with either the old or the new design; they just changed naturally with the requirements. As I understand it, this is one advantage of TDD: the design evolves.

Conclusion

Thanks for all the answers and discussions. Summarizing this discussion, I have come up with an approach that I will try out in my next project.

- First of all, I write all tests before implementing anything, as I always have.

- For requirements, I first write some acceptance tests that test the whole program. Then I write some integration tests for the components where I need to implement the requirement. If a component works closely with another component to implement the requirement, I would also write some integration tests that test both components together. Last but not least, if I have to write an algorithm or any other class with a high number of permutations, e.g. a serializer, I would write unit tests for those particular classes. All other classes are not covered by any unit tests.

- For bugs, the process can be simplified. Normally a bug is caused by one or two components. In this case I would write one integration test for those components that reproduces the bug. If it is related to an algorithm, I would only write a unit test. If it is not easy to find the component where the bug occurs, I would write an acceptance test to locate it; this should be the exception.
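A minimal Python sketch of how the proposed split could look, assuming a hypothetical `Transaction` record, a JSON serializer as the "high permutation" class, and a parser plus ledger as two closely cooperating components. All names are invented for illustration, and plain `assert` functions stand in for whatever test framework is actually used.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Transaction:
    description: str
    amount_cents: int


# Class with many input permutations: covered by unit tests.
def serialize(transactions: List[Transaction]) -> str:
    return json.dumps([asdict(t) for t in transactions])


def deserialize(text: str) -> List[Transaction]:
    return [Transaction(**item) for item in json.loads(text)]


# Two components that work closely together: covered by an integration test.
def parse(line: str) -> Transaction:
    """Parses 'add <cents> <description...>'."""
    _, cents, *words = line.split()
    return Transaction(" ".join(words), int(cents))


class Ledger:
    def __init__(self) -> None:
        self.transactions: List[Transaction] = []

    def add(self, transaction: Transaction) -> None:
        self.transactions.append(transaction)

    def balance_cents(self) -> int:
        return sum(t.amount_cents for t in self.transactions)


def test_serializer_round_trip() -> None:
    # Unit test: enumerate tricky inputs for the serializer in isolation.
    cases = [
        [],
        [Transaction("rent", -80000)],
        [Transaction("", 0), Transaction("café & \"quotes\"", 1)],
    ]
    for case in cases:
        assert deserialize(serialize(case)) == case


def test_parser_and_ledger_together() -> None:
    # Integration test: real parser and real ledger, no mocks in between.
    ledger = Ledger()
    for line in ["add 150000 monthly salary", "add -4599 groceries"]:
        ledger.add(parse(line))
    assert ledger.balance_cents() == 145401
    assert ledger.transactions[0].description == "monthly salary"


test_serializer_round_trip()
test_parser_and_ledger_together()
print("all tests passed")
```

A regression test for a bug in the parser/ledger pair would follow the shape of the integration test: feed the failing input through both real components and assert the corrected result.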