Jaron Lanier has coined a 50-cent word, "phenotropic," to cast a net around a set of ideas: a computational model for building programs with certain identifiable characteristics.

The word means:

> A mechanism for component interaction that uses pattern recognition or artificial cognition in place of function invocation or message passing.

The idea comes largely from biology. Your eye interfaces with the world not through a function like `See(byte[] coneData)`, but through a surface called the retina. The distinction is not trivial: a conventional program reads the bytes in `coneData` one at a time, whereas your brain processes all of those inputs simultaneously.

Lanier claims that the surface-style interface is more fault tolerant, and it is: a single flipped bit in `coneData` can break a conventional system, while a surface degrades gracefully. He also claims that it enables pattern matching and a host of other capabilities that are normally difficult for computers, which it does.
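
To make the fault-tolerance point concrete, here is a minimal Python sketch contrasting the two styles of interface. The template names, the 8-bit encoding, and the functions `read_exact` and `read_by_pattern` are all hypothetical; nearest-template matching by Hamming distance stands in for "pattern recognition" and is far simpler than anything Lanier describes.

```python
# Hypothetical example: names and encodings are invented for illustration.
# Two known input patterns, each encoded as an 8-bit "surface".
TEMPLATES = {
    "circle": 0b00111100,
    "cross": 0b10011001,
}


def read_exact(bits: int) -> str:
    """Function-invocation style: the input must match a template exactly."""
    for name, pattern in TEMPLATES.items():
        if bits == pattern:
            return name
    raise ValueError(f"unrecognized input {bits:08b}")


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def read_by_pattern(bits: int) -> str:
    """Pattern-recognition style: return the closest known template."""
    return min(TEMPLATES, key=lambda name: hamming(bits, TEMPLATES[name]))


noisy = TEMPLATES["circle"] ^ 0b00000100  # flip a single bit

print(read_by_pattern(noisy))  # circle
try:
    read_exact(noisy)
except ValueError as err:
    print(err)  # unrecognized input 00111000
```

The exact-match reader fails outright on one flipped bit, while the nearest-template reader still answers "circle" because the noisy input is one bit away from the circle and three bits away from the cross.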

The quintessential "phenotropic" mechanism in a computer system is the artificial neural network (ANN). It takes a "surface" as input rather than a defined interface. Other techniques achieve some measure of pattern recognition, but the neural network is the one most closely aligned with biology. Making an ANN is easy; getting it to perform the task you want reliably is difficult, for several reasons:

- What do the input and output "surfaces" look like? Are they stable, or do they vary in size over time?

- How do you get the network structure right?

- How do you train the network?

- How do you get adequate performance characteristics?
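
As an illustration of how easy it is to make an ANN, the following sketch trains the simplest possible network, a single-layer perceptron, on a hypothetical 3x3 pixel surface. The shapes, names, and training loop are assumptions chosen for brevity; the surface size and network structure are fixed by hand, which sidesteps the first two questions above rather than answering them.

```python
# Hypothetical 3x3 "surface" patterns; 1 = lit cell, 0 = dark cell.
T_SHAPE = [1, 1, 1,
           0, 1, 0,
           0, 1, 0]
L_SHAPE = [1, 0, 0,
           1, 0, 0,
           1, 1, 1]


def activation(weights, bias, surface):
    """Weighted sum of every cell on the surface, plus a bias."""
    return sum(w * x for w, x in zip(weights, surface)) + bias


def train_perceptron(examples, epochs=20):
    """Classic perceptron rule: nudge weights toward misclassified examples."""
    weights, bias = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for surface, label in examples:  # label is +1 or -1
            if label * activation(weights, bias, surface) <= 0:
                weights = [w + label * x for w, x in zip(weights, surface)]
                bias += label
                mistakes += 1
        if mistakes == 0:  # every example is classified correctly
            break
    return weights, bias


def classify(weights, bias, surface):
    return "T" if activation(weights, bias, surface) > 0 else "L"


weights, bias = train_perceptron([(T_SHAPE, +1), (L_SHAPE, -1)])

noisy_t = T_SHAPE.copy()
noisy_t[8] = 1  # one stray lit cell in the bottom-right corner
noisy_l = L_SHAPE.copy()
noisy_l[4] = 1  # one stray lit cell in the center

print(classify(weights, bias, noisy_t), classify(weights, bias, noisy_l))  # T L
```

Trained on just the two clean shapes, it still classifies the two one-cell-noisy variants correctly. The structure question bites quickly, though: a single perceptron cannot learn to separate every horizontal bar from every vertical bar on the same grid, because those two classes are not linearly separable.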

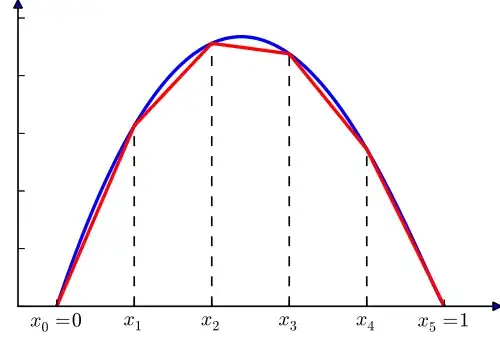

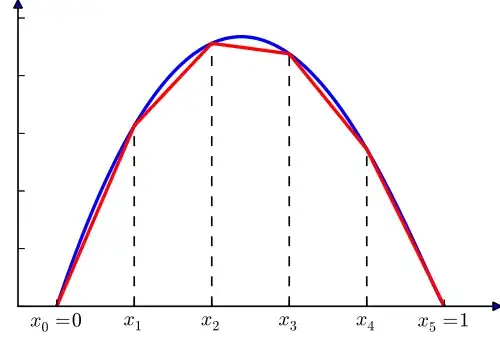

If you are willing to part with biology, you can dispense with the biological model (which attempts to simulate the operation of actual biological neurons) and build a network that is more closely allied with the actual "neurons" of a digital computer system (logic gates). These networks are called Adaptive Logic Networks (ALN). The way they work is by creating a series of linear functions that approximate a curve. The process looks something like this:

... where the X axis represents some input to the ALN and the Y axis represents the corresponding output. Now imagine the number of linear functions expanding as needed to improve accuracy, imagine that process occurring across an arbitrary number of dimensions n, and imagine it implemented entirely with AND and OR logic gates: that gives you some sense of what an ALN looks like.
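
A rough Python sketch of the idea, assuming the real-valued reading of an ALN in which an AND gate becomes MIN and an OR gate becomes MAX over its inputs. The class names and the `grow` helper are hypothetical, and the pieces are hand-placed tangent lines of a known function rather than learned from data, so this shows the shape of the representation, not ALN training. Because sin is concave on [0, π], the MIN of its tangent lines never falls below the curve, and adding a tangent at the worst-fit point drives the error there to zero.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Linear:
    slope: float
    intercept: float

    def __call__(self, x: float) -> float:
        return self.slope * x + self.intercept


@dataclass
class Min:  # real-valued stand-in for an AND gate (assumption)
    children: list = field(default_factory=list)

    def __call__(self, x: float) -> float:
        return min(child(x) for child in self.children)


@dataclass
class Max:  # real-valued stand-in for an OR gate (assumption)
    children: list = field(default_factory=list)

    def __call__(self, x: float) -> float:
        return max(child(x) for child in self.children)


def tangent(f, df, a):
    """Line touching f at x = a."""
    return Linear(df(a), f(a) - df(a) * a)


def grow(f, df, xs, tol):
    """Hypothetical helper: add a tangent piece at the worst-fit sample
    until every sample in xs is within tol of f."""
    node = Min([tangent(f, df, xs[len(xs) // 2])])
    while True:
        errors = [abs(node(x) - f(x)) for x in xs]
        worst = max(range(len(xs)), key=errors.__getitem__)
        if errors[worst] <= tol:
            return node
        node.children.append(tangent(f, df, xs[worst]))


xs = [math.pi * i / 200 for i in range(201)]
for tol in (0.1, 0.01, 0.001):
    aln = grow(math.sin, math.cos, xs, tol)
    print(f"tol={tol}: {len(aln.children)} linear pieces")

# A convex shape uses MAX instead: |x| as the OR of two lines.
abs_node = Max([Linear(-1.0, 0.0), Linear(1.0, 0.0)])
print(abs_node(-3.0))  # 3.0
```

The printed piece count rises as the tolerance tightens, which mirrors the "expanding as needed" step described above; a real ALN nests MIN and MAX nodes so it can fit curves that are neither purely concave nor purely convex.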

ALNs have several very interesting characteristics:

- They are relatively easy to train.

- They are very predictable, i.e., slight changes in input do not produce wild swings in output.

- They are lightning fast, because they are built in the shape of a logic tree and operate much like a binary search.

- Their internal architecture evolves naturally from the training set.
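
The list compares ALN evaluation to a binary search. In one dimension that comparison can be made concrete: a continuous piecewise-linear function stored as sorted breakpoints can locate the active piece with a binary search, and its output can never change faster than its steepest piece, which is one precise sense of "predictable." The sketch below is a hypothetical 1-D analogue built on Python's `bisect` module, not the tree-based evaluation a real ALN implementation would use across many dimensions.

```python
import bisect
import math


class PiecewiseLinear:
    """Continuous piecewise-linear function stored as sorted knots (x_i, y_i).
    Hypothetical illustration; needs at least two knots."""

    def __init__(self, knots):
        knots = sorted(knots)
        self.xs = [x for x, _ in knots]
        self.ys = [y for _, y in knots]

    def __call__(self, x: float) -> float:
        # Binary search for the segment containing x: O(log n) comparisons.
        i = bisect.bisect_right(self.xs, x) - 1
        # Clamp so inputs outside the knots extend the first or last segment.
        i = max(0, min(i, len(self.xs) - 2))
        x0, x1 = self.xs[i], self.xs[i + 1]
        y0, y1 = self.ys[i], self.ys[i + 1]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

    def max_slope(self) -> float:
        """Steepest segment: bounds how fast the output can change."""
        return max(abs((y1 - y0) / (x1 - x0))
                   for x0, x1, y0, y1 in zip(self.xs, self.xs[1:],
                                             self.ys, self.ys[1:]))


knots = [(k * math.pi / 32, math.sin(k * math.pi / 32)) for k in range(33)]
f = PiecewiseLinear(knots)

# Predictability: a small input change moves the output by at most
# max_slope() times that change.
x, dx = 1.0, 1e-3
change = abs(f(x + dx) - f(x))
print(change <= f.max_slope() * dx)  # True
```

Holding the breakpoints sorted is the design choice that makes lookup logarithmic; the same bound `max_slope() * dx` holds for any pair of inputs, which is why slight input changes cannot cause wild output swings.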

So a phenotropic program would look something like this: it would have a "surface" for input, a predictable architecture and behavior, and tolerance for noisy inputs.

Further Reading

- An Introduction to Adaptive Logic Networks, With an Application to Audit Risk Assessment

- "Object Oriented" vs "Message Oriented," by Alan Kay