It all depends on what you consider to be the "transmission time". If you mean the time elapsed between the calls to send() and recv(), that depends on several factors, and packet size might not be very relevant to the calculation. In that case you could, in some (but I repeat, not all!) cases, say that whatever you do (TCP, UDP, big or small packets) little changes.

Otherwise, it could actually be beneficial in some scenarios to use smaller packets in order to decrease the total transmission time. For example: if you have a high error probability, you need to retransmit, and you use very large packets, then the retransmission time very soon begins to dominate the calculation. Increasing packet size increases both the probability of an error and its cost (more bytes to resend), thus increasing the transmission time. In the extreme case of a single packet, the probability of that one packet being damaged approaches unity, and you may need to retransmit it one thousand times: the time increase is now 100000%. With packets one thousand times smaller, ten of them are damaged, you retransmit only those, and the overall time increases by as little as one percent (depending on the retransmission protocol).
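A minimal Python sketch of the retransmission argument, assuming each packet is damaged independently with a fixed probability and that only damaged packets are resent (selective retransmission). The probabilities in the usage example, 0.999 for the single huge packet (about a thousand attempts on average) and 0.01 for each small one (ten damaged out of a thousand), are just the illustrative figures from the paragraph above; the function names are hypothetical.

```python
import random


def simulate_transfer(n_packets: int, p_fail: float, seed: int = 0) -> float:
    """Send n_packets equal-sized packets, resending only the damaged ones,
    and return the total wire time relative to one error-free pass (1.0)."""
    rng = random.Random(seed)
    pending = n_packets
    transmissions = 0
    while pending:
        transmissions += pending
        # Each packet sent in this round is damaged independently with prob p_fail.
        pending = sum(1 for _ in range(pending) if rng.random() < p_fail)
    return transmissions / n_packets


def expected_relative_time(p_fail: float) -> float:
    """Closed form: attempts per packet are geometric with mean 1 / (1 - p)."""
    if not 0.0 <= p_fail < 1.0:
        raise ValueError("p_fail must be in [0, 1)")
    return 1.0 / (1.0 - p_fail)


if __name__ == "__main__":
    print("one huge packet, expected:", expected_relative_time(0.999))
    print("1000 small packets, simulated:", simulate_transfer(1000, 0.01))
```

The closed form follows from the number of attempts per packet being geometrically distributed; the simulation shows the same thing round by round.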

You also have to consider the overhead. Every packet of B payload bytes you send carries O bytes of overhead. If we simplify and assume that a packet's physical transmission time is a linear function of its size (it is not), to transmit N bytes you need to send N/B frames, which means (N/B)*(B+O) = N(1 + O/B) bytes, and a transmission time of kN(1 + O/B), where k is the time per byte. As you can see, the smaller B is, the longer the time. A hypothetical packet of size zero would carry no payload data at all, and would therefore take infinite time: the exact opposite of the above scenario.
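The overhead model can be written down directly. This is a sketch under the same linear-cost simplification; the 40-byte overhead used below is a hypothetical figure (roughly an IPv4 header plus a TCP header without options), and k defaults to one time unit per byte.

```python
import math


def transmission_time(n_bytes: float, payload: float, overhead: float, k: float = 1.0) -> float:
    """k * (N / B) * (B + O) = k * N * (1 + O / B): time to send N payload bytes
    in frames of B payload bytes plus O overhead bytes each."""
    if payload <= 0:
        return math.inf  # no payload per frame: the transfer never completes
    frames = n_bytes / payload  # treated as continuous, as in the text
    return k * frames * (payload + overhead)


if __name__ == "__main__":
    for b in (100, 500, 1460, 9000):
        print(b, transmission_time(1_000_000, b, 40))
```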

Only at the most abstract level (no overhead, and a constant transmission time k per byte) is the answer yes: the transmission time is k(N/B)*B, which is kN and does not depend on B. And, again yes, the first "accident" this model encounters is probably a discontinuity when the packet size hits the MTU.
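To make the MTU discontinuity concrete, here is a sketch that counts the IP-layer bytes needed to carry one UDP datagram. It assumes IPv4 with 20-byte headers and no options, an 8-byte UDP header and an MTU of 1500 bytes, and it ignores link-layer framing; all of these constants are assumptions for illustration. Once the datagram no longer fits in one packet it is fragmented, and every fragment pays for another IP header.

```python
import math

MTU = 1500       # assumed Ethernet-sized IP MTU
IP_HEADER = 20   # IPv4 header without options (assumption)
UDP_HEADER = 8


def wire_bytes(payload: int) -> int:
    """IP-layer bytes needed to carry one UDP datagram of `payload` bytes,
    fragmenting it if it does not fit in a single MTU-sized IP packet."""
    # 1480 bytes of data per fragment; already a multiple of 8, as IPv4 requires
    per_fragment = MTU - IP_HEADER
    fragments = math.ceil((payload + UDP_HEADER) / per_fragment)
    return payload + UDP_HEADER + fragments * IP_HEADER


def efficiency(payload: int) -> float:
    """Fraction of the IP-layer bytes that are application payload."""
    return payload / wire_bytes(payload)


if __name__ == "__main__":
    for b in (1471, 1472, 1473, 1474):
        print(b, wire_bytes(b), round(efficiency(b), 4))
```

With these assumptions a 1472-byte payload fits exactly in 1500 bytes, while 1473 bytes needs two fragments and 1521 bytes on the wire, so efficiency drops abruptly at that point.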

In general, however, that is not a faithful representation of what is really taking place (think overhead; think errors; think lower-layer overheads, plural, possibly on a route of several hops), and packet size might matter a lot.

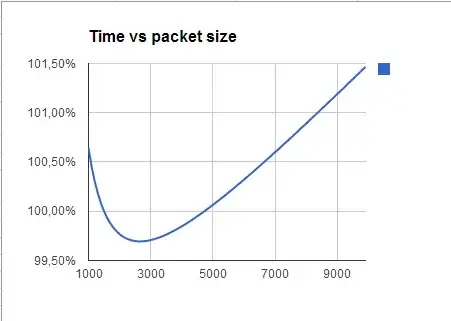

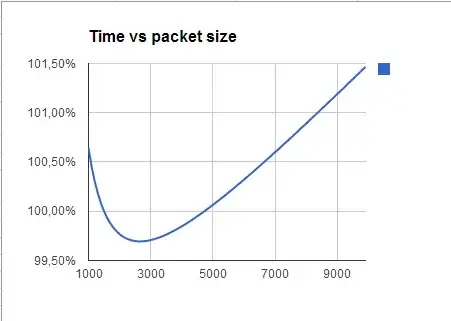

Often, you will have to experiment in order to find the actual best value, because simple heuristics ("the smaller the better", "the bigger the better", etc.) will not yield the best possible result.
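One way to start experimenting is a small timing harness like the following Python sketch, which pushes a fixed amount of data through a local `socket.socketpair()` while varying the size of each write. Note the assumptions: the pair is local (an AF_UNIX pair on Unix platforms), the kernel rather than the application decides how writes become packets, and there are no real link errors, so the numbers only reflect per-call overhead. On a real network you would run the same loop over a real connection. `time_transfer` is a hypothetical helper name.

```python
import socket
import threading
import time


def time_transfer(total_bytes: int, chunk_size: int) -> float:
    """Seconds taken to push total_bytes through a local socket pair when
    the sender writes chunk_size bytes per sendall() call."""
    sender, receiver = socket.socketpair()
    with sender, receiver:
        payload = b"x" * chunk_size
        received = 0

        def drain() -> None:
            # Reader thread: consume everything so the sender never stalls forever.
            nonlocal received
            while received < total_bytes:
                data = receiver.recv(65536)
                if not data:
                    break
                received += len(data)

        reader = threading.Thread(target=drain)
        start = time.perf_counter()
        reader.start()
        sent = 0
        while sent < total_bytes:
            n = min(chunk_size, total_bytes - sent)
            sender.sendall(payload[:n])
            sent += n
        reader.join()
        elapsed = time.perf_counter() - start

    if received != total_bytes:
        raise RuntimeError("short read")
    return elapsed


if __name__ == "__main__":
    for size in (64, 512, 1460, 8192, 65536):
        print(size, round(time_transfer(1_000_000, size), 4))
```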

A simulated test

I'm on an XP laptop, so netstat -snap tcp gives me some data to work with regarding the error rate. I don't have statistics on packet sizes, so I'm assuming they're all around 1500 bytes, even though of course they aren't.

I assume that my stats give the actual error rate, that a packet is lost if any one of its bytes is corrupted, and that byte errors are independent of each other. The byte-level error probability Pb is then related to the packet error probability Pp by the rule "for a packet to succeed, all its bytes must come through intact", i.e.,

1 - Pp = (1 - Pb)^PacketSize

This lets me derive Pb as 1-exp(ln(1 - Pp)/PacketSize). At that point I can recalculate the packet error probability for any other PacketSize, and from that, determine how long it will take to transmit the same payload.
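The calculation can be sketched in Python as follows. The 0.1% packet error rate at 1500 bytes and the 40-byte per-packet overhead in the usage example are hypothetical placeholders, not the figures from the netstat output mentioned above. `relative_cost` combines the overhead term (B + O)/B with the mean number of attempts 1/(1 - Pp) for a packet of B + O bytes, assuming only damaged packets are resent; `log1p` and `expm1` stand in for ln and exp so that very small probabilities are not rounded away.

```python
import math


def byte_error_prob(packet_error: float, packet_size: int) -> float:
    """Invert 1 - Pp = (1 - Pb) ** S for Pb, i.e. Pb = 1 - exp(ln(1 - Pp) / S)."""
    return -math.expm1(math.log1p(-packet_error) / packet_size)


def packet_error_prob(byte_error: float, packet_size: int) -> float:
    """Pp = 1 - (1 - Pb) ** S: probability that at least one byte is corrupted."""
    return -math.expm1(packet_size * math.log1p(-byte_error))


def relative_cost(payload: int, overhead: int, byte_error: float) -> float:
    """Expected wire bytes per payload byte: framing overhead (B + O) / B
    times the mean number of attempts 1 / (1 - Pp) for a (B + O)-byte packet."""
    pp = packet_error_prob(byte_error, payload + overhead)
    return (payload + overhead) / payload / (1.0 - pp)


if __name__ == "__main__":
    # HYPOTHETICAL input: 0.1% of ~1500-byte packets damaged.
    pb = byte_error_prob(0.001, 1500)
    for b in (100, 500, 1460, 4000, 9000):
        print(b, round(relative_cost(b, 40, pb), 5))
```

Multiplying `relative_cost` by kN gives the expected transmission time for the whole payload under these assumptions.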

In theory I could claim to be right, since it turns out that size does matter. But that would be nitpicking: I was actually wrong, because in this scenario at least, size matters very, very little:

So, packet size may still influence application design, but certainly not because of data transmission times.