Tail call optimization is present in many languages and compilers. In this situation, the compiler recognizes a function of the form:

int foo(n) {

...

return bar(n);

}

Here, the language is able to recognize that the result being returned is the result from another function and change a function call with a new stack frame into a jump.

Realize that the classic factorial method:

int factorial(n) {

if(n == 0) return 1;

if(n == 1) return 1;

return n * factorial(n - 1);

}

is not tail call optimizatable because of the inspection necessary on the return. (Example source code and compiled output)

To make this tail call optimizeable,

int _fact(int n, int acc) {

if(n == 1) return acc;

return _fact(n - 1, acc * n);

}

int factorial(int n) {

if(n == 0) return 1;

return _fact(n, 1);

}

Compiling this code with gcc -O2 -S fact.c (the -O2 is necessary to enable the optimization in the compiler, but with more optimizations of -O3 it gets hard for a human to read...)

_fact(int, int):

cmpl $1, %edi

movl %esi, %eax

je .L2

.L3:

imull %edi, %eax

subl $1, %edi

cmpl $1, %edi

jne .L3

.L2:

rep ret

(Example source code and compiled output)

One can see in segment .L3, the jne rather than a call (which does a subroutine call with a new stack frame).

Please note this was done with C. Tail call optimization in Java is hard and depends on the JVM implementation (that said, I haven't seen any that do it, because it is hard and implications of the required Java security model requiring stack frames - which is what TCO avoids) -- tail-recursion + java and tail-recursion + optimization are good tag sets to browse. You may find other JVM languages are able to optimize tail recursion better (try clojure (which requires the recur to tail call optimize), or scala).

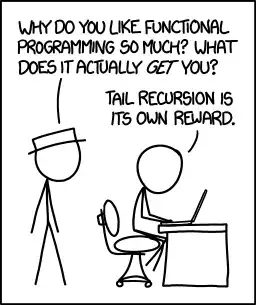

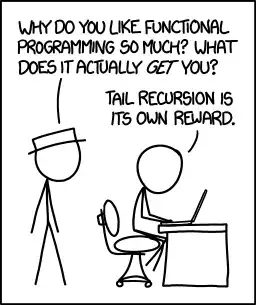

That said,

There is a certain joy in knowing that you wrote something right - in the ideal way that it can be done.

And now, I'm going to get some scotch and put on some German electronica...

To the general question of "methods to avoid a stack overflow in a recursive algorithm"...

Another approach is to include a recursion counter. This is more for detecting infinite loops caused by situations beyond one's control (and poor coding).

The recursion counter takes the form of

int foo(arg, counter) {

if(counter > RECURSION_MAX) { return -1; }

...

return foo(arg, counter + 1);

}

Each time you make a call, you increment the counter. If the counter gets too big, you error out (in here, just a return of -1, though in other languages you may prefer to throw an exception). The idea is to prevent worse things from happening (out of memory errors) when doing a recursion that is much deeper than expected and likely an infinite loop.

In theory, you shouldn't need this. In practice, I've seen poorly written code that has hit this because of a plethora of small errors and bad coding practices (multithreaded concurrency issues where something changes something outside the method that makes another thread go into an infinite loop of recursive calls).

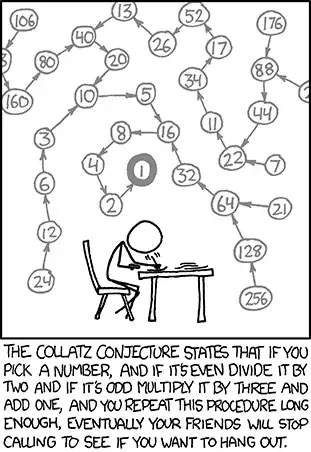

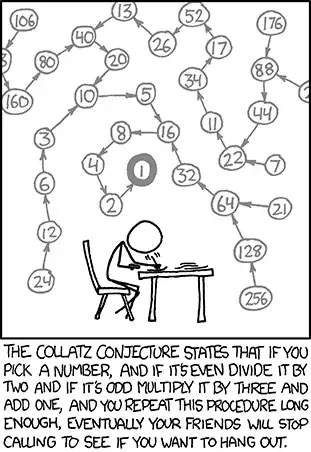

Use the right algorithm and solve the right problem. Specifically for the Collatz Conjecture, it appears that you are trying to solve it in the xkcd way:

You are starting at a number and doing a tree traversal. This rapidly leads to a very large search space. A quick run to calculate the number of iterations for the correct answer results in about 500 steps. This shouldn't be an issue for recursion with a small stack frame.

While knowing the recursive solution is not a bad thing, one should also realize that many times the iterative solution is better. A number of ways of approaching converting a recursive algorithm to an iterative one can be seen on Stack Overflow at Way to go from recursion to iteration.