Is memory management in programming becoming an irrelevant concern?

Memory management (or control) is actually the primary reason I'm using C and C++.

Memory is comparatively cheap now.

Not fast memory. We're still looking at a small number of registers, something like 32KB data cache for L1 on i7, 256KB for L2, and 2MB for L3/core. That said:

If we do not speak in terms of target platforms with strict limits on working memory (i.e. embedded systems and the like), should memory usage be a concern when picking a general purpose language today?

Memory usage on a general level, maybe not. I'm a little bit impractical in that I don't like the idea of a notepad that takes, say, 50 megabytes of DRAM and hundreds of megabytes of hard disk space, even though I have that to spare and abundantly more. I've been around for a long time, and it just feels weird and kind of icky to me to see such a simple application take so much memory for what should be doable with kilobytes. That said, I might be able to live with such a thing if it was still nice and responsive.

The reason memory management is important to me in my field is not to reduce memory usage so much in general. Hundreds of megabytes of memory use won't necessarily slow an application down in any non-trivial way if none of that memory is frequently accessed (ex: only upon a button click or some other form of user input, which is extremely infrequent unless you are talking about Korean Starcraft players who might click a button a million times a second).

The reason it's important in my field is to get memory tight and close together that is very frequently accessed (ex: being looped over every single frame) in those critical paths. We don't want to have a cache miss every time we access just one out of a million elements that need to all be accessed in a loop every single frame. When we move memory down the hierarchy from slow memory to fast memory in large chunks, say 64 byte cache lines, it's really helpful if those 64 bytes all contain relevant data, if we can fit multiple elements worth of data into those 64 bytes, and if our access patterns are such that we use it all before the data is evicted.
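To make the cache-line point concrete, here is a minimal sketch that counts how many 64-byte lines a per-frame loop has to pull in for two layouts of the same data. The names `FatVertex`, `HotVertex`, `lines_spanned`, and `sum_x` are made up for illustration, the 64-byte line size is an assumption (common on x86, not universal), and the sizes assume a typical platform with 4-byte floats.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed cache line size; typical for current x86 CPUs, not guaranteed.
constexpr std::size_t kCacheLine = 64;

// Hypothetical layout that mixes hot and cold fields in one element.
struct FatVertex {
    float x, y, z;    // hot: read every frame
    char  label[52];  // cold: only touched on user input
};

// Hypothetical layout holding only what the per-frame loop reads.
struct HotVertex {
    float x, y, z;
};

// Cache lines spanned by a contiguous, line-aligned array of `count` elements.
inline std::size_t lines_spanned(std::size_t count, std::size_t element_size) {
    assert(element_size > 0);
    return (count * element_size + kCacheLine - 1) / kCacheLine;
}

// Stand-in for a per-frame loop: every byte fetched here is data we use.
inline float sum_x(const std::vector<HotVertex>& verts) {
    float total = 0.0f;
    for (const HotVertex& v : verts) total += v.x;
    return total;
}
```

With these layouts, a million `FatVertex` elements span a million cache lines (one element per line, most of it unused by the loop), while a million `HotVertex` elements span 187,500 lines, with five elements sharing each line. The cold `label` data would live in a separate parallel array that is only touched when needed.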

That frequently-accessed data for the million elements might only span 20 megabytes even though we have gigabytes. It still makes a world of difference in frame rates looping over that data every single frame drawn if the memory is tight and close together to minimize cache misses, and that's where the memory management/control is so useful. Simple visual example on a sphere with a few million vertices:

The above is actually slower than my mutable version since it's testing a persistent data structure representation of a mesh, but with that aside, I used to struggle to achieve such frame rates even on half that data (admittedly the hardware has gotten faster since my struggles) because I hadn't gotten the hang of minimizing cache misses and memory use for mesh data. Meshes are some of the trickiest data structures I've dealt with in this regard because they store so much interdependent data that has to stay in sync, like polygons, edges, vertices, as many texture maps as the user wants to attach, bone weights, color maps, selection sets, morph targets, edge weights, polygon materials, etc.

I've designed and implemented a number of mesh systems in the past couple of decades, and their speed was often roughly inversely proportional to their memory use. Even though I'm working with so, so much more memory than when I started, my new mesh systems are over 10 times faster than my first design (almost 20 years ago), to a large degree because they use around 1/10th of the memory. The newest version even uses indexed compression to cram in as much data as possible, and in spite of the processing overhead of the decompression, the compression actually improved performance because, again, we have so little precious fast memory. I can now fit a million-polygon mesh with texture coordinates, edge creasing, material assignments, etc., along with a spatial index for it, in about 30 megabytes. My oldest version used several hundred megabytes, and even testing my oldest one today on my i7, it's many, many times slower, and those several hundred MB of memory use didn't even include a spatial index.
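As a rough illustration of the general idea of trading a bit of decoding work for a smaller footprint, here is a sketch of one simple form of index-based storage: each distinct value is stored once in a small palette, and each polygon keeps only a one-byte index into it. This is not the scheme used in the mesh system described above; `Material`, `IndexedMaterials`, and the 256-entry palette limit are all assumptions made up for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical per-polygon attribute; 16 bytes with 4-byte floats.
struct Material {
    float r, g, b, roughness;
    bool operator==(const Material& o) const {
        return r == o.r && g == o.g && b == o.b && roughness == o.roughness;
    }
};

// Stores each distinct Material once and one 8-bit index per polygon.
class IndexedMaterials {
public:
    void push_back(const Material& m) {
        // Linear search is fine for a palette this small.
        std::size_t slot = 0;
        while (slot < palette_.size() && !(palette_[slot] == m)) ++slot;
        if (slot == palette_.size()) {
            assert(palette_.size() < 256 && "palette full for 8-bit indices");
            palette_.push_back(m);
        }
        indices_.push_back(static_cast<std::uint8_t>(slot));
    }

    // "Decompression" is one extra indirection through the palette.
    const Material& operator[](std::size_t polygon) const {
        return palette_[indices_[polygon]];
    }

    std::size_t size() const { return indices_.size(); }
    std::size_t palette_size() const { return palette_.size(); }

    // Bytes of element data held, ignoring vector bookkeeping and spare capacity.
    std::size_t payload_bytes() const {
        return palette_.size() * sizeof(Material) + indices_.size();
    }

private:
    std::vector<Material>     palette_;
    std::vector<std::uint8_t> indices_;
};
```

When many polygons share a handful of materials, the per-polygon cost drops from `sizeof(Material)` to a single byte, so far more polygons fit in each cache line. The lookup costs an extra indirection, but the palette is small enough that it tends to stay in cache.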

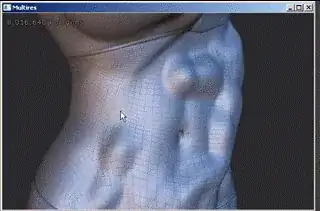

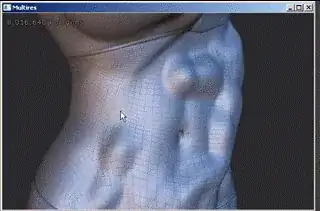

Here's the mutable prototype with over 8 million quadrangles and a multires subdivision scheme on an i3 with a GF 8400 (this was from some years ago). It's faster than my immutable version but not used in production since I've found the immutable version so much easier to maintain and the performance hit isn't too bad. Note that the wireframe does not indicate facets, but patches (the wires are actually curves, otherwise the entire mesh would be solid black), although all the points in a facet are modified by the brush.

So anyway, I wanted to show some concrete examples of areas where memory management is so helpful, and hopefully also so people don't think I'm just talking out of my butt. I tend to get a little bit irritated when people say memory is so abundant and cheap, because that's talking about slow memory like DRAM and hard drives. It's still so small and so precious when we're talking about fast memory, and performance for genuinely critical (i.e., common case, not for everything) paths relates to playing to that small amount of fast memory and utilizing it as effectively as we can.

For this kind of thing it is really helpful to work with a language like C++ that allows you to design high-level objects while still being able to store them in one or more contiguous arrays, with the guarantee that the memory of all such objects will be contiguously represented and without any unneeded memory overhead per object (ex: not all objects need reflection or virtual dispatch). When you actually move into those performance-critical areas, having such memory control becomes a productivity boost compared to, say, fiddling with object pools and using primitive data types to avoid object overhead and GC costs and to keep memory that is frequently accessed together contiguous.
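A small sketch of what that looks like in practice: a class with a constructor, private state, and member functions that still occupies exactly the bytes of its fields, stored back to back in a `std::vector`. The types `Vec3` and `PolyVec3` and the helper `stride_bytes` are hypothetical names for this example, and the size comparison with the virtual version reflects common ABIs rather than a language guarantee.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <type_traits>
#include <vector>

// A high-level object: encapsulated state plus behavior, no virtual functions.
class Vec3 {
public:
    Vec3(float x, float y, float z) : x_(x), y_(y), z_(z) {}
    float dot(const Vec3& o) const { return x_ * o.x_ + y_ * o.y_ + z_ * o.z_; }
private:
    float x_, y_, z_;
};

// The abstraction costs nothing per object: just three floats.
static_assert(sizeof(Vec3) == 3 * sizeof(float), "no hidden per-object overhead");
static_assert(std::is_standard_layout<Vec3>::value, "plain, predictable layout");
static_assert(std::is_trivially_copyable<Vec3>::value, "safe to copy as raw bytes");

// Same data with virtual dispatch; on common ABIs each object also carries
// a hidden pointer to its vtable, so it is larger.
struct PolyVec3 {
    virtual ~PolyVec3() = default;
    float x, y, z;
};
static_assert(sizeof(PolyVec3) > sizeof(Vec3), "virtual dispatch adds per-object cost");

// Distance in bytes between neighboring elements; std::vector guarantees
// contiguous storage, so this equals sizeof(Vec3).
inline std::size_t stride_bytes(const std::vector<Vec3>& v) {
    assert(v.size() >= 2);
    return reinterpret_cast<std::uintptr_t>(&v[1]) -
           reinterpret_cast<std::uintptr_t>(&v[0]);
}
```

Only the types that actually need virtual dispatch pay for it; everything else can sit in a tight array and be looped over like raw floats.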

So memory management/control (or lack thereof) is actually a dominating reason in my case for choosing which language most productively allows me to tackle problems. I do definitely write my share of code which isn't performance-critical, and for that I tend to use Lua, which is pretty easy to embed from C.