[edit#2] If anyone from VMware can hit me up with a copy of VMware Fusion, I'd be more than happy to run the same tests as a VirtualBox vs. VMware comparison. I suspect the VMware hypervisor is better tuned for hyper-threading (see my answer as well).

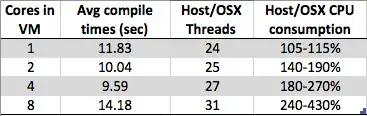

I'm seeing something curious. As I increase the number of cores on my Windows 7 x64 virtual machine, the overall compile time increases instead of decreasing. Compiling is usually very well suited to parallel processing: in the middle phase (after dependency mapping) you can simply invoke a compiler instance on each .c/.cpp/.cs/whatever file to build object files for the linker to combine. So I would have expected compile time to scale well with the number of cores.

But what I'm seeing is:

- 8 cores: 1.89 sec

- 4 cores: 1.33 sec

- 2 cores: 1.24 sec

- 1 core: 1.15 sec
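To put these numbers in perspective, here is a small sketch that converts them into speedup relative to the single-core run (speedup = t1 / tN, so anything below 1.0 means the extra cores made the build slower). The times are the ones listed above; the `speedup` helper is just an illustrative name.

```python
# Mean compile times from the list above, in seconds, keyed by VM core count.
compile_times = {1: 1.15, 2: 1.24, 4: 1.33, 8: 1.89}


def speedup(times):
    """Return t(1 core) / t(N cores) per core count; values below 1.0 mean a slowdown."""
    baseline = times[1]
    return {cores: baseline / t for cores, t in times.items()}


for cores, s in sorted(speedup(compile_times).items()):
    print(f"{cores} core(s): speedup {s:.2f}x")
```

Every configuration above 1 core comes out below 1.0x, with 8 cores roughly 40% slower than 1 core.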

Is this simply an artifact of a particular vendor's hypervisor implementation (type 2: VirtualBox in my case), or something more pervasive across VMs, a trade-off made to keep hypervisor implementations simpler? With so many factors involved, I can make arguments both for and against this behavior, so if someone knows more about this than I do, I'd be curious to read your answer.

Thanks, Sid

[edit: addressing comments]

@MartinBeckett: Cold compiles were discarded.

@MonsterTruck: Couldn't find an open-source project to compile directly. That would be great, but I can't risk messing up my dev environment right now.

@Mr Lister, @philosodad: I have 8 hardware threads and am using VirtualBox, so there should be a 1:1 mapping without emulation.

@Thorbjorn: I have allocated 6.5 GB to the VM and the VS2012 project is smallish, so it's quite unlikely that I'm swapping in/out and thrashing the page file.

@All: If someone can point to an open-source VS2010/VS2012 project, that might be a better community reference than my (proprietary) VS2012 project. Orchard and DNN seem to need environment tweaking to compile in VS2012. I'd really like to know whether someone with VMware Fusion also sees this (for a VMware vs. VirtualBox comparison).

Test details:

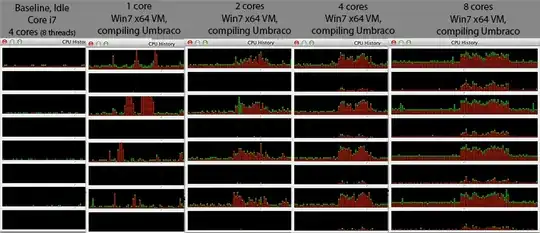

- Hardware: MacBook Pro Retina

- CPU: Core i7 @ 2.3 GHz (quad-core, hyper-threaded = 8 logical cores in Windows Task Manager)

- Memory: 16 GB

- Disk: 256 GB SSD

- Host OS: Mac OS X 10.8

- VM type: VirtualBox 4.1.18 (type 2 hypervisor)

- Guest OS: Windows 7 x64 SP1

- Compiler: VS2012 compiling a solution with 3 C# Azure projects

- Compile times measured by a VS2012 plugin called 'VSCommands'

- All tests run 5 times, first 2 runs discarded, last 3 averaged
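For anyone who wants to reproduce the measurement outside of VSCommands, the methodology above (5 runs, first 2 discarded, last 3 averaged) can be sketched roughly as follows. This is a hypothetical harness, not what VSCommands does internally; the build command is an assumption (something like `msbuild YourSolution.sln /t:Rebuild`, where the solution name is a placeholder).

```python
import subprocess
import time
from statistics import mean


def warm_average(run_times, discard=2):
    """Drop the first `discard` runs (cold caches) and average the remaining ones."""
    if len(run_times) <= discard:
        raise ValueError("need more runs than the number of discarded warm-up runs")
    return mean(run_times[discard:])


def time_build(cmd, runs=5, discard=2):
    """Run a build command `runs` times and return the warm average wall-clock time in seconds.

    `cmd` is a hypothetical argument list, e.g. ["msbuild", "YourSolution.sln", "/t:Rebuild"].
    """
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(cmd, check=True)  # raises CalledProcessError if the build fails
        durations.append(time.perf_counter() - start)
    return warm_average(durations, discard)
```

Note that wall-clock timing like this also includes the build tool's own startup overhead, so the absolute numbers would likely differ from what VSCommands reports inside the IDE; the relative trend across core counts is what matters here.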