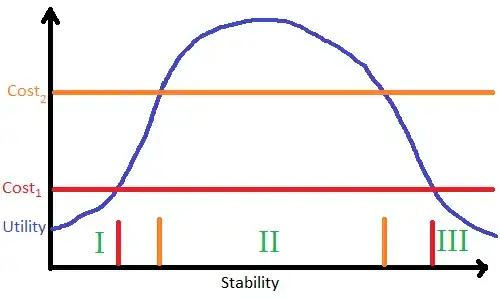

Unless you are going to write code without testing it, you are always going to incur the cost of testing.

The difference between having unit tests and not having them comes down to comparing the cost of writing and running the tests with the cost of testing by hand.

If the cost of writing a unit test is 2 minutes and the cost of running the unit test is practically 0, but the cost of manually testing the code is 1 minute, then you break even when you have run the test twice.
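The break-even arithmetic above can be sketched in a few lines. This is a minimal illustration, not a definitive model. It assumes each cost is a fixed amount per run (for example, in minutes). The function name `break_even_runs` is hypothetical. It finds the smallest number of runs `n` for which writing the test plus `n` automated runs costs no more than `n` manual tests.

```python
import math

def break_even_runs(write_cost: float, run_cost: float, manual_cost: float) -> float:
    """Return the smallest whole number of runs n for which
    write_cost + n * run_cost <= n * manual_cost.

    Assumes (hypothetically) that every cost is a fixed amount per run.
    Returns math.inf if running the test saves nothing over testing by hand.
    """
    saving_per_run = manual_cost - run_cost
    if saving_per_run <= 0:
        # The test is never cheaper to run than a manual check, so it never pays off.
        return math.inf
    # Each run recovers saving_per_run; round up to a whole number of runs.
    return math.ceil(write_cost / saving_per_run)

# Figures from the example: 2 minutes to write, ~0 to run, 1 minute to test by hand.
print(break_even_runs(write_cost=2, run_cost=0, manual_cost=1))  # 2
```

The division makes one point of the argument explicit. The cheaper a test is to run relative to a manual check, the sooner it pays for itself.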

For many years I was under the misapprehension that I didn't have enough time to write unit tests for my code. When I did write tests, they were bloated, heavy things, which only reinforced my belief that I should write unit tests only when I knew they were needed.

Recently I was encouraged to use Test-Driven Development (TDD), and I found it to be a complete revelation. I'm now firmly convinced that I don't have the time *not* to write unit tests.

In my experience, by developing with testing in mind you end up with cleaner interfaces, more focussed classes & modules and generally more SOLID, testable code.

Every time I work with legacy code that doesn't have unit tests and I have to test something manually, I keep thinking "this would be so much quicker if this code already had unit tests". Every time I try to add unit tests to highly coupled code, I keep thinking "this would be so much easier if it had been written in a decoupled way".

Compare the two experimental stations that I support. One has been around for a while and has a great deal of legacy code, while the other is relatively new.

When adding functionality to the old lab, I often have to go down to the lab and spend many hours working through the implications of what the users need. I also have to work out how to add it without affecting anything else. The code is simply not set up to allow off-line testing, so almost everything has to be developed on-line. If I did try to develop off-line, I would end up with an unreasonable number of mock objects.

In the newer lab, I can usually add functionality by developing it off-line at my desk, mocking out only those things which are immediately required, and then only spending a short time in the lab, ironing out any remaining problems not picked up off-line.

TL;DR version:

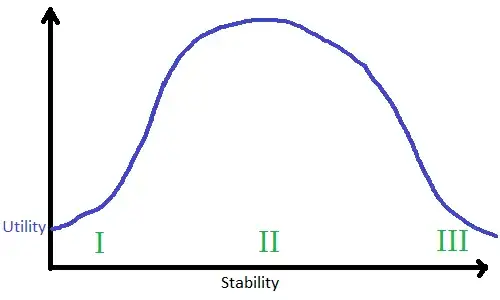

Write a test when the cost of writing it, plus the cost of running it as many times as you need to, is likely to be less than the cost of testing manually as many times as you need to.

Remember, though, that if you use TDD, the cost of writing tests is likely to come down as you get better at it. Also, unless the code is absolutely trivial, you will probably end up running your tests more often than you expect.