According to this link (and some other sources I have found), more bandwidth on a wire means a higher bit rate (more bits per second)...

Can someone explain, as simply as possible, why this is so?

This is all I know so far (what I was able to learn by myself):

-> Data is sent through a wire using frequency modulation, meaning the voltage on the wire changes over time. At the end node, this signal is decoded.

-> A voltage at a specific frequency can be sent over a wire (for example, a voltage that changes with a frequency of 5 Hz). That will be a sinusoidal signal.

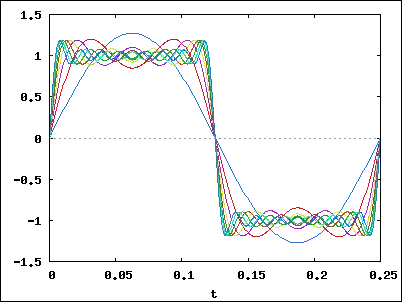
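
As a minimal sketch of such a signal: the snippet below computes the voltage of a 5 Hz sinusoid at a given time. The function name `sine_voltage`, the amplitude of 1, and the 100 samples per second are illustrative assumptions, not anything taken from the sources above.

```python
import math

def sine_voltage(t, freq_hz=5.0, amplitude=1.0):
    """Voltage of a pure sinusoid at time t (seconds). Hypothetical helper."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t)

# Sample one second of the signal at an assumed rate of 100 samples per second.
sample_rate = 100
samples = [sine_voltage(n / sample_rate) for n in range(sample_rate)]
```

One period of a 5 Hz signal lasts 0.2 s, so the voltage reaches its first peak a quarter of the way through, at t = 0.05 s.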

-> To represent data (something like 10111001), you need to mix a number of voltages at different frequencies so that the signal looks something more like:
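
A minimal sketch of that mixing, under simplifying assumptions: instead of 10111001 it uses the simpler repeating pattern 1010..., and it adds sinusoids at odd multiples of a 1 Hz base frequency (the standard Fourier series of a square wave). The name `mixed_signal` and all parameter values are hypothetical, chosen only for illustration.

```python
import math

def mixed_signal(t, base_hz=1.0, n_harmonics=5):
    """Sum of sinusoids approximating a wave that alternates between 1 and 0.

    Each term is a sinusoid at an odd multiple of base_hz; higher
    frequencies get smaller amplitudes. Hypothetical helper.
    """
    total = 0.5  # constant offset so the wave sits between 0 and 1
    for k in range(n_harmonics):
        h = 2 * k + 1  # odd harmonics: 1, 3, 5, ...
        total += (2 / (math.pi * h)) * math.sin(2 * math.pi * h * base_hz * t)
    return total

# Read the signal in the middle of each half-period and threshold at 0.5.
bits = [1 if mixed_signal(t) > 0.5 else 0 for t in (0.25, 0.75, 1.25, 1.75)]
```

With only a few frequencies the result is a wobbly approximation of the flat "1" and "0" levels; adding more (higher) frequencies makes the edges sharper and the levels flatter.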

My exact questions are:

-> Can this be done with any frequencies I choose? For example, can I achieve the desired signal using frequencies between 10 and 20 Hz, or between 50 and 60 Hz? Or do I need specific frequencies?

-> How does the bandwidth (the frequency range I can use) affect how fast the signal goes on the wire (the bit rate)?

I am trying to learn all this by myself, without much of an Electrical Engineering background, so it would be very helpful if you could keep it simple.

Also, any advice on background info that I need first would be much appreciated.