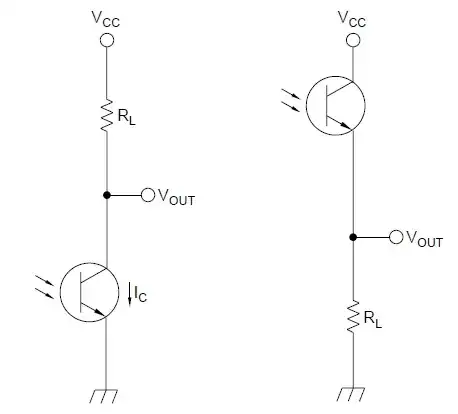

I have an IR detection circuit that consists of a phototransistor and a resistor in a voltage divider configuration, like the right circuit here:

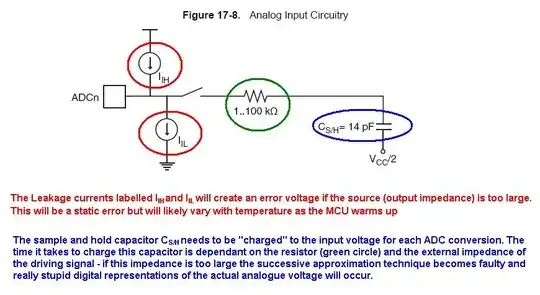

In my case, R_L = 1 MOhm since I want low current consumption. I connect V_out to an ADC pin of my ATtiny85 MCU. Now for my question: The ATtiny85 datasheet says that "The ADC is optimized for analog signals with an output impedance of approximately 10 kOhm or less."

The problem is that I don't understand what "output impedance" means when we're talking about an ADC input. Does the circuit above have an output impedance of 10 kOhm or less? And why is it called impedance? I thought that term was only used for AC signals. In my case I only want to read a voltage a few times per second at most, to measure the strength of the incoming infrared light.
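To put rough numbers on the datasheet's 10 kOhm guideline, here is a sketch that treats whatever drives the ADC pin as a resistance charging the ADC's internal sample-and-hold capacitor. It compares the RC settling time with the time that capacitor is connected to the pin. It assumes the phototransistor behaves like a current source (very high impedance), so the impedance seen by the pin is roughly R_L alone. The capacitance (14 pF), ADC clock (125 kHz) and 1.5-cycle sampling window are assumed illustrative values, not confirmed here, and the ADC's own internal series resistance is ignored.

```python
import math

# Assumed values for illustration only; check the ATtiny85 datasheet
# (ADC characteristics / analog input circuitry) for the real figures.
C_SAMPLE_HOLD = 14e-12   # F, assumed sample-and-hold capacitance
ADC_CLOCK_HZ = 125e3     # Hz, assumed ADC clock (prescaled from the CPU clock)
SAMPLE_CYCLES = 1.5      # assumed ADC clock cycles the capacitor is connected


def settling_time(r_source, c_sh=C_SAMPLE_HOLD, bits=10):
    """Time for an RC input to settle within 1/2 LSB after a full-scale step.

    Solves exp(-t / tau) <= 1 / 2**(bits + 1) for t, with tau = R * C.
    The ADC's own internal series resistance is ignored here.
    """
    tau = r_source * c_sh
    return tau * math.log(2 ** (bits + 1))


def sample_window(f_adc=ADC_CLOCK_HZ, cycles=SAMPLE_CYCLES):
    """How long the sample-and-hold capacitor is assumed to see the pin."""
    return cycles / f_adc


if __name__ == "__main__":
    window = sample_window()
    # Phototransistor modelled as a current source, so the pin sees ~R_L.
    for r in (10e3, 1e6):
        t = settling_time(r)
        verdict = "fits" if t <= window else "too slow"
        print(f"R = {r / 1e3:.0f} kOhm: settle {t * 1e6:.1f} us "
              f"vs window {window * 1e6:.1f} us -> {verdict}")
```

With these assumed numbers, a 10 kOhm source settles in about 1 us, well inside the 12 us window, while a 1 MOhm source needs on the order of 100 us. This is why the word "impedance" still matters for a slowly changing DC signal: the ADC briefly draws charging current from the source every time it samples.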