I have a product using the MSP430 microcontroller, which has been selling for a couple of years now. One of the MSP430's jobs is to communicate over asynchronous serial with a low-power radio.

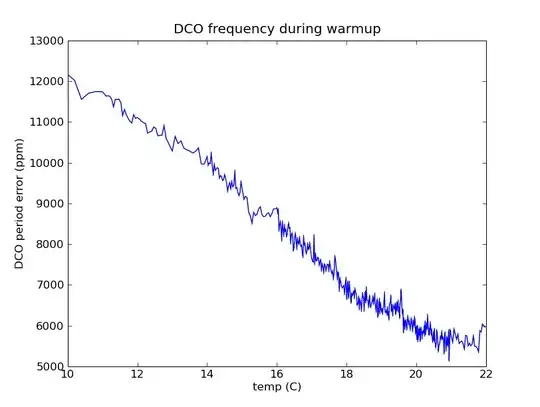

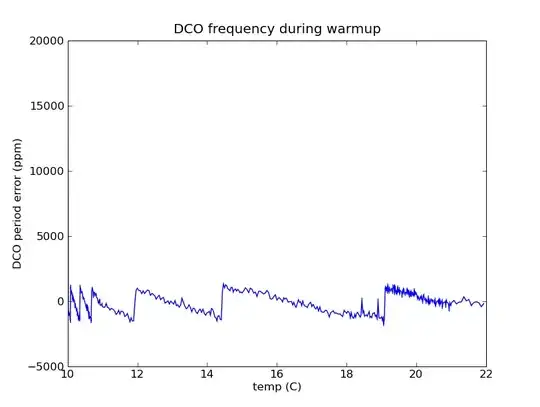

With the onset of this winter, there has been an unacceptable failure rate (several percent) at cold temperatures. Investigation has found that the serial communication with the radio is failing. The baud rate generator for the serial port is fed by SMCLK, which is divided down from the MSP430's digitally controlled oscillator (DCO).
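
To make the clock dependency concrete, here is a minimal sketch in plain C of how the actual baud rate follows SMCLK. It assumes a simple integer divider (the MSP430's modulation register is ignored), and the function names and the example values of 1 MHz SMCLK and 9600 baud are hypothetical, not taken from the product. It only shows the arithmetic relating clock frequency to baud rate error.

```c
#include <stdint.h>

/* Integer divisor for a target baud rate, rounded to nearest.
   Assumption: plain divider, no fractional modulation. */
uint32_t uart_divisor(uint32_t smclk_hz, uint32_t baud)
{
    return (smclk_hz + baud / 2u) / baud;
}

/* Baud rate error in percent when SMCLK actually runs at
   actual_smclk_hz but the divisor was chosen for a nominal clock. */
double baud_error_percent(uint32_t actual_smclk_hz,
                          uint32_t divisor,
                          uint32_t target_baud)
{
    double actual_baud = (double)actual_smclk_hz / (double)divisor;
    return 100.0 * (actual_baud - (double)target_baud) / (double)target_baud;
}
```

With these example numbers, `uart_divisor(1000000, 9600)` gives 104, and a DCO that has drifted 5% low (950 kHz) yields roughly a 4.8% slow baud rate. Asynchronous serial links generally tolerate only a few percent of combined mismatch between the two ends, so any drift in SMCLK carries straight through to the link.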

Why is serial communication failing at low temperatures?

(Note: I have already solved the problem and will be posting the answer soon. Hint: it was a software bug.)