The article The 5GHz Project, on overclocking a Pentium from 1GHz to 5GHz using liquid nitrogen, asserts that "Heat dissipation rises exponentially during extreme overclocking". However, this post on CPU power and heat, How are the CPU power and temperature caculated/estimated?, suggests that power is linearly proportional to frequency, as follows:

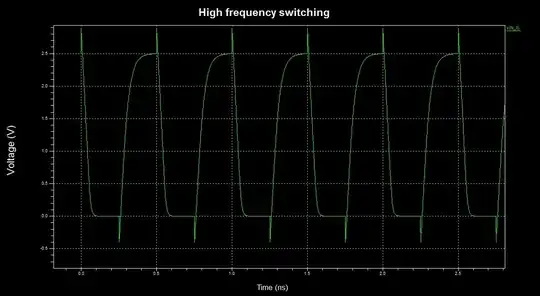

To work out the work done whenever the gate changes state you can model it as a capacitor with some effective capacitance, \$C_g\$, and you get:

$$W = \frac{1}{2}C_gV^2$$

and the power is the work per state change times the number of state changes per second, so:

$$P_g \propto C_gV^2f$$

If you add up all the logic gates in the processor you can define an effective total capacitance, \$C\$, that will be the sum of all the gate capacitances, \$C_g\$, so:

$$P \propto CV^2f$$

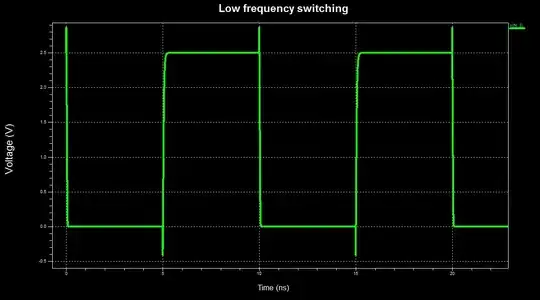

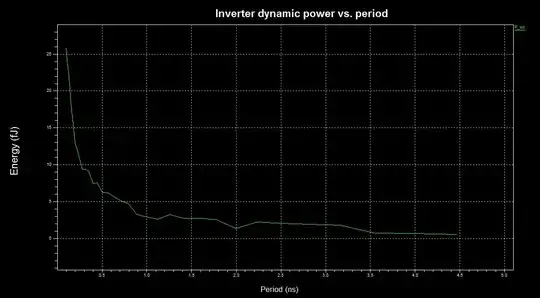

The 5GHz project page then states "In the past we recorded about 135 watts using the Chip-con compressor at 4.1 GHz. Using our nitrogen cooling to break the 5 GHz sound barrier would produce peak heat dissipation of up to 180 watts emitted from a die surface area of 1.12 square centimeters. Applied to our example that means 1.6 MW per square meter."
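As a sanity check on the quoted figures, here is a small Python sketch of my own (it is not from the article). It recomputes the heat flux and fits a power law \$P \propto f^n\$ through the two quoted data points. The function names are hypothetical, and the fit assumes the two measurements are comparable, even though they used different cooling and presumably different voltages.

```python
import math


def heat_flux_w_per_m2(power_w, area_cm2):
    """Heat flux in W/m^2 for a given power and die area in cm^2."""
    area_m2 = area_cm2 * 1e-4  # 1 cm^2 = 1e-4 m^2
    return power_w / area_m2


def fitted_exponent(p1_w, f1_ghz, p2_w, f2_ghz):
    """Exponent n such that P2/P1 = (f2/f1)**n (two-point fit, assumption)."""
    return math.log(p2_w / p1_w) / math.log(f2_ghz / f1_ghz)


# Figures quoted from the 5GHz project page
flux = heat_flux_w_per_m2(power_w=180.0, area_cm2=1.12)
n = fitted_exponent(p1_w=135.0, f1_ghz=4.1, p2_w=180.0, f2_ghz=5.0)

print(f"heat flux: {flux / 1e6:.2f} MW/m^2")
print(f"implied exponent n: {n:.2f}")
```

This reproduces the article's figure of about 1.6 MW per square meter. The implied exponent comes out at about 1.45, which is faster than linear but well short of anything I would call exponential, although two data points are hardly a proper fit.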

I originally asked the above as a follow-on to the question How are the CPU power and temperature caculated/estimated?. That follow-on question was deleted, but I got this reply before it was deleted:

The formula only suggests a linear increase with respect to frequency if the voltage is held constant, which is not going to be true for large overclocks such as this one. The CPU becomes unstable at high frequencies, which can be partially compensated for by increasing the voltage. Thus, for large overclocks, the power grows faster than linearly because of this accompanying voltage contribution. I don't think it's literally exponential, however; that may be just imprecise language. The details would probably be better left to another SE site.

Probably the 5GHz people meant to say "increases quadratically", assuming that as they increase the frequency, the voltage is also increased proportionally to the level necessary for the chip to function reliably. What is the equation relating frequency and required voltage for a chip that operates stably at frequency F and voltage V? Note that for a GPU such as the AMD HD7850, I can set the clock frequency without changing the voltage supplied to the chip, so changing the frequency does not automatically imply a change in supply voltage. Clearly at some point my chip will stop functioning properly, so it would be helpful to have an equation showing how much to increase the voltage as a function of frequency. Also, some GPU users undervolt the chip. Why would they do this?
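To make my assumption concrete, here is a rough worked version using the formula quoted above. The scaling law \$V \propto f^{\alpha}\$ and the exponent \$\alpha\$ are my own hypothetical parametrisation, not something taken from either source:

$$P \propto CV^2f, \quad V \propto f^{\alpha} \implies P \propto Cf^{2\alpha + 1}$$

With constant voltage (\$\alpha = 0\$) this is linear in \$f\$. With voltage strictly proportional to frequency (\$\alpha = 1\$) it would be cubic in \$f\$, that is, quadratic in \$V\$ times linear in \$f\$. A rise that is quadratic in \$f\$ would correspond to \$\alpha = 1/2\$. None of these cases is exponential.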

Under the assumption of a quadratic heat rise in frequency/voltage, it would be more efficient to have 5 processors running at 1GHz than 1 processor running at 5GHz. Is this correct? That is, is space quadratically cheaper than time, more or less? How should this tradeoff be stated correctly?
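To illustrate the tradeoff I am asking about, here is a minimal Python sketch based on the \$P \propto CV^2f\$ formula quoted earlier. Everything beyond that formula is my own assumption: the hypothetical `voltage_exponent` parameter (voltage scaling as frequency raised to that power), identical capacitance per core, and a workload that parallelises perfectly across cores.

```python
def relative_power(freq_ratio, voltage_exponent):
    """Power of one core relative to a baseline core at freq_ratio = 1.

    Uses P ∝ C * V^2 * f with the assumed scaling V ∝ f**voltage_exponent.
    """
    voltage_ratio = freq_ratio ** voltage_exponent
    return voltage_ratio ** 2 * freq_ratio


def compare(n, voltage_exponent):
    """Return (one core at n times the clock, n cores at the base clock)."""
    one_fast = relative_power(float(n), voltage_exponent)
    many_slow = n * relative_power(1.0, voltage_exponent)
    return one_fast, many_slow


for alpha in (0.0, 0.5, 1.0):
    fast, slow = compare(5, alpha)
    print(f"alpha={alpha}: 1 x 5GHz -> {fast:.0f}, 5 x 1GHz -> {slow:.0f}")
```

Under these assumptions the two options cost the same power when the voltage is held constant, while the single fast core costs 5 times more if the voltage scales as the square root of frequency and 25 times more if the voltage scales proportionally with frequency.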

In this response to a question on How Modern Overclocking Works, @Turbo J says:

You can increase the clock frequency further when the voltage is higher - but at the price of massive additional generated heat. And the silicon will "wear out" faster, as bad things like Electromigration will increase too.

So again, the question is: what equation models the "massive additional heat"? Is "massive" quadratic, i.e. are we still talking about the work equation above?