Plant leakage is a well-known effect: signal leaks out of the cable system and can be picked up by antennas. (For some reason, the system of cables and connectors in a distribution network is known as "plant".)

In an ideal coax, the wave travels between the conductors and sets up local currents and voltages along the length of the transmission line, concentrated on the inner surface of the outer conductor and on the outer surface of the inner conductor (the skin effect). Again ideally, no signal travelling on the outside of the outer conductor can interact with the signal on its inner surface.
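To put a number on the skin effect, here is a minimal sketch using the standard good-conductor approximation δ = sqrt(ρ / (π f μ)). The copper resistivity and the example frequencies are my own assumptions for illustration, not values from your setup.

```python
import math

MU_0 = 4 * math.pi * 1e-7   # permeability of free space, H/m
RHO_COPPER = 1.68e-8        # assumed resistivity of copper near 20 C, ohm*m


def skin_depth(freq_hz, resistivity=RHO_COPPER, mu_r=1.0):
    """Skin depth in metres for a good conductor at freq_hz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return math.sqrt(resistivity / (math.pi * freq_hz * MU_0 * mu_r))


if __name__ == "__main__":
    # Example frequencies are arbitrary illustrations.
    for f in (1e6, 100e6, 1e9):
        print(f"{f / 1e6:8.0f} MHz: {skin_depth(f) * 1e6:6.2f} um")
```

At RF the depth comes out at tens of micrometres or less, which is tiny compared with the thickness of a solid shield. That is why currents on the inner and outer surfaces of a good shield are effectively isolated from each other.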

Plant leakage arises from departures from this ideal: wound or wrapped coax, poorly terminated connectors, and mismatched termination loads.
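As a rough way to quantify a mismatched termination, the usual transmission-line relations give the reflection coefficient, return loss and VSWR. This is only a sketch: the 75 ohm line impedance and the 50 ohm load are assumed example values, and the function names are mine.

```python
import math


def reflection_coefficient(z_load, z0=75.0):
    """Voltage reflection coefficient of a load on a line of impedance z0."""
    return (z_load - z0) / (z_load + z0)


def return_loss_db(gamma):
    """Return loss in dB (infinite for a perfect match)."""
    mag = abs(gamma)
    return math.inf if mag == 0 else -20 * math.log10(mag)


def vswr(gamma):
    """Voltage standing wave ratio (infinite for total reflection)."""
    mag = abs(gamma)
    return math.inf if mag >= 1 else (1 + mag) / (1 - mag)


if __name__ == "__main__":
    # Assumed example: a 50 ohm load on a 75 ohm line.
    g = reflection_coefficient(50.0, 75.0)
    print(f"gamma = {g:.3f}, RL = {return_loss_db(g):.2f} dB, VSWR = {vswr(g):.2f}")
```

Note that these numbers only describe how far the termination is from matched. They say nothing about how much of the reflected energy actually escapes, which depends on the shield and connectors.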

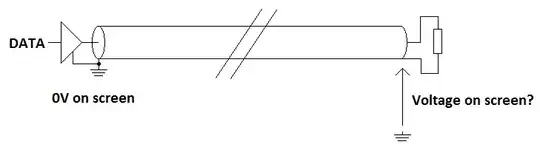

In your drawing above, assuming the termination is also properly shielded, the return signal should travel along the inner surface of the outer conductor if the system is ideal, so you shouldn't actually see any radiation. Evidence of this is how hollow (non-coaxial) waveguides work. They carry TE and TM modes rather than TEM, but everything still flows along the inner surface and there is very low leakage.

If this is more than a hypothetical situation and you are seeing leakage, it can arise from the quality of the coax: whether the shield is solid foil or braided matters, and how much depends on the frequency you're driving it at (wavelength vs. mesh size). Breaks in connector shield continuity and mismatched terminations are all potential radiation points. Also, the attached equipment on the receiving end may inject a common-mode return current onto the grounded shield, causing the whole outer surface to act as an antenna. If that current is derived from the feed signal (amplifier power rail bounce, as one example), it might appear that the coax is leaking.
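For the wavelength vs. mesh size comparison, a quick sketch is to express the braid opening as a fraction of the free-space wavelength. The 1 mm opening and the frequencies below are hypothetical values for illustration; the smaller the fraction, the less the opening matters, though this ratio alone is not a shielding figure.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def free_space_wavelength(freq_hz):
    """Free-space wavelength in metres."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return C / freq_hz


def aperture_fraction(aperture_m, freq_hz):
    """Aperture size expressed as a fraction of the free-space wavelength."""
    return aperture_m / free_space_wavelength(freq_hz)


if __name__ == "__main__":
    opening = 1e-3  # hypothetical 1 mm gap in the braid
    for f in (100e6, 1e9, 10e9):
        frac = aperture_fraction(opening, f)
        print(f"{f / 1e6:7.0f} MHz: opening is {frac:.5f} of a wavelength")
```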

In general, cable television plant leakage arises from the less expensive cable the operators use (with many, many miles/km installed, cost really matters), from the difficulty of maintaining connectors over many years, and from damage such as nicks and kinks.

There are some systems that use gas dielectric and solid conductors to transport tens of MW to antennae with almost no leakage, so ideal performance is approachable.