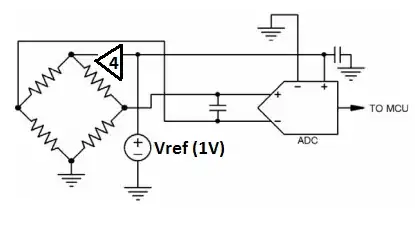

I have a variety of bridge transducers (pressure, strain gage) with a sensitivity of 1 mV/V. Most 24-bit ADCs and analog front-end chips have a PGA with programmable gain from 1 to 128. With the reference tied to the excitation voltage, this means that even at the highest gain setting the maximum signal is only 12.8% of the ADC's full-scale range. In other words, the ENOB of the reading drops by about 3 bits purely because of insufficient gain.
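The arithmetic above can be checked with a short sketch. It assumes a bipolar differential ADC whose full-scale input is ±Vref and a reference tied directly to the excitation (Vref = Vexc). The function name is hypothetical.

```python
import math

def fraction_of_full_scale(sensitivity_v_per_v, pga_gain, vexc_over_vref=1.0):
    """Fraction of the ADC's +/-Vref span used by a full-scale bridge signal.

    Assumes a bipolar ADC whose full-scale differential input is +/-Vref,
    so the ratio is (S * Vexc * G) / Vref.
    """
    return sensitivity_v_per_v * vexc_over_vref * pga_gain

frac = fraction_of_full_scale(1e-3, 128)   # 1 mV/V bridge at PGA gain 128
bits_lost = -math.log2(frac)               # resolution lost to unused input range
print(f"{frac:.1%} of full scale, {bits_lost:.2f} bits lost")
```

This prints `12.8% of full scale, 2.97 bits lost`, matching the "about 3 bits" figure.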

Recently I noticed that some ADCs accept a reference voltage significantly lower than the excitation voltage of my sensors. Is it feasible to, for example, lower the reference voltage and use a non-inverting op-amp with a gain of 4 to generate the transducer excitation from it? As far as I can tell, this keeps all the benefits of the ratiometric connection. The only cost should be slightly worse noise performance, because the ADC's noise, expressed in bits, is higher at a reduced Vref; the op-amp's closed-loop output resistance and its input offset voltage and current appear negligible in this scenario. The extra resolution (a signal 4x larger relative to Vref, i.e. 2 more bits) should more than compensate for the additional noisy bits. As a bonus, each channel gets its own excitation source, so that, e.g., a short circuit in one transducer won't disturb the excitation of the others.
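A minimal, idealized sketch of the two arrangements (no noise, offset, or buffer gain error). The reference values of 1.25 V and 1.20 V and the buffer gain `K = 4` are hypothetical; the excitation is modeled as exactly `K * Vref` so that a drifting reference can be simulated.

```python
import math

def adc_code_fraction(load_fraction, sensitivity_v_per_v, vexc, vref, pga_gain):
    """Ideal ADC reading as a fraction of the +/-Vref full scale."""
    v_bridge = load_fraction * sensitivity_v_per_v * vexc  # bridge differential output
    return v_bridge * pga_gain / vref

S, G_PGA, K = 1e-3, 128, 4.0   # 1 mV/V bridge, max PGA gain, hypothetical 4x excitation buffer

for vref in (1.25, 1.20):      # hypothetical reference drift; excitation follows as K * Vref
    usual = adc_code_fraction(1.0, S, vexc=vref, vref=vref, pga_gain=G_PGA)
    proposed = adc_code_fraction(1.0, S, vexc=K * vref, vref=vref, pga_gain=G_PGA)
    print(f"Vref={vref} V: usual {usual:.1%}, proposed {proposed:.1%}, "
          f"bits lost {-math.log2(usual):.2f} vs {-math.log2(proposed):.2f}")
```

Both reference values print the same percentages (12.8% vs 51.2%), which is the ratiometric property: the reading depends on S, K and the PGA gain but not on Vref itself. The proposed arrangement loses about 0.97 bits instead of 2.97.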

I believe I can get very good gain accuracy by using a bussed resistor network chip for the feedback: the resistors will be exceptionally well matched to each other and thermally coupled, so the gain won't drift with temperature either.
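Because the excitation is K·Vref rather than Vref, the buffer gain K multiplies every reading, so its stability matters directly. A hedged sketch of why matched, thermally coupled resistors help: it assumes an ideal non-inverting stage with Rf = 3R and Rg = R (gain 4), a hypothetical element value of 10 kΩ, and a hypothetical 100 ppm shift. How Rf and Rg are built from the network elements is left open.

```python
def noninverting_gain(r_feedback, r_ground):
    """Ideal non-inverting amplifier gain: 1 + Rf/Rg."""
    return 1.0 + r_feedback / r_ground

R = 10e3                                   # hypothetical network element value
nominal = noninverting_gain(3 * R, R)      # Rf = 3R, Rg = R -> gain of 4

# Tracking drift: every element shifts by the same +100 ppm, so Rf/Rg is unchanged.
tracked = noninverting_gain(3 * R * 1.0001, R * 1.0001)

# Ratio mismatch: only the feedback resistance shifts by +100 ppm.
mismatched = noninverting_gain(3 * R * 1.0001, R)

for name, g in (("tracked", tracked), ("mismatched", mismatched)):
    print(f"{name}: gain error {(g / nominal - 1) * 1e6:.1f} ppm")
```

Uniform drift cancels (to within floating-point rounding), while a 100 ppm ratio mismatch produces about 75 ppm of gain error, i.e. (G−1)/G of the ratio error, which goes straight into the reading.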

If this works, any ideas why this arrangement doesn't commonly appear in example circuits?

Diagrams modified from http://www.ti.com/lit/ml/slyp163/slyp163.pdf

The "usual" circuit:

Proposed circuit:

The amplifier block looks like this (ignore the op-amp part number; it's simply one that CircuitLab supports):
