For a briefer answer: effectively, the sync pulse triggers a sweep, so it has to arrive each and every time. That also keeps the timing consistent from line to line, and from field to field.

I can't speak for literally every TV, of course, but typically (meaning probably 99%+ of sets sold from, say, 1950 to 1980?), they used a free-running relaxation oscillator (often a blocking oscillator) to generate the H and V sweeps.

The sync signal is added to the oscillator's feedback signal. When a sync pulse arrives while the oscillator is already close to threshold, it gets pushed over threshold, triggering a cycle early. When this happens again on the next cycle, and the one after, and so on, the oscillator is said to be locked to the sync pulses.
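To see the locking mechanism in action, here's a minimal Python sketch of a ramp-and-reset oscillator with sync summed into its threshold test. It's an idealized model, assuming a linear ramp (a real blocking oscillator's waveform is closer to an RC exponential), a threshold of 1.0, and sync pulses at 10% of threshold lasting 5% of the sync period; the function and parameter names are made up for illustration.

```python
def simulate_sync_oscillator(free_period, sync_period=1.0, sync_width=0.05,
                             sync_amp=0.1, v0=0.5, t_end=50.0, dt=1e-3):
    """Ramp-and-reset oscillator with sync pulses summed into its threshold test.

    Time is in units of the sync period; the firing threshold is 1.0.
    Returns the times at which the oscillator fires (i.e. starts a retrace).
    """
    v = v0                      # "capacitor" voltage, ramping toward threshold
    fires = []
    for i in range(int(round(t_end / dt))):
        t = i * dt
        in_pulse = (t % sync_period) < sync_width
        # Sync is added to the ramp: it can only push it over threshold early.
        if v + (sync_amp if in_pulse else 0.0) >= 1.0:
            fires.append(t)
            v = 0.0             # discharge and start the next cycle
        v += dt / free_period   # linear charging ramp
    return fires


def last_intervals(fires, n=10):
    """Periods between the last n + 1 firings."""
    tail = fires[-(n + 1):]
    return [b - a for a, b in zip(tail, tail[1:])]


# Free-running 5% slower than sync: once captured, every period matches sync.
print([round(x, 3) for x in last_intervals(simulate_sync_oscillator(1.05))])
```

With the free-running period 5% longer than the sync period, the firings drift until a sync pulse catches the ramp near the top, and from then on every interval comes out at 1.0 (give or take one time step). Try `free_period=0.95` and it never settles to 1.0.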

If the sync pulse isn't timed correctly (it arrives too soon or too late to trigger a cycle), or is absent entirely, the oscillator doesn't lock and keeps running at its free-running frequency (or at some weird ratio). Thus a picture is always displayed, though it might be skewed, "rolling", or nothing but static.
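When the oscillator isn't locked, how fast the picture rolls or tears is just beat-frequency arithmetic. A small sketch, under the simplifying assumption that both the signal and the free-running oscillator hold steady frequencies; the 57 Hz and 600 Hz figures below are arbitrary examples, not measurements, and the band count is a rough rule of thumb.

```python
def roll_rate_hz(signal_field_rate, free_running_rate):
    """Rate at which the picture rolls vertically when V sync is not locked.

    The raster slips one full field height relative to the picture every
    beat period, so the roll rate is simply the difference frequency.
    """
    return abs(signal_field_rate - free_running_rate)


def diagonal_bands(h_error_hz, field_rate):
    """Approximate number of diagonal bands when H sync is not locked.

    The horizontal sweep slips one whole line per 1 / h_error_hz seconds, so
    during one field it slips about h_error_hz / field_rate lines of phase.
    """
    return abs(h_error_hz) / field_rate


print(roll_rate_hz(59.94, 57.0))      # picture rolls about 2.94 times per second
print(diagonal_bands(600.0, 59.94))   # roughly 10 bands across the screen
```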

As a general design principle, if there's no reason not to, it's better to leave a system running than to try to shut it off when it isn't otherwise needed. In this case, shutting off the sweep when no signal is present would shut off the CRT entirely: no V sweep means no picture height, and no H sweep means no picture width, nor any high voltage to light the tube at all (the high voltage was derived from the horizontal deflection system's flyback transformer). A blank tube would give the user no indication that the set is working. It would also cause a big shift in operating conditions when a signal returns: the picture might take several seconds to stabilize in size, brightness and sync as components settle back to normal. So it's better overall to leave it free-running like this. (Besides, turning it off would've required additional logic: more components, more cost!)

Note that sync can only raise the oscillator's frequency, not lower it: sync pushes the ramp toward threshold, not away from it. (It's a bit of both, really, but the low duty cycle of the sync pulse means the effect is mainly in the speed-up direction.) For those of us with keen hearing, the change in pitch was perceptible: the 15 kHz whine would drift down (and become somewhat erratic) when tuned to static, then settle to a pure tone when tuned in. A muted TV's operation was evident from one or two rooms away (sometimes to the confusion or consternation of adults who had lost that range of hearing!).
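The one-sidedness can be expressed as a capture range. This is a back-of-the-envelope sketch, assuming a linear ramp to a threshold of 1.0 and a sync pulse of height `sync_amp` (as a fraction of threshold), and ignoring pulse width; real blocking oscillators have exponential ramps, so treat the numbers as illustrative. The function names are hypothetical.

```python
def capture_range(free_freq_hz, sync_amp):
    """Sync frequencies a ramp oscillator can be pulled onto (linear-ramp model).

    Assumes the ramp rises linearly from 0 to a threshold of 1.0 over one
    free-running period, and a sync pulse of height sync_amp is added to it.
    The pulse can only fire the oscillator early:
      - if sync arrives later than the free-running period, the oscillator has
        already fired on its own, so it cannot be slowed down;
      - if sync arrives before the ramp reaches 1 - sync_amp, it is too weak.
    Returns (lowest, highest) lockable sync frequency in Hz.
    """
    if not 0.0 < sync_amp < 1.0:
        raise ValueError("sync_amp must be between 0 and 1")
    return free_freq_hz, free_freq_hz / (1.0 - sync_amp)


def can_lock(sync_freq_hz, free_freq_hz, sync_amp):
    lo, hi = capture_range(free_freq_hz, sync_amp)
    return lo <= sync_freq_hz <= hi


# An H oscillator free-running at 15.0 kHz, with sync at 10% of threshold:
print(capture_range(15_000.0, 0.1))       # locks from 15.0 kHz up to about 16.7 kHz
print(can_lock(15_734.0, 15_000.0, 0.1))  # True: faster sync pulls it up
print(can_lock(14_500.0, 15_000.0, 0.1))  # False: slower sync can't pull it down
```

The lower edge of the range is the free-running frequency itself, which is why set designers would aim the free-running frequency slightly below the broadcast rate.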

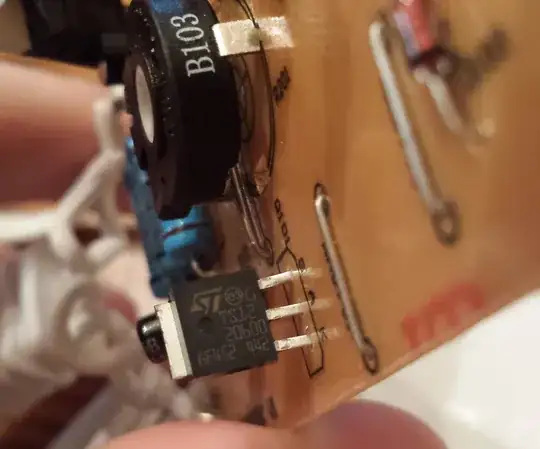

The other thing you need to know about relaxation oscillators: they aren't very stable. Color TVs did use one stable timing element (the colorburst crystal), but only out of necessity; TVs are all about cost savings (indeed, that's where the term "Muntzing" comes from), and a relaxation oscillator is cheap as beans: just one tube or transistor, a coil, and a few resistors and capacitors. The RC (or L/R) time constant has rather loose tolerances, both in initial accuracy (10% tolerance components might be used!) and in overall stability (frequency variation probably > 0.1% over a vertical sweep?). There's no way such an oscillator would stay in step for a perfect 262/263* lines per field if it were resynced only once per field. Maybe you could get away with sync every other line, or perhaps even every 10th line, but certainly not once every field.
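To put numbers on that: with a constant 0.1% frequency error, the horizontal timing error grows linearly with the number of lines between resyncs. A quick sketch, assuming the color NTSC line rate of 4.5 MHz / 286 (about 15,734 Hz) and treating the error as a fixed offset rather than random drift:

```python
NTSC_LINE_RATE_HZ = 4.5e6 / 286            # about 15,734.27 Hz (color NTSC)
LINE_PERIOD_US = 1e6 / NTSC_LINE_RATE_HZ   # about 63.56 microseconds


def accumulated_error(lines_between_sync, freq_error):
    """Timing error built up by a free-running H oscillator between resyncs.

    Assumes a constant fractional frequency error (e.g. 0.001 for 0.1%).
    Returns (error in microseconds, error as a fraction of one line).
    """
    frac = lines_between_sync * freq_error
    return frac * LINE_PERIOD_US, frac


for n in (1, 2, 10, 262.5):
    us, frac = accumulated_error(n, 0.001)
    print(f"resync every {n:>5} lines: {us:6.2f} us ({frac:.1%} of a line)")
```

Resyncing only once per field would leave the bottom of the picture about a quarter of a line out of place, which is a grossly skewed picture; resyncing every 10 lines keeps it to about 1% of a line.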

*The field length was actually a constant 262.5 lines. That's how interlace was implemented, and it doesn't need any special hardware in the TV set: each line is simply drawn at whatever phase it happens to fall relative to vertical sync. Because VSYNC arrives alternately at the start and in the middle of a line (a ± half-line offset from field to field), the lines of one field fall exactly between those of the other, giving a fully interlaced picture with no overlap and no gap!
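The half-line trick can be checked with exact fractions. This is an idealized sketch, assuming continuously running H and V ramps, lines starting on integer multiples of the line period, and no retrace or blanking; it just computes where each line starts vertically in two consecutive fields.

```python
import math
from fractions import Fraction

LINES_PER_FIELD = Fraction(525, 2)   # 262.5 line periods per field


def field_line_positions(field):
    """Vertical position (0 = top, 1 = bottom) where each line of a field starts.

    Idealized model: H and V ramps run continuously, every line starts on an
    integer multiple of the line period, and each field (VSYNC to VSYNC)
    lasts exactly 262.5 line periods. Retrace and blanking are ignored.
    """
    start = field * LINES_PER_FIELD      # time of this field's VSYNC, in lines
    end = start + LINES_PER_FIELD
    positions = []
    k = math.ceil(start)                 # first line that begins in this field
    while k < end:
        positions.append((k - start) / LINES_PER_FIELD)
        k += 1
    return positions


first, second = field_line_positions(0), field_line_positions(1)
merged = sorted(first + second)
gaps = {b - a for a, b in zip(merged, merged[1:])}
print(len(merged), gaps)   # 525 distinct lines, all exactly 1/525 apart
```

The first field contains 263 line starts (its last one is the half line at the bottom) and the second contains 262; together they tile the screen at exactly half the single-field line spacing.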

There may also have been enough variation among signal sources that a more rigid (read: crystal-controlled) sweep would've been impractical. For example, portable cameras would have to be at least as accurate; VCRs would need much greater precision in their drivetrains; early computers and game consoles would've needed much more precise frame rates; and so on. That would be a burden on many sources, without saving any bandwidth: nothing can (well, should) be drawn to the screen during retrace anyway, so why not use that time for timing and framing signals instead?

Incidentally, modern LCDs with analog input do face this issue, timing to a precision of one part in hundreds or even thousands, and they handle it well. Consider a VGA signal at 1024x768: 1024 visible pixels must be drawn per line, plus the blanking and retrace period, for a total of about 1300 pixel clock cycles per HSYNC pulse (1344 in the standard VESA 60 Hz mode). The LCD is a fundamentally digital system, and the RGB signals must be sampled by an ADC at exactly the pixel clock rate, and at the right phase, to recover the data sent by the video card. Typically these monitors are programmed with tables of standard scan rates, and also provide user adjustments to tweak pixel phase and frequency. When tweaking the phase, you can clearly see when pixels become fuzzy and indeterminate: the ADC is sampling on an edge between colors.
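Here's that arithmetic as a small sketch. The horizontal numbers are the VESA DMT 1024x768 at 60 Hz timing (65 MHz pixel clock, 1344 total clocks per line); the `end_of_line_slip` helper is hypothetical and assumes sampling is timed from the leading edge of HSYNC, so the last active pixel is roughly 1320 clocks later.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HTiming:
    """Horizontal timing, in pixel clocks."""
    active: int
    front_porch: int
    sync: int
    back_porch: int
    pixel_clock_hz: float

    @property
    def total(self):
        return self.active + self.front_porch + self.sync + self.back_porch

    @property
    def line_rate_hz(self):
        return self.pixel_clock_hz / self.total


# VESA DMT 1024x768 @ 60 Hz horizontal timing.
XGA60 = HTiming(active=1024, front_porch=24, sync=136, back_porch=160,
                pixel_clock_hz=65e6)


def end_of_line_slip(timing, assumed_total):
    """Sampling slip, in source pixels, at the last active pixel when the
    monitor makes its clock as HSYNC x assumed_total instead of x total.
    Positive means the samples land late (to the right of the true pixels).
    """
    clocks_to_last_pixel = timing.sync + timing.back_porch + timing.active
    rel_error = timing.total / assumed_total - 1.0
    return clocks_to_last_pixel * rel_error


print(XGA60.total, round(XGA60.line_rate_hz, 1))   # 1344 48363.1
print(end_of_line_slip(XGA60, 1344))                # 0.0: every pixel sampled in place
print(round(end_of_line_slip(XGA60, 1343), 2))      # about 0.98 pixel by the right edge
```

So getting the total wrong by a single clock (1343 instead of 1344) puts the right-hand edge nearly a whole pixel out, and keeping the slip under half a pixel means the sampling clock must be right to within about 0.5/1320, or roughly 0.04%.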

The precision timing is done through a combination of crystal control (crystals are much cheaper now than they used to be!), PLL and NCO/DDS techniques, and carefully designed, high-performance RC (or perhaps even LC) oscillators embedded on-chip. These are steered by the PLL and the onboard CPU to lock onto the HSYNC signal with less than a pixel's worth of timing error across the screen.
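As a rough illustration of the PLL part, here's a discrete-time model of a pixel-clock PLL: the VCO (or NCO) output is divided by the line total, a phase detector compares the divider with each HSYNC, and a proportional-integral loop filter steers the frequency. The structure, the gains (`kp`, `ki`) and the 64 MHz starting frequency are assumptions for illustration, not taken from any particular scaler chip.

```python
def simulate_line_locked_pll(n_lines=200, h_total=1344, line_rate_hz=65e6 / 1344,
                             vco_free_hz=64e6, kp=0.5, ki=0.1):
    """Discrete-time model of a pixel-clock PLL locked to HSYNC (hypothetical).

    The VCO output is divided by h_total; once per HSYNC the phase detector
    compares the divider's phase (in lines, 1.0 = one divider cycle) with the
    sync edge, and a PI loop filter sets the VCO frequency for the next line.
    Returns (final VCO frequency in Hz, final phase error in pixels).
    """
    line_period = 1.0 / line_rate_hz
    free_step = vco_free_hz * line_period / h_total  # divider advance per line, open loop
    divider_phase = 0.0
    integrator = 0.0
    vco_hz = vco_free_hz
    error = 0.0
    for n in range(n_lines):
        error = n - divider_phase        # > 0: divider lags HSYNC
        integrator += error
        step = free_step + kp * error + ki * integrator
        vco_hz = step * h_total / line_period
        divider_phase += step            # advance to the next HSYNC
    return vco_hz, error * h_total


vco_hz, phase_err_px = simulate_line_locked_pll()
print(f"{vco_hz / 1e6:.6f} MHz, phase error {phase_err_px:.1e} px")
```

With these gains the loop's error shrinks by a factor of about 0.7 per line, so within a few dozen lines the VCO has been pulled from 64 MHz to 65 MHz and the phase error is far below a pixel. The integral term is what lets the loop settle with zero phase error despite the initial frequency offset.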