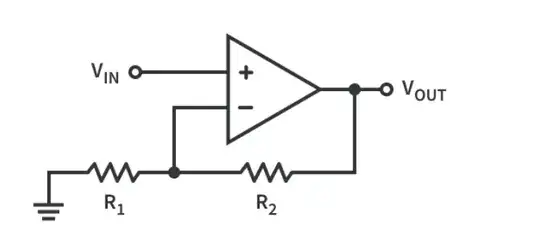

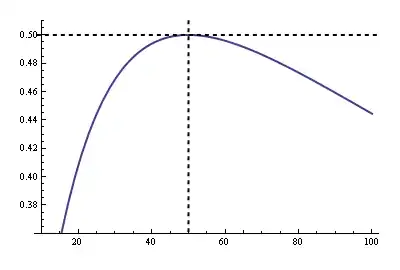

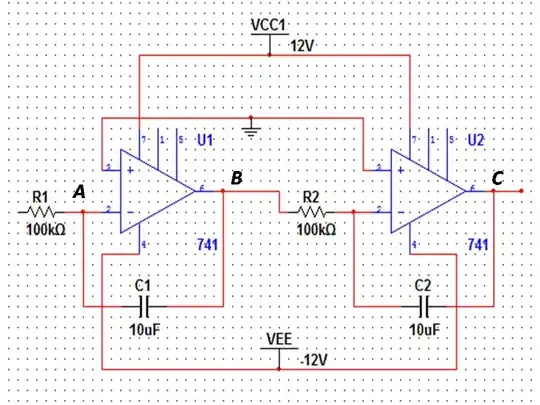

I'm new to op-amps (and circuits in general), and I'm trying to amplify a voltage signal with a simple op-amp circuit such as this one. However, as I increase the gain (increasing R2 and/or decreasing R1), the signal gradually shifts upward until it hits the upper rail, and I can no longer see any of the amplified oscillation. By a gain of around 1000, the output sits at the 5 V rail as a flat line. Could someone explain what might be causing this, and suggest possible solutions? Everything I find online only covers the basic gain equations (A = 1 + R2/R1 for non-inverting, A = -R2/R1 for inverting, etc.), but nothing explains a "Vout = A*Vin + shift" effect.
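For reference, the two ideal gain equations quoted above can be written out as a small sketch. The resistor values below are hypothetical, picked only to land on the round gains used in the scope captures (10, 100, 1000). These formulas describe an ideal op-amp and contain no offset term at all, which is why they can't account for the shift.

```python
def non_inverting_gain(r1: float, r2: float) -> float:
    """Ideal closed-loop gain of a non-inverting amplifier: A = 1 + R2/R1."""
    return 1.0 + r2 / r1


def inverting_gain(r1: float, r2: float) -> float:
    """Ideal closed-loop gain of an inverting amplifier: A = -R2/R1."""
    return -r2 / r1


if __name__ == "__main__":
    # Hypothetical resistor values in ohms, chosen only to give round gains.
    r1 = 1_000.0
    for r2 in (9_000.0, 99_000.0, 999_000.0):
        print(
            f"R2={r2:>9.0f}  non-inverting A={non_inverting_gain(r1, r2):7.1f}  "
            f"inverting A={inverting_gain(r1, r2):8.1f}"
        )
```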

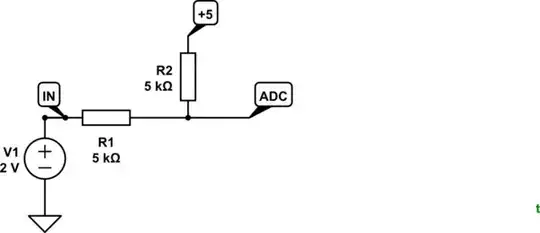

Some more details:

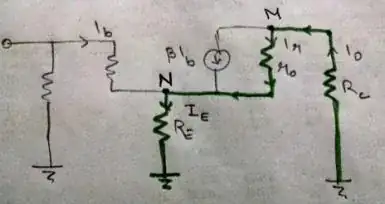

I'm using an MCP6021 rail-to-rail input/output (RRIO) op-amp with ±5 V rails. The original signal is centered at 0 V. To clarify, I'm not referring to a phase offset, but to an offset of the signal's amplitude center. I've tried both the non-inverting and inverting configurations and ran into the same problem with each. The circuit I'm testing with is non-inverting, wired exactly the same as the example in the screenshot. The other screenshots show the oscilloscope at no gain, gain 10, gain 100, and gain 1000, respectively.
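To illustrate the "straight line" symptom, here is a minimal sketch of the "Vout = A*Vin + shift" behavior described above, clipped to the ±5 V rails. The `shift` value, the input amplitude, and the helper names are all hypothetical; the model also assumes the RRIO output swings exactly to the rails, which a real part only approaches. It shows only that once A*Vin + shift exceeds +5 V, the top of the waveform flattens at the rail; it does not explain where the shift comes from.

```python
import math

V_RAIL_POS = 5.0   # +5 V supply, from the post
V_RAIL_NEG = -5.0  # -5 V supply, from the post


def amplifier_output(v_in: float, gain: float, shift: float = 0.0) -> float:
    """Idealized output A*Vin + shift, clipped to the supply rails.

    `shift` is a hypothetical DC term standing in for the observed upward
    offset; the rails are treated as hard limits (an assumption).
    """
    v = gain * v_in + shift
    return max(V_RAIL_NEG, min(V_RAIL_POS, v))


def sample_sine(amplitude: float, n: int = 8) -> list[float]:
    """n evenly spaced samples over one period of a sine centered at 0 V."""
    return [amplitude * math.sin(2 * math.pi * k / n) for k in range(n)]


if __name__ == "__main__":
    # Hypothetical 2 mV input at gain 1000 gives a 2 V swing; with a
    # hypothetical 4 V shift, the upper half clips flat at +5 V.
    v_in = sample_sine(amplitude=0.002)
    out = [amplifier_output(v, gain=1000, shift=4.0) for v in v_in]
    print([round(v, 3) for v in out])
```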