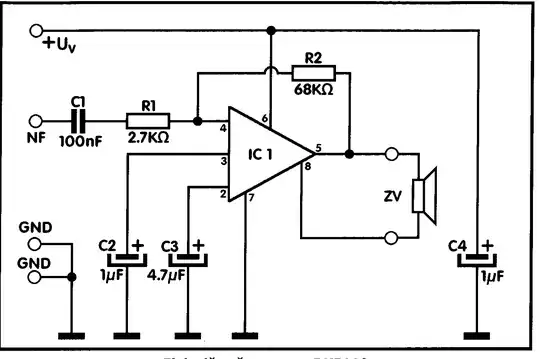

This is a vast subject but, basically, in voltage-mode control the error voltage delivered by the compensator directly sets the duty ratio \$D\$. By doing so, you adjust the output power delivered by your converter according to its dc transfer characteristic, for instance \$V_{out}=DV_{in}\$ for a buck converter:

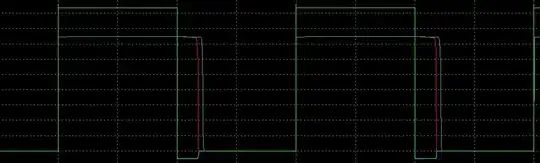

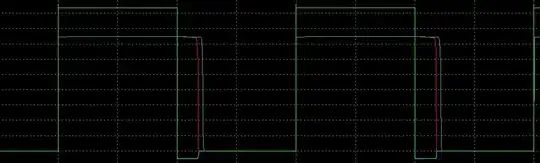

The duty ratio is generated by a pulse-width modulator (PWM) block made of a comparator and an artificial ramp swinging from 0 to a peak value \$V_p\$. When the ramp crosses the error voltage (a flat dc level in theory), the comparator toggles and turns the main transistor off. By changing the dc error voltage (the loop does that by monitoring the deviation of \$V_{out}\$ from its target), you directly adjust the duty ratio and ensure regulation. In this mode, you don't need to consider the inductor current \$i_L(t)\$ to operate the converter. You do implement a current limit, but for safety reasons and not for regulation purposes.
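To make the modulator action concrete, here is a minimal Python sketch of a naive PWM feeding an ideal buck stage. It is only an illustration under simplifying assumptions: the ramp is sampled in discrete steps, the error voltage is a perfectly flat dc level, and the function names (`pwm_duty`, `buck_vout`) and the numerical values are made up for the example.

```python
def pwm_duty(v_err, v_peak, steps=1000):
    """Estimate the duty ratio of a naive PWM.

    The switch is considered on while the sampled sawtooth (0 to v_peak)
    stays below the dc error voltage v_err.
    """
    on_samples = 0
    for k in range(steps):
        ramp = v_peak * k / steps      # artificial ramp over one period
        if ramp < v_err:               # comparator: ramp still below error voltage
            on_samples += 1
    return on_samples / steps


def buck_vout(v_in, duty):
    """Ideal dc transfer characteristic of a buck converter: Vout = D * Vin."""
    return duty * v_in


if __name__ == "__main__":
    v_in, v_peak = 12.0, 2.0           # assumed example values
    for v_err in (0.5, 1.0, 1.5):
        d = pwm_duty(v_err, v_peak)
        print(f"Verr = {v_err:.1f} V -> D = {d:.3f} -> Vout = {buck_vout(v_in, d):.2f} V")
```

In this idealized picture the duty ratio is simply \$V_{err}/V_p\$, clamped between 0 and 1: raising the error voltage pushes the crossing point later in the period and increases \$D\$.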

In current-mode control, it is different. The sawtooth is replaced by the inductor current, which is also a ramp. This current can be sensed with a current transformer or via a resistive shunt, which delivers a voltage image of it. The error voltage now sets the inductor peak current cycle by cycle and adjusts its value according to the operating point: a high peak for a large output power, a low peak in light-load conditions.

In this mode, you control the inductor peak current and, indirectly, the duty ratio \$D\$. Whether you operate the buck converter in voltage- or current-mode control, \$D\$ will be identical between the two converters for a similar operating point. It is only the way this duty ratio is generated that changes between the two. This time, you must monitor the inductor current cycle by cycle, which provides inherent protection to the converter.
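The point that \$D\$ ends up identical can be checked with a rough cycle-by-cycle sketch of peak current-mode control. This is not a real converter model: it assumes ideal components, a constant \$V_{out}\$ (as if held by a large output capacitor), a clocked turn-on with no slope compensation, and a duty ratio below 50 %, where the uncompensated current loop settles. The function name, the sense resistor `r_sense`, and all numerical values are hypothetical.

```python
def peak_current_mode_duty(v_err, r_sense, v_in, v_out, ind, f_sw, cycles=50):
    """Return the duty ratio of each switching cycle for an idealized buck.

    The clock turns the switch on; the switch turns off when the sensed
    inductor current (i_L * r_sense) reaches the error voltage v_err.
    """
    t_sw = 1.0 / f_sw
    i_peak = v_err / r_sense           # peak current set by the error voltage
    up = (v_in - v_out) / ind          # on-time slope of i_L, in A/s
    down = v_out / ind                 # off-time slope of i_L, in A/s
    i_valley = 0.0                     # start from a discharged inductor
    duties = []
    for _ in range(cycles):
        # Time needed to ramp from the valley to the peak, limited to one period
        t_on = min(max((i_peak - i_valley) / up, 0.0), t_sw)
        i_top = i_valley + up * t_on
        # The inductor demagnetizes for the rest of the period (never below zero)
        i_valley = max(i_top - down * (t_sw - t_on), 0.0)
        duties.append(t_on / t_sw)
    return duties


if __name__ == "__main__":
    v_in, v_out = 12.0, 3.3            # assumed operating point
    for v_err in (0.1, 0.2):           # light load versus heavier load
        d = peak_current_mode_duty(v_err, 0.1, v_in, v_out, 10e-6, 500e3)
        print(f"Verr = {v_err:.1f} V -> settled D = {d[-1]:.4f}, Vout/Vin = {v_out / v_in:.4f}")
```

Under these assumptions, changing the error voltage moves the peak current up or down with the load, yet the settled duty ratio stays at \$V_{out}/V_{in}\$, the same value a voltage-mode buck would need at that operating point.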

Below is a quick comparison of the two techniques; each bullet is a subject to expand in itself : ) You can have a look at my last small seminar on the subject: