I have a 10-bit DAC that outputs a voltage between 0 and 3 V. This signal controls a current driver for LEDs. I need to compensate for the "log" behavior of the LEDs; otherwise the first steps look very different from the last ones. More importantly, the very first step (1 LSB of the DAC) is far too bright for the project requirements.

The straightforward option is to increase the resolution of the DAC (e.g. 16-bit) and apply the curve in firmware. I know how to do that, but I'm looking for a different solution.
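For comparison, the firmware route can be sketched as a lookup table that maps each 10-bit input code to a 16-bit DAC code along a normalised exponential. This is a minimal sketch, assuming a hypothetical 16-bit DAC and an assumed curve shape `(exp(k·x) - 1) / (exp(k) - 1)` with a hypothetical curvature parameter `K`; `build_table` is an illustrative name, not part of any real firmware.

```python
import math

# Assumptions (hypothetical): a 16-bit output DAC and a normalised
# exponential brightness curve with curvature K.
IN_BITS = 10
OUT_BITS = 16
K = 5.0  # larger K -> stronger curvature; K close to 0 -> nearly linear


def build_table(k=K, in_bits=IN_BITS, out_bits=OUT_BITS):
    """Return a list mapping every input code to an output DAC code."""
    n_in = (1 << in_bits) - 1    # 1023 for 10 bits
    n_out = (1 << out_bits) - 1  # 65535 for 16 bits
    table = []
    for code in range(n_in + 1):
        x = code / n_in                                    # 0.0 .. 1.0
        y = (math.exp(k * x) - 1.0) / (math.exp(k) - 1.0)  # 0.0 .. 1.0
        table.append(round(y * n_out))
    return table


table = build_table()
# First non-zero step vs. a plain linear 10-bit step (64 counts at 16 bits).
print("first step:", table[1], "counts; linear step would be", 65536 // 1024)
```

With these assumed values the first step is only a couple of 16-bit counts instead of the 64 counts that one 10-bit LSB corresponds to, which is why the extra resolution is needed in the firmware approach.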

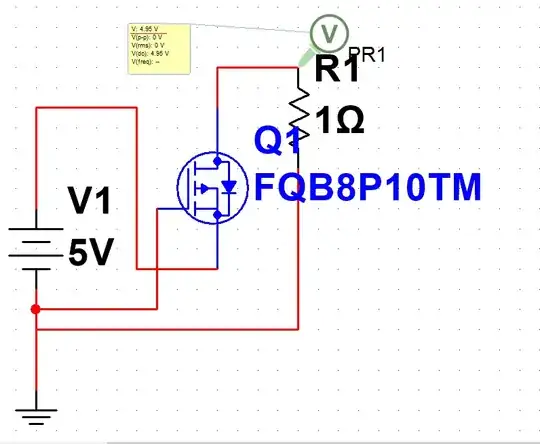

I would like to do the same in hardware, using an op-amp for an anti-log operation. A rough simulation of the basic circuit:

gives me this result:

The region between 0.3 V and 0.7 V is the one I'm looking for:

But there are two problems:

- until the diode begins to conduct, the output is 0 V regardless of the input voltage

- the exponential curve reaches the negative rail too early, long before the input reaches 3 V
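Both problems follow from the ideal equation of an inverting anti-log amplifier (diode from the input to the inverting node, resistor R_F in the feedback path): `Vout = -R_F · I_S · (exp(Vin / (n·V_T)) - 1)`. The sketch below evaluates it, assuming that textbook topology matches the simulated circuit; the component values (`I_S`, `N`, `R_F`) and the -5 V rail are illustrative assumptions, not values from the post, and temperature effects are ignored.

```python
import math

# Illustrative, assumed values (not taken from the original circuit).
I_S = 2.5e-9     # diode saturation current [A]
N = 1.75         # diode ideality factor
V_T = 0.02585    # thermal voltage at about 27 degC [V]
R_F = 10e3       # feedback resistor [ohm]
V_RAIL = -5.0    # negative op-amp output limit [V] (assumed)


def antilog_out(v_in):
    """Ideal inverting anti-log amp output, clipped at the negative rail."""
    i_d = I_S * (math.exp(v_in / (N * V_T)) - 1.0)  # Shockley diode current
    v_out = -R_F * i_d                               # all of it flows through R_F
    return max(v_out, V_RAIL)                        # op-amp cannot go below the rail


for v in (0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 1.0, 3.0):
    print(f"Vin = {v:.1f} V -> Vout = {antilog_out(v):+.4f} V")
```

With these assumed values the output stays within a few millivolts of 0 V up to about 0.2 V and is already clipped at the rail by 0.6 V, so only a narrow slice of the 0 to 3 V DAC range produces a useful exponential.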

Instead, I want a smooth curve whose magnitude increases steadily and reaches the negative rail (or, even better, exactly -3 V) only when the input signal reaches 3 V. This is mandatory in order to use the whole DAC output range.

I tried putting a resistor in series or in parallel with the diode, but (as expected) that just combines the two behaviors.
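For the parallel case the "combination" is literally a sum: the input current is the diode current plus `Vin / R_P`, so the output is a linear term plus an exponential term. This sketch reuses the same assumed diode and feedback values as an ideal anti-log model and adds a hypothetical parallel resistor `R_P`; the series case needs an implicit equation to be solved and is not modelled here.

```python
import math

# Assumed, illustrative values; R_P is a hypothetical parallel resistor.
I_S = 2.5e-9     # diode saturation current [A]
N = 1.75         # diode ideality factor
V_T = 0.02585    # thermal voltage [V]
R_F = 10e3       # feedback resistor [ohm]
R_P = 10e3       # resistor in parallel with the input diode [ohm]
V_RAIL = -5.0    # negative output limit [V] (assumed)


def out_with_parallel_r(v_in):
    """Anti-log amp with a resistor across the input diode."""
    i_diode = I_S * (math.exp(v_in / (N * V_T)) - 1.0)  # exponential part
    i_res = v_in / R_P                                    # linear part
    return max(-R_F * (i_diode + i_res), V_RAIL)


for v in (0.1, 0.3, 0.5, 0.6, 1.0):
    print(f"Vin = {v:.1f} V -> Vout = {out_with_parallel_r(v):+.4f} V")
```

Under these assumptions the low end becomes linear (gain -R_F/R_P), but the exponential term still drives the output into the rail at roughly the same input voltage as before, which matches the observation that the resistor only adds the two behaviors together.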

How should I change my circuit to achieve this transfer function?

Ideally, I would like to be able to fine-tune how far the curve deviates from linear. That's easy to do in firmware, but I'm trying to do it in hardware.
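One way to state the desired transfer function precisely is a normalised exponential that passes through (0 V, 0 V) and (3 V, -3 V), with a single curvature parameter `k` controlling how far it bends away from the straight line. This is a hypothetical target curve for illustration only (`target_vout` and `k` are not from the post); it describes the goal, not a circuit that achieves it.

```python
import math

V_FS = 3.0  # DAC full-scale voltage [V], from the post


def target_vout(v_in, k):
    """Hypothetical target: 0 V -> 0 V, 3 V -> -3 V, curvature set by k."""
    x = v_in / V_FS                  # normalised input, 0..1
    if abs(k) < 1e-9:
        y = x                        # limit k -> 0 is a straight line
    else:
        y = math.expm1(k * x) / math.expm1(k)  # normalised exponential
    return -V_FS * y


lsb = V_FS / 1024  # one 10-bit step, assuming Vout = Vref * code / 1024
for k in (0.0, 2.0, 5.0):
    first_step_mv = target_vout(lsb, k) * 1e3
    print(f"k = {k}: first step {first_step_mv:.3f} mV, "
          f"Vin = 1.5 V -> {target_vout(1.5, k):.3f} V")
```

Larger `k` shrinks the first step and pushes most of the output swing toward the top of the DAC range, while both endpoints stay fixed, which is the "tunable deviation from linear" behavior described above.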