I have an analog circuit that takes a ±15 V DC supply and some input signals, processes the signals, and outputs an analog signal via an op-amp. I would like to limit the output voltage to ±10 V to protect circuitry downstream. I am considering using a rail-to-rail op-amp supplied with ±10 V to do this. Is there any reason not to do it this way? Or should I just stick with diodes?

Sorry if this is a duplicate question. I searched the site, but couldn't find this exact question.

Update:

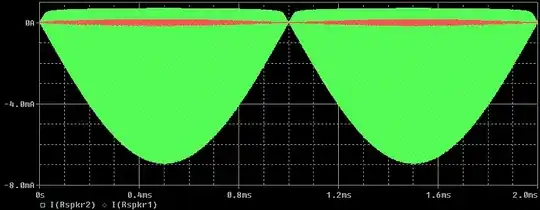

Thanks for all the great responses. At least one person asked for a schematic. Here is a partial schematic showing the last part of the circuit (there could be IP in the rest of the schematic). w123 is the signal input. It is averaged by an active RC filter and sent to three op-amps with different gains (final amplification to be determined; it may be 1x-100x). The idea is that we can select an appropriate gain based on the signal (kind of like selecting a magnification on a microscope): when the signal is low, the high-gain output can be used; when it is high, the low-gain output can be used. I don't plan on using the signal close to the rails; I just need to limit it to protect a multiplexer and an ADC downstream (partial schematic below).
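To make the gain-selection idea concrete, here is a minimal Python sketch of the intended behavior. It is only an idealized model, not the real circuit: the gains of 1x, 10x and 100x are placeholder assumptions (the final values are still to be determined), the 90 % headroom figure is arbitrary, and `clamp`, `stage_outputs` and `select_gain` are hypothetical helper names. The limiter is modeled as a perfect clip at ±10 V, which a real rail-to-rail op-amp or diode clamp will only approximate.

```python
# Idealized model of three gain stages with a +/-10 V output limit.
# All numeric values below are assumptions for illustration only.
GAINS = (1.0, 10.0, 100.0)   # hypothetical stage gains (real ones TBD)
V_LIMIT = 10.0               # desired output limit in volts
HEADROOM = 0.9               # arbitrary: use at most 90 % of the limit


def clamp(v, limit=V_LIMIT):
    """Ideal limiter: clip v to the range [-limit, +limit]."""
    return max(-limit, min(limit, v))


def stage_outputs(v_in, gains=GAINS, limit=V_LIMIT):
    """Output of every gain stage for the filtered input v_in, after limiting."""
    return {g: clamp(v_in * g, limit) for g in gains}


def select_gain(v_in, gains=GAINS, limit=V_LIMIT, headroom=HEADROOM):
    """Pick the highest gain whose unclipped output stays within the headroom.

    Falls back to the lowest gain when even that stage would exceed it.
    """
    usable = [g for g in gains if abs(v_in * g) <= headroom * limit]
    return max(usable) if usable else min(gains)


if __name__ == "__main__":
    for v in (0.05, 0.5, 5.0, 12.0):
        g = select_gain(v)
        print(f"v_in={v:5.2f} V -> gain {g:5.1f}x, output {stage_outputs(v)[g]:6.2f} V")
```

In this model a small input is read from the high-gain stage and a large input from the low-gain stage, while the stages that are not selected simply sit at the ±10 V limit, which is the condition the multiplexer and ADC need to tolerate.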