I've been scratching my head trying to find a solution to what I imagine should be a fairly simple problem. I used Ohm's law to calculate what I believe should be the correct resistor value for a simple LED circuit, yet the LED barely lights up, and the current through it is far lower than I calculated.

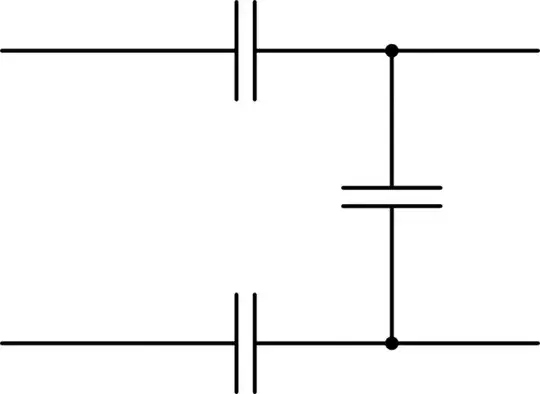

I have a random LED with no datasheet or spec information. I used a potentiometer connected to 5.3V as a voltage divider, which connects to the LED like so:

[Schematic created using CircuitLab]

I gradually turned the potentiometer knob, slowly increasing the voltage from 0V until I got a nice, comfortable brightness at 2.0V across the LED.

Question 1): Is the voltage across the LED at this point called the "forward voltage"? If so, is this an accurate way to measure it, assuming I'm happy with the brightness the LED produces?

At 2.0V across the LED, the current through the LED is 10mA (0.010A). Now I have two known values: the 5.3V that I will be powering the LED with, and the 10mA target current through the LED. I then calculate the resistor I need using Ohm's law: (5.30V / 0.010A = 5,300R).

Question 2): Is 5,300 Ohm the correct resistor value to get 10mA through the LED when it's powered from 5.3V?

The closest resistor I have to this value is 5,100 Ohm. I recalculated with this resistor to make sure the current is close to the desired current: (5.30V / 5,100R = 0.010A). Looks good to me so far.

HOWEVER... When I run the LED at 5.3V with a 5,100 Ohm resistor, I only get a current of 0.64 mA through the LED, and the light is very dim.

Question 3): Why is this happening, and what am I doing wrong?
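
For reference, here is a minimal Python sketch of the numbers above. It assumes the usual series-resistor model, in which the resistor only drops the supply voltage minus the LED's forward voltage. The function names are made up for illustration, and treating the LED voltage as a fixed 2.0V is an approximation, since it falls somewhat at lower currents.

```python
# Values taken from the measurements described in the question.
V_SUPPLY = 5.3     # volts, supply voltage
V_LED = 2.0        # volts, measured across the LED at the chosen brightness
I_TARGET = 0.010   # amps, LED current measured at that brightness
R_CHOSEN = 5100.0  # ohms, the resistor actually fitted


def series_resistor(v_supply, v_led, i_target):
    """Resistor that drops (v_supply - v_led) while carrying i_target."""
    return (v_supply - v_led) / i_target


def series_current(v_supply, v_led, resistance):
    """Current through a resistor in series with an LED assumed to sit at v_led."""
    return (v_supply - v_led) / resistance


# Plain division of the supply voltage by the target current, as in the question.
r_plain = V_SUPPLY / I_TARGET

# Series model: only the voltage left over after the LED appears across the resistor.
r_needed = series_resistor(V_SUPPLY, V_LED, I_TARGET)
i_expected = series_current(V_SUPPLY, V_LED, R_CHOSEN)

print(f"5.30V / 0.010A         = {r_plain:.0f} ohm")
print(f"Series resistor, 10mA  = {r_needed:.0f} ohm")
print(f"Current with 5,100 ohm = {i_expected * 1000:.2f} mA")
```

Under these assumptions, the series model predicts roughly 0.65 mA with the 5,100 Ohm resistor, which is close to the 0.64 mA measured, and it suggests a resistor of about 330 Ohm for 10mA. The plain division 5.30V / 0.010A also evaluates to 530 Ohm rather than 5,300 Ohm.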