Is this assumption sound? Is it a frequent practice? Or is it an awful idea?

It's a sound idea and frequent practice.

It needs modifying here, though. The STWD100NYWY3F Watchdog Timer (WDT) has a 32..100 kilohm pull-down resistor on the /EN pin that you want to drive, and that must be taken into account. It can also be used to your advantage, though, by forming half of a potential divider that drops some of the voltage.

You have a 5 V source signal and a 3.3 V /EN input pin.

I'll assume that the source signal comes from a logic gate which drops 0.2 V max. (0.1 V plus margin) at the sub-100 µA load it'll have. That covers all 5 V TTL families from mid-1980s HCT onwards.

I'll also assume that the 5 V supply regulator has a tolerance of 5% (0.25 V), which cancels out the logic gate drop, so the max. source signal voltage is 5.05 V and the min. is 4.55 V.

The /EN input must be at least 0.7 VCC to register as high. I'll assume a 3.3 V supply regulator tolerance of 5%, giving a VEN(min) of 2.43 V (0.7 × 3.465 V) when VCC is +5%.
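As a quick check of the arithmetic above, here is a minimal Python sketch that reproduces the three worst-case figures. The constant and function names are mine, for illustration only, and it follows the assumptions stated above (5% on both rails, and the 0.2 V gate drop applied at both extremes of the 5 V rail).

```python
# Worst-case voltage bounds, using only the assumptions stated in the text.
V5_NOM = 5.0          # nominal 5 V rail
V33_NOM = 3.3         # nominal 3.3 V rail (WDT VCC)
RAIL_TOL = 0.05       # assumed 5% regulator tolerance on both rails
GATE_DROP = 0.2       # assumed max. high-level output drop of the logic gate
VIH_RATIO = 0.7       # /EN must be at least 0.7 * VCC to read as high


def source_bounds():
    """Return (min, max) source signal voltage with the gate drop applied."""
    v_min = V5_NOM * (1 - RAIL_TOL) - GATE_DROP
    v_max = V5_NOM * (1 + RAIL_TOL) - GATE_DROP
    return v_min, v_max


def ven_min():
    """Return the highest input-high threshold, i.e. when VCC is at +5%."""
    return VIH_RATIO * V33_NOM * (1 + RAIL_TOL)


if __name__ == "__main__":
    lo, hi = source_bounds()
    print(f"source: {lo:.2f} V .. {hi:.2f} V")   # 4.55 V .. 5.05 V
    print(f"VEN(min): {ven_min():.2f} V")        # 2.43 V
```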

However, I'm not considering the series resistor tolerance. You can take the calculation further based on whatever tolerance preferences you or your employer have.

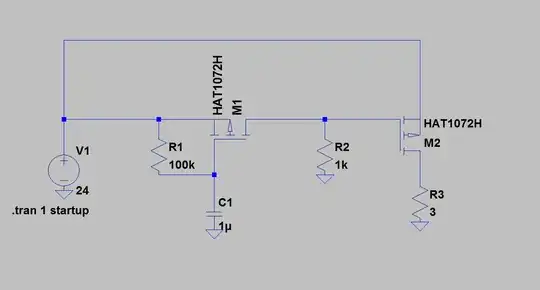

Therefore, you can use a series resistor and a clamp diode, as shown in the schematic.

When RinEN is 33K, using a 27K series resistor would drop 4.55 V to 2.5 V. That's good.

When RinEN is 100K, that 27K series resistor would drop 5.05 V to only 3.98 V. However, a BAT81 diode's 0.2 V drop will clamp that to VCC + 0.2 V, which is within the /EN voltage range.
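The two divider cases can be reproduced with a short Python sketch. It is only an illustration: the function and variable names are mine, the resistors are treated as ideal (no tolerance), the diode is modelled as a fixed 0.2 V drop to a nominal 3.3 V VCC, and the 2.43 V threshold is the VEN(min) figure worked out earlier.

```python
def divider_out(v_src, r_series, r_pulldown):
    """Unloaded output of a series resistor feeding a pull-down to ground."""
    return v_src * r_pulldown / (r_series + r_pulldown)


R_SERIES = 27e3        # series resistor chosen in the answer
VCC_NOM = 3.3          # nominal WDT supply (assumed for the clamp level)
DIODE_DROP = 0.2       # BAT81 forward drop as used in the answer
VEN_MIN = 2.43         # 0.7 * VCC at VCC +5%, from the earlier calculation

# Case 1: lowest source voltage into the 33K pull-down value used above.
v_low = divider_out(4.55, R_SERIES, 33e3)

# Case 2: highest source voltage into the largest pull-down (100K).
v_high_unclamped = divider_out(5.05, R_SERIES, 100e3)
v_high = min(v_high_unclamped, VCC_NOM + DIODE_DROP)   # diode clamps to VCC + 0.2 V

if __name__ == "__main__":
    print(f"low case:  {v_low:.2f} V (needs >= {VEN_MIN} V)")      # about 2.50 V
    print(f"high case: {v_high_unclamped:.2f} V before the clamp")  # about 3.98 V
    print(f"high case: {v_high:.2f} V after the clamp")             # 3.50 V
```

To include resistor tolerance, the same function can be called with the series resistor at its upper limit for the low case and at its lower limit for the high case.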
