What is the difference between the quantization noise and quantization error in ADC?

My understanding is that quantization error is what you get when you convert analog to digital, and quantization noise is what you get when you convert from digital to analog.

The quantization noise is an abstraction, meant to represent the quantization error as a signal (so it can be compared to other forms of noise).

You consider the quantization noise as the difference between the (real) quantized signal and the (ideal) sampled one. Because of the loss of information due to quantization, a signal that is A/D and then D/A converted will show an additional noise due to quantization.

A situation in which using quantization noise is useful is when determining the quantization depth (number of levels/bits) of a signal. By comparing the quantization noise to the other noise sources, it's possible to determine the maximum reasonable number of levels for the quantization, because additional bits would be absorbed by noise.
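As a rough sketch of that comparison, the snippet below assumes an ideal converter whose quantization error is uniformly distributed over one step, so its rms value is the step size divided by sqrt(12). The 2.5 V range and the 200 uV rms of other noise are made-up numbers for illustration, and `useful_bits` is a hypothetical helper, not a standard function.

```python
import math

def quantization_noise_rms(v_range, bits):
    """RMS quantization noise of an ideal converter, assuming the error is
    uniformly distributed over one step: step / sqrt(12)."""
    step = v_range / 2 ** bits
    return step / math.sqrt(12)

def useful_bits(v_range, other_noise_rms, max_bits=24):
    """Smallest bit depth whose quantization noise drops below the other
    noise sources; bits beyond this mostly digitize noise."""
    for bits in range(1, max_bits + 1):
        if quantization_noise_rms(v_range, bits) < other_noise_rms:
            return bits
    return max_bits

# Assumed example values: 2.5 V input range, 200 uV rms of other noise.
bits = useful_bits(2.5, 200e-6)
print(bits, quantization_noise_rms(2.5, bits))
```

Where exactly to stop is a judgment call; some designs keep a bit or two of margin below the noise floor so that averaging can recover some extra resolution.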

This of course assumes that the sampling theorem (the Nyquist criterion) is respected.

A very important aspect of quantization noise that has not yet been mentioned is that, unlike some types of noise, it cannot in general be removed by filtering. However, adding the right sort of noise to a signal before it is sampled can change the character of the quantization noise in such a way that much of it can be removed.

For example, suppose one were to repeatedly measure the length of a board to the nearest inch. The first measurement comes out exactly 53", implying that the board is almost certainly somewhere between 52.5" and 53.5" [if there's some measurement slop, it might be something like 52.499" or 53.501"]. The next measurement also comes out to 53", as do the third, fourth, fifth, and a hundred more. One could measure the board a million times, and not really know anything more about its length than one did after a single measurement.

Suppose that instead of making many identical "accurate" measurements, one were to line up the measuring tape rather sloppily, but in such a fashion as to be free of bias (so that on average the tape would be correctly positioned). The first measurement might come out to 52", suggesting that the board is probably between 50" and 54". Not as informative as one precise measurement. The next measurement, however, might come out to 54", suggesting that the board is probably somewhere between 52" and 56". Putting the two measurements together would suggest that it's probably between 52" and 54". Still not as good as a precise measurement, but getting better.

The key observation is that if the random slop one has added has the proper uniform distribution and is free of bias, and the total of 100 measurements is 5,283", that would suggest that the board is probably somewhere around 52.83" long. In practice, random slop will often have some unwanted bias, so the average of a thousand measurements may not be any better than the average of 100, but it would likely be better than the average of any number of "precise" measurements that all report 53".
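A small simulation makes the board example concrete. This is only a sketch under stated assumptions: the tape reads to the nearest inch, the slop is ideal (uniform over exactly one inch and unbiased), and the "true" length of 52.83" and the function names are invented for the illustration.

```python
import math
import random

def measure(true_length, slop=0.0):
    """Read the tape to the nearest inch after shifting it by `slop` inches."""
    return math.floor(true_length + slop + 0.5)

def average_reading(true_length, n, rng, dithered):
    """Average of n readings, with or without unbiased uniform slop."""
    total = 0
    for _ in range(n):
        slop = rng.uniform(-0.5, 0.5) if dithered else 0.0
        total += measure(true_length, slop)
    return total / n

rng = random.Random(1)   # fixed seed so the run is repeatable
true_length = 52.83      # assumed true length, in inches
print(average_reading(true_length, 100_000, rng, dithered=False))  # every reading is 53
print(average_reading(true_length, 100_000, rng, dithered=True))   # close to 52.83
```

Without the slop, every reading is identical and averaging gains nothing. With it, each individual reading is worse, but the average creeps toward the true length as more readings are taken.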

If one knew one were taking ten measurements and could add bias systematically, the optimal approach would be to add 0.05" to the first measurement, 0.15" to the second, 0.25" to the third, etc. up to 0.95" for the tenth, and then always round down to the next lower inch rather than rounding to the nearest. The average of all those measurements would then equal the actual length, accurate to within +/- 0.05". In practice, it's often difficult to be so systematic. If one didn't know how many measurements one were going to take, and had no way of knowing whether a given measurement was the first, second, third, etc., one could randomly pick an integer uniformly from 0-999 and shift the measuring device by that many thousandths of an inch before each measurement. That would cause a single measurement to have an error of +/- 1.0" rather than +/- 0.5", but the average of repeated measurements would converge on the correct value. Unfortunately, that approach isn't quite so simple as it sounds.
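The systematic ten-measurement scheme is easy to check numerically. The sketch below assumes an ideal tape that always rounds down to the whole inch; `stepped_average` is a hypothetical helper name and 52.83" is an assumed true length.

```python
import math

def stepped_average(true_length, n=10):
    """Average of n readings, the k-th shifted by (k + 0.5)/n inch
    (0.05", 0.15", ..., 0.95" for n = 10) and then rounded down."""
    readings = [math.floor(true_length + (k + 0.5) / n) for k in range(n)]
    return sum(readings) / n

print(stepped_average(52.83))  # two readings of 52 and eight of 53
```

For this board, two of the ten shifted readings come out as 52" and eight as 53", so the average is 52.8", which is within 0.05" of the assumed 52.83".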

In particular, the added-uniform-error approach works well if the added error is uniform and precisely spans the space between possible values. If it doesn't, it's far less helpful. For example, suppose that one were actually adding an amount that was uniformly distributed between 0.1" and 0.9". In that case, values whose fractional part was between 0.0" and 0.1" would never get rounded up to the next inch, while those whose fractional part was greater than 0.9" would always be rounded up. Such values could not be resolved any finer than the nearest 0.1" no matter how many measurements one were to take. If the added value ranged from -0.1" to 1.1", the situation wouldn't be quite as bad, but a value whose fractional part was exactly 0.1" would on average round up two times out of twelve rather than one time out of ten (so it would appear to be 0.167"), and a value whose fractional part was 0.9" would round up ten times out of twelve rather than nine out of ten (appearing to be 0.833").
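These figures can be reproduced exactly rather than by simulation. The sketch below assumes the same model as the text (add a uniformly distributed amount, then round down) and computes the long-run average reading for a given fractional part; `expected_floor` is a hypothetical helper, and exact fractions are used only to avoid floating-point edge cases.

```python
from fractions import Fraction as F
import math

def expected_floor(frac, lo, hi):
    """Exact mean of floor(frac + d) for d uniform on [lo, hi]."""
    a, b = frac + lo, frac + hi
    total = F(0)
    for k in range(math.floor(a), math.floor(b) + 1):
        overlap = min(b, k + 1) - max(a, k)  # length of [a, b] lying in [k, k+1)
        total += k * overlap
    return total / (b - a)

# Ideal case: added amount spans exactly one inch.
print(float(expected_floor(F(1, 10), F(0), F(1))))            # 0.1
# Too narrow (0.1" to 0.9"): small fractions never round up, large ones always do.
print(float(expected_floor(F(1, 20), F(1, 10), F(9, 10))))    # 0.0
print(float(expected_floor(F(19, 20), F(1, 10), F(9, 10))))   # 1.0
# Too wide (-0.1" to 1.1"): the average is pulled toward the middle.
print(float(expected_floor(F(1, 10), F(-1, 10), F(11, 10))))  # 0.1666...
print(float(expected_floor(F(9, 10), F(-1, 10), F(11, 10))))  # 0.8333...
```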

Some "noise shaping" technologies use various approaches to generate an error signal which can be added to an input before sampling in such a way as to ensure that the average measured value will in fact equal the real value. The actual techniques used, however, will vary considerably with the application.

Quantization noise relates to AC signals - when a signal is digitized and then converted back to analogue, the digital steps that correspond to the digitization process can be seen on the analogue signal like a staircase running up and down the signal. This is an example of how q-noise can be regarded.

Q-error relates to the fact that an ADC cannot resolve an analogue signal closer than the nearest digital step. If it's a 12-bit ADC with an input voltage range of 0 to 2.5V the minimum step size it can resolve (in the analogue world) is 610uV i.e. 2.5V/2^12. This is a measurement error.
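The arithmetic can be sketched in a few lines. This assumes an ideal ADC that simply truncates to the step below the input; real converters differ in where they place the transition points, and the function names and the 1.0 V test input are made up for the example.

```python
def lsb_size(v_range, bits):
    """Smallest analogue step an ideal converter can resolve, in volts."""
    return v_range / 2 ** bits

def quantize(v, v_range, bits):
    """Return (code, error in volts) for an assumed truncating ideal ADC."""
    lsb = lsb_size(v_range, bits)
    code = min(int(v / lsb), 2 ** bits - 1)  # clamp at the top code
    return code, v - code * lsb

print(f"{lsb_size(2.5, 12) * 1e6:.0f} uV")  # 610 uV
code, error = quantize(1.0, 2.5, 12)
print(code, f"{error * 1e6:.0f} uV")
```

Whatever the input, the error in this model stays below one step, which is the measurement error described above.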

Both are related to step size and both are improved by using ADCs with greater resolution.

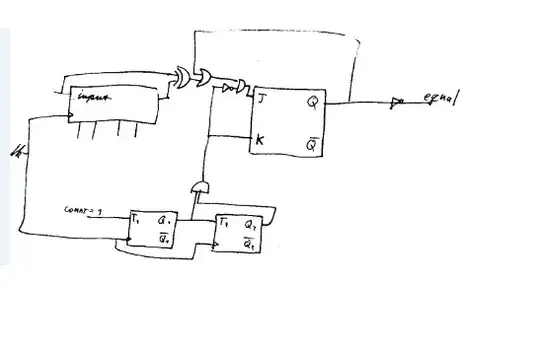

Here is a quickly drawn plot of a signal, the ADC steps, and the residual error (AKA residue - sawtooth waveform)

The quantization error is the residual error shown below, i.e. it answers the question of what the difference is between the signal and its quantized value. It is interesting to note that this error can at times be precisely zero. This happens when the ADC representation is at the precise level of the signal (shown in integer values below): at these points the blue line crosses the red step and the residue is zero.

The quantization noise is a statistical description of the quantization error (residue): because the signal lands at an effectively random position within each step, the residue can be treated as a random variable, uniformly distributed over one step. It takes the form \$ \langle Q_n \rangle = \frac{Q}{\sqrt{12}} \$, where Q is the step size or DN of the ADC, \$ Q = \frac{V_{range}}{2^N} \$, AKA the LSB. The actual error ranges over \$ -\frac{Q}{2} < Error < \frac{Q}{2} \$. The angle brackets \$ \langle \, \rangle \$ mean the expectation or predicted (rms) value, i.e. a noise term.
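As a sanity check on the step-over-sqrt(12) figure, the sketch below pushes a slow ramp through an assumed ideal rounding quantizer and computes the rms of the sawtooth residue directly. The 12-bit, 2.5 V numbers are borrowed from the other answer purely as example values, and `residue_rms` is a hypothetical helper.

```python
import math

def residue_rms(step, n_points=100_000, span_steps=8):
    """RMS of (input - quantized input) for a ramp crossing several steps."""
    total = 0.0
    for i in range(n_points):
        v = (i + 0.5) / n_points * span_steps * step  # ramp from 0 to span_steps * step
        q = math.floor(v / step + 0.5) * step          # round to the nearest level
        total += (v - q) ** 2
    return math.sqrt(total / n_points)

step = 2.5 / 2 ** 12  # example step size in volts
print(residue_rms(step), step / math.sqrt(12))
```

The two printed values agree closely, while the residue itself never exceeds half a step in either direction.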

The drawing below is ONLY illustrative and not accurate.