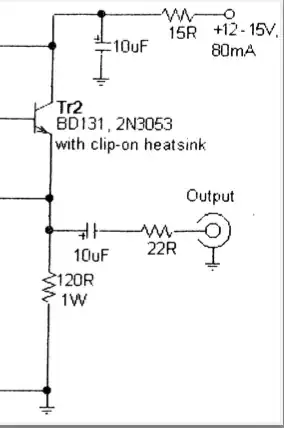

This circuit has a 15 ohm resistor in series with the 12-15 V power supply line, just before the 10uF decoupling capacitor. (It is from an RF preamp design in "LF Today," a book for radio amateurs using frequencies below 1 MHz.)

I believe the resistor's purpose is to protect the power supply against an initial surge of current charging the capacitor. Is this correct?

This resistor would limit the maximum draw from the power supply to no more than 1 A under any circumstances (V/R gives 0.8 A at 12 V and 1 A at 15 V). The cost is a drop in supply voltage of about 1.2 V in this case (15 ohms × 80 mA, using the 80 mA load current shown on the schematic).
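The figures above follow directly from Ohm's law. As a minimal sketch, the Python below reproduces them from the schematic values (15 ohms, 12-15 V, 80 mA). It assumes an ideal supply with zero internal resistance, so the "worst case" current is an upper bound. The dissipation function is an addition not in the original question; it shows the resistor only needs a modest power rating in steady state.

```python
R_SERIES = 15.0   # ohms, the series resistor in the schematic
I_LOAD = 0.080    # amps, the 80 mA load current shown on the schematic


def worst_case_current(v_supply: float, r_series: float) -> float:
    """Largest current the supply can be asked for: the load side of the
    resistor pulled to 0 V (e.g. an empty capacitor at power-on), I = V / R.
    Assumes an ideal supply with no internal resistance of its own."""
    return v_supply / r_series


def series_drop(i_load: float, r_series: float) -> float:
    """Steady-state voltage lost across the resistor, V = I * R."""
    return i_load * r_series


def resistor_dissipation(i_load: float, r_series: float) -> float:
    """Steady-state power heating the resistor, P = I**2 * R."""
    return i_load ** 2 * r_series


if __name__ == "__main__":
    for v in (12.0, 15.0):  # the two ends of the 12-15 V supply range
        print(f"{v:.0f} V supply: at most {worst_case_current(v, R_SERIES):.2f} A")
    print(f"drop at {I_LOAD * 1e3:.0f} mA: {series_drop(I_LOAD, R_SERIES):.2f} V")
    print(f"resistor dissipation: {resistor_dissipation(I_LOAD, R_SERIES) * 1e3:.0f} mW")
```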

I want to know how important it is, as a practical matter, to include this series resistor. 10uF is not a huge value. In fact, I learned in the question "USB powered device with multiple Decoupling Capacitors" that 5 V USB power supplies are expected to tolerate that much capacitance in connected devices.

If I were connecting this to a big 12 V lead-acid battery, I think I would be justified in leaving it out altogether. But if I were to use an inexpensive 12 V "wall wart" supply, how likely is it that the supply would be damaged by the inrush current into the 10uF capacitor?
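The inrush can be estimated with the standard RC charging model: an empty capacitor charged from an ideal voltage step through a total resistance R draws i(t) = (V/R)·e^(−t/RC). The sketch below compares the 15 ohm case with a *hypothetical* 0.5 ohm total resistance. That value is an illustrative assumption standing in for the supply's output impedance, the capacitor's ESR and the wiring when the resistor is left out; the real figure depends on the particular wall wart. The model also ignores any soft-start behaviour in the supply. The charge (C·V) and the energy finally stored (C·V²/2) are the same either way. The resistor only changes how high and how brief the current pulse is.

```python
import math

C_DECOUPLE = 10e-6  # farads (the 10uF decoupling capacitor in the schematic)


def inrush_current(v_step: float, r_total: float, c: float, t: float) -> float:
    """Current into an initially discharged capacitor charged through r_total
    from an ideal voltage step: i(t) = (V / R) * exp(-t / (R * C))."""
    return (v_step / r_total) * math.exp(-t / (r_total * c))


def time_constant(r_total: float, c: float) -> float:
    """RC time constant; the current falls to about 37% of its peak after one tau."""
    return r_total * c


def stored_energy(c: float, v: float) -> float:
    """Energy in the fully charged capacitor, E = C * V**2 / 2 (independent of R)."""
    return 0.5 * c * v ** 2


if __name__ == "__main__":
    v = 12.0
    # 15.0: the series resistor alone (source impedance ignored).
    # 0.5: a HYPOTHETICAL stand-in for supply impedance + ESR + wiring
    #      when the resistor is omitted; not a measured value.
    for label, r in (("with 15 ohm resistor", 15.0), ("without (assumed 0.5 ohm)", 0.5)):
        peak = inrush_current(v, r, C_DECOUPLE, 0.0)
        tau = time_constant(r, C_DECOUPLE)
        print(f"{label}: peak {peak:.2f} A, tau {tau * 1e6:.1f} us")
    print(f"energy stored at {v:.0f} V: {stored_energy(C_DECOUPLE, v) * 1e3:.2f} mJ")
```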

Is this series resistor overkill, or is it generally accepted good design to always include a series resistor when using a decoupling capacitor in such circuits?