TL;DR: no, there's no such scheme that we wouldn't already be using. The reasons are below.

Information theory tells us that we transmit the fewest bits (and transmitting fewer bits takes less energy than transmitting more) if we first use source coding to compress the input data, which makes 0 and 1 equally likely.

The job of channel coding is to then take these equally likely bits and find a transmission scheme that is optimal for the end-to-end system – typically optimal as in least bit error rate for a given transmit power, or least power needed for a fixed bit error rate. There can be many other parameters to take into consideration, but these are the main things we usually look at when we optimize channel coding for long-haul high-rate communications, which use the most power.

So, what you propose is "already done", and there's 80 years of extensive theory and practice in communications engineering going into it.

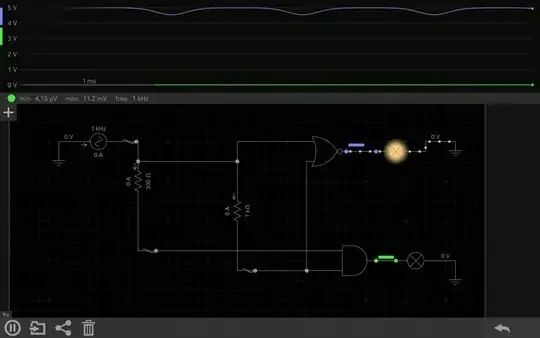

For example, we know that schemes that transmit nothing for one bit value and something for the other are, in almost all cases, power-inefficient. The medium of transmission is an electromagnetic wave, be it the radio interface of your phone, the field between the wires in a twisted pair, or the optical fiber for >= 100 Gbit/s links. And these waves have a phase, which allows us to transmit, say, amplitudes of -0.5/+0.5 instead of 0.0/1.0, and get the same "distance" between noisy received symbols at the receiver. However, the average power used by the first scheme is \$0.5^2=\frac14\$, whereas in the second case it's \$\frac12\left(0^2+1^2\right)=\frac12\$. This BPSK (binary phase-shift keying) vs OOK (on-off keying) example serves to illustrate that there's beauty in making things symmetrical, and once you do, you lose the "bit that has lower energy" argument altogether.
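The BPSK vs OOK numbers above can be checked with a few lines of Python. This is only a minimal sketch: the helper names `average_power` and `symbol_distance` are made up for illustration, and it assumes both bit values are equally likely.

```python
import math

# Amplitudes from the text; names are illustrative only.
BPSK = [-0.5, +0.5]
OOK = [0.0, 1.0]

def average_power(amplitudes):
    """Mean of amplitude^2, assuming all symbols are equally likely."""
    return sum(a * a for a in amplitudes) / len(amplitudes)

def symbol_distance(amplitudes):
    """Distance between the two symbols of a binary scheme."""
    return abs(amplitudes[1] - amplitudes[0])

for name, scheme in (("BPSK", BPSK), ("OOK", OOK)):
    print(name, "distance:", symbol_distance(scheme),
          "average power:", average_power(scheme))

# Power ratio in dB between the two schemes at identical symbol distance
ratio_db = 10 * math.log10(average_power(OOK) / average_power(BPSK))
print(f"OOK needs about {ratio_db:.2f} dB more average power")
```

Both schemes have the same symbol distance of 1, but OOK needs twice the average power, i.e. roughly 3 dB more.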

Now, there's not only symbol sets that have a constant power; on the contrary, in high-rate communications, we do use sets that have very high ranges of different powers. However, if you start "shaping" the probability distribution of these symbols, you run into a problem:

Say you had a constellation with 1024 different possible transmit symbols (1024-QAM, for example). If you simply take 10 input bits and pick the symbol with that number, your single symbol transports 10 bits of information! Easy. That also means every symbol is equally likely, as every 10-bit sequence is equally likely.

Now, you come along and say you want to optimize for power, so the higher-amplitude symbols should occur less often than the lower-amplitude ones. Turns out that under that condition, each symbol no longer carries 10 bits; 10 bits per symbol is the maximum you can get across with \$2^{10}=1024\$ symbols, and that happens when you choose the probabilities of all the symbols identically.

So, to transmit the same, say, 1 million bits, where in the equidistributed scheme you needed 100 thousand symbols, you now need more. How much more depends on how exactly you shape the probability¹.
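To get a feeling for the trade-off, here is a rough sketch in Python. It assumes a square 32×32 grid for the 1024-QAM constellation and an exponential (Maxwell-Boltzmann-style) weighting \$P(x_i)\propto e^{-\lambda |x_i|^2}\$; the grid scaling, the \$\lambda\$ values and all function names are arbitrary choices for illustration, not something prescribed above.

```python
import math

def qam_points(side=32):
    """Square QAM grid with odd-integer levels, e.g. -31, -29, ..., 31."""
    levels = [2 * k - (side - 1) for k in range(side)]
    return [(i, q) for i in levels for q in levels]

def shaped_probabilities(points, lam):
    """P(x) proportional to exp(-lam * |x|^2); lam = 0 gives the uniform case."""
    weights = [math.exp(-lam * (i * i + q * q)) for i, q in points]
    total = sum(weights)
    return [w / total for w in weights]

def entropy_bits(probs):
    """Entropy in bit per symbol: upper bound on information per symbol."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def average_power(points, probs):
    """Probability-weighted mean of |x|^2."""
    return sum(p * (i * i + q * q) for (i, q), p in zip(points, probs))

points = qam_points()
payload_bits = 1_000_000

for lam in (0.0, 0.002, 0.005):  # assumed shaping strengths
    probs = shaped_probabilities(points, lam)
    h = entropy_bits(probs)
    power = average_power(points, probs)
    print(f"lambda={lam}: {h:.3f} bit/symbol, "
          f"average power {power:.1f}, "
          f"at least {payload_bits / h:.0f} symbols for the payload")
```

The stronger the shaping, the lower the average power per symbol, but the fewer bits each symbol can carry, so the symbol count for the same payload goes up.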

So, to be more power-efficient per transmitted symbol, you need to transmit more symbols!

It gets worse: at the receiver, a decision has to be made about which symbol you've sent. This gets significantly more involved when the symbols are not equally likely. Receiver signal processing and channel decoding contribute significantly to communication power demand. By significant, I mean that easily up to half of the overall system consumption is spent in the receiver, not the transmitter, even though it's the transmitter that has to bring the symbols physically onto the transmission channel!

So, this is a path that usually leads nowhere.

It does lead somewhere if your channel is not nice and linear, and higher signal powers lead to more distortion. This is what we see in highest-rate (think 400 Gbit/s upwards) fiber links, where you'll find probabilistic shaping used to maximize the mutual information between transmitter and receiver. It really doesn't apply to simpler use cases today, and the community has been pretty good at mathematically proving that the situations where it does yield a gain are really not these use cases with lower data rates.

¹ We actually have formulas to describe that: the maximum you could get out of a source \$X\$ with such shaped symbol set probability \$(P(x_i))_{i=1,\ldots,1024}\$ is the source's entropy:

$$H(X) = -\sum_{i=1}^{1024} P(x_i) \log_2(P(x_i))$$

With a bit of analysis you'll find that this has a global maximum for \$P(x_1)=P(x_2)=\ldots=\frac1{1024}\$, as probabilities always have to add up to 1. The value of the entropy at that point is \$H(X) = -1024\cdot \frac1{1024}\log_2\left(\frac1{1024}\right) = -(-10)=10\$ (bit).
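One way to do that analysis, sketched here with Jensen's inequality for the concave logarithm (\$N=1024\$ is just shorthand introduced for this sketch, and the sums run over the symbols with nonzero probability):

$$H(X) - \log_2 N = \sum_{i:\,P(x_i)>0} P(x_i) \log_2\left(\frac{1}{N\,P(x_i)}\right) \le \log_2\left(\sum_{i:\,P(x_i)>0} P(x_i)\cdot\frac{1}{N\,P(x_i)}\right) \le \log_2(1) = 0$$

So \$H(X)\le\log_2 N = 10\$ (bit), and equality requires all \$\frac{1}{N\,P(x_i)}\$ to be identical, i.e. \$P(x_i)=\frac1N\$ for every symbol.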